Elon Musk’s launch post was characteristically terse: “an order of magnitude smarter and faster.” What he didn’t say was how. That’s the interesting part.

Grok 4.20, launched in public beta in mid-February 2026, isn’t an incremental model update. It’s a different philosophy about what frontier AI inference should look like. Instead of dumping more parameters into a single autoregressive model and scaling training compute, xAI made a bet on architecture: distribute the cognitive load across specialized agents that debate, verify, and synthesize in parallel before any output reaches you.

And then they built one of the most powerful supercomputers on earth to run it.

This piece breaks down the actual mechanics – token flow, context window management, orchestration protocol, inference throughput, and the training methodology that got them here. No hype layer.

The Base Architecture: Transformer + RL at Pretraining Scale

Grok 4.20 is built on a transformer-based neural network foundation – the same core architecture as GPT, Claude, and Gemini. What differs is what happens during and after pretraining.

The RL Investment is the Story

Most frontier labs apply Reinforcement Learning from Human Feedback (RLHF) as a fine-tuning layer after pretraining is complete. It typically represents 20-30% of the total compute budget. xAI flipped this ratio for Grok 4 – RL now consumes approximately 50% of the total training budget, and critically, it’s applied at the pretraining scale, not as a surface-level refinement.

What does “RL at pretraining scale” actually mean? Standard RLHF fine-tunes a model’s style and safety behavior using human preference data. RL at pretraining scale means the model is learning to solve problems through trial-and-error feedback loops on compute equivalent to the initial language modeling pretraining. The model develops problem-solving heuristics from first principles, not just mimicry of human text patterns.

The result: Grok 4 models reason about problems differently from models trained with conventional RLHF pipelines. They’re more likely to explore dead ends, discard them, and re-approach – a behavior that looks inefficient in isolation but produces more reliable outputs on hard problems.

Parameter Scale

xAI reports Grok 4.20 is built on an approximately 3 trillion parameter base. Compare that to:

| Model | Reported Parameters |

|---|---|

| Grok 4 | 1.7 trillion |

| Grok 4.20 | ~3 trillion (unverified) |

| GPT-5 | Undisclosed |

| Grok 5 (upcoming) | ~6 trillion (projected) |

The exact figures aren’t publicly audited, so treat that 3T number with appropriate caution. But the scaling direction is confirmed – this is a meaningful architectural leap from Grok 4, not just a fine-tune.

Multimodal Training from the Ground Up

Grok 4.20 is natively multimodal, meaning text, images, and video were trained together in a unified architecture from the start. This is architecturally distinct from models like GPT-4V, where visual understanding was bolted on via a separate vision encoder. Native multimodal training creates richer cross-modal representations – the model genuinely “sees” context across modalities rather than processing them as separate inputs that get concatenated.

The training data source is also unusual: X’s full firehose provided a massive stream of text, images, and video with real-world temporal context. That temporal signal – posts, replies, quote-tweets, market reactions – is structurally different from curated datasets and gives the model a different kind of world knowledge.

The Four-Agent Orchestration: How It Actually Works

This is the part most explanations get wrong. Grok 4 Heavy’s multi-agent system is commonly described as “four separate AI models working together.” That’s inaccurate. Here’s the correct picture.

Shared Weights, Specialized Roles

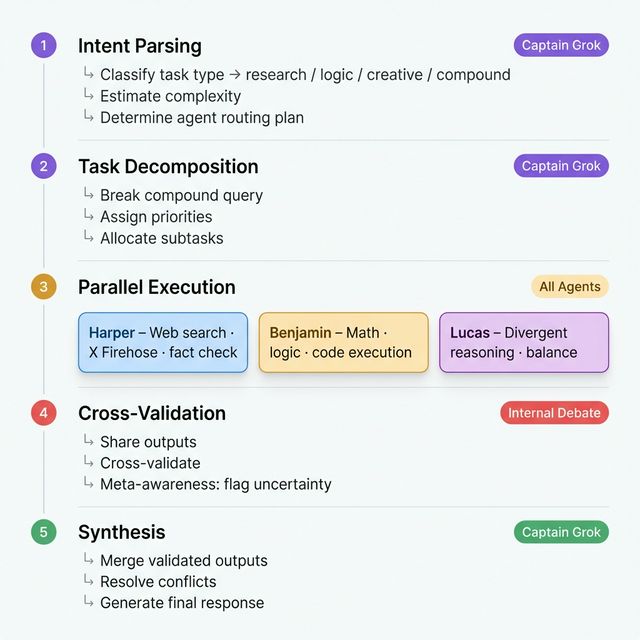

The four agents – Captain Grok, Harper, Benjamin, and Lucas – share the same underlying model weights. They don’t operate as four separate model instances with distinct architectures. Instead, they’re different invocations of the same base model, differentiated by specialized system prompts, context framing, and role-specific tool access.

Think of it like a single human expert who can shift between roles: analyst, researcher, mathematician, writer. The underlying cognition is the same, but the framing shapes which capabilities get activated and how outputs are generated.

The agents can dynamically spawn additional sub-agents (reported to scale from 4 to 32 depending on task complexity), all operating from the same shared context pool.

The 5-Phase Orchestration Protocol

Here’s how a request actually flows through Grok 4 Heavy:

The cross-validation step in Phase 4 is where the real magic happens – and where the token cost multiplies. Each agent’s intermediate reasoning is visible to the others, meaning the effective context being processed at synthesis time is the union of all four agent streams, not just the original query plus one response.

The X Firehose Advantage (Harper’s Edge)

Harper has native access to X’s real-time data stream via WebSocket connections – not static scraped archives, but live, millisecond-latency social data. For tasks like market sentiment analysis, breaking news synthesis, or public opinion monitoring, this creates a structural advantage no competitor can replicate with standard web search.

The constraint: X’s firehose reflects a specific demographic and carries X’s content moderation decisions (or lack thereof). Harper’s fact-checking is only as unbiased as its data source.

Context Window: The 2M Token Architecture

The Grok 4 API offers a 256,000-token context window. Grok 4.20 extends this to 2 million tokens in specific configurations. But “2 million token context” is an architecture challenge, not just a number.

The Sliding Window Memory System

Holding 2 million tokens in active KV (key-value) cache simultaneously would require staggering memory bandwidth. Grok 4.20 uses a sliding-window memory mechanism to manage this:

-

As context grows toward the 2M token ceiling, oldest tokens are progressively evicted to maintain total memory footprint within hardware limits

-

The model maintains a compressed semantic summary of evicted context that persists in a separate memory layer

-

A recency-weighted attention mechanism prioritizes recent tokens while retaining high-salience older tokens via the summary

This is architecturally similar to what DeepMind implemented in Gemini 1.5’s long-context work, but at a larger scale. The practical implication: true 2M active token attention is not what you’re getting. You’re getting a combination of full attention for recent context plus compressed retrieval for older context. For most use cases – long documents, extended code sessions, multi-document research – this is functionally indistinguishable from true full-context attention. For tasks requiring precise recall of specific tokens from early in the conversation, degradation can occur.

What 2 Million Tokens Actually Holds

To put the context window in concrete terms:

| Content Type | Approximate Token Count |

|---|---|

| This article (full text) | ~3,500 tokens |

| Novel (avg. 90,000 words) | ~120,000 tokens |

| Full Wikipedia English (text only) | ~1.8 billion tokens |

| React codebase (50K lines) | ~1.1 million tokens |

| 200 research PDFs (avg. 8 pages each) | ~1.6 million tokens |

At 2M tokens, Grok 4.20 can ingest roughly two large codebases, or a year’s worth of earnings call transcripts, or an entire legal discovery document set in a single context window. This is where the enterprise value case gets genuinely compelling.

The Multi-Agent Context Challenge

Here’s a token consumption problem that follows directly from the orchestration architecture: in Grok 4 Heavy mode, each agent processes the full shared context plus its own intermediate outputs. That means a 100K token user query essentially becomes a 400K+ token processing job across four parallel agent streams (each reading the full shared context) before synthesis.

This is why Grok 4 Heavy uses approximately 10x the compute of standard Grok 4 for equivalent queries. It’s not overhead – it’s the mechanism. You’re paying for the cross-validation.

Token Economics: What You’re Actually Paying For

The token billing structure in Grok models reflects the architectural complexity. Unlike simpler models that bill input → output, Grok’s API bills across multiple token types:

| Token Type | What It Is | Approximate Cost (Grok 4 Fast API — Grok 4.20 API not yet public) |

|---|---|---|

| Input tokens | Your query + conversation history | $0.20 / 1M tokens |

| Reasoning tokens | Agent internal chain-of-thought | $0.20 / 1M tokens |

| Completion tokens | Final response to user | $0.50 / 1M tokens |

| Image tokens | Visual content inputs | Variable by resolution |

| Cached prompt tokens | Repeated system/context hits | $0.05 / 1M tokens (75% discount) |

Reasoning tokens are the hidden cost. In standard single-model inference, the model’s internal thinking is not billed separately – it happens during the forward pass and the cost is embedded in output tokens. In Grok’s multi-agent architecture, reasoning tokens are the intermediate agent outputs during Phases 2-4 of the orchestration protocol. On complex multi-step tasks, reasoning tokens can 2-4x your total token bill relative to what you’d expect from just counting input and output tokens.

The Cache Math

For repeated or templated queries – think daily report generation, recurring data analysis, or app system prompts – prompt caching reduces costs aggressively:

-

Exact-match cached prompts: 75% discount (from $0.20 to $0.05 per 1M tokens)

-

Batch API for high-volume workloads: 50% off all token categories

For an enterprise workflow processing 10 million tokens of cached context daily, that’s a real number: $2,000/day without caching vs $500/day with it.

API vs SuperGrok Subscription Comparison

| Usage Pattern | API Pay-Per-Token | SuperGrok Heavy ($300/mo) |

|---|---|---|

| < 500K tokens/day | API is cheaper | Overpriced |

| 500K – 5M tokens/day | Comparable | Better value |

| > 5M tokens/day | API scales better | Subscribe to API credits instead |

| Irregular/burst usage | API wins | Subscription wastes spend |

Inference Throughput: The Real Bottleneck

Benchmark scores are about capability. Throughput is about usability. Here’s what the numbers look like in practice.

Standard Grok 4 (Single Agent)

-

Output speed: ~40.9 tokens per second (measured via artificialanalysis.ai)

-

First-token latency: ~10.65 seconds average

-

Cloud inference context: xAI’s Colossus nodes, H100/B200-based

~40.9 tokens/second puts standard Grok 4 in roughly the same tier as Claude Opus 4.6 (which runs at approximately 35-50 TPS depending on load) and well behind Grok 4 Fast (which benchmarks at ~98.5 TPS with sub-1-second latency). The first-token latency around 10-11 seconds is noticeable in interactive applications but significantly better than early reports suggested.

Grok 4 Heavy (Multi-Agent)

Throughput for Heavy mode is not independently benchmarked as a single number because the architecture changes delivery characteristics:

-

Agents run in parallel, so total wall-clock time doesn’t scale linearly with the 10x compute usage

-

First-token latency is significantly higher than standard mode — estimates of 90-180 seconds for complex queries are widely cited (orchestration phases 1-4 complete before synthesis output begins), though xAI has not published official Heavy latency benchmarks

-

Output arrives in one consolidated stream rather than streaming tokens progressively through the four-agent stages

This is a fundamentally different user experience from standard streaming inference. You wait longer, then get a more comprehensive answer. For use cases like medical second opinions, complex engineering analysis, or financial modeling – where quality matters more than speed – this tradeoff is reasonable. For conversational AI chat, it’s disqualifying.

The Colossus Throughput Infrastructure

At the hardware level, Grok 4.20 runs on xAI’s Colossus Phase 3 build:

-

300,000+ NVIDIA H100 and B200 GPUs total

-

194 Petabytes/second total memory bandwidth

-

3.6 Terabits/second network bandwidth per server

-

1+ Exabyte storage capacity

The memory bandwidth figure is what matters most for attention-heavy inference with large context windows. 194 PB/s is the number that makes 2M token context feasible at reasonable latency – you need bandwidth to move KV cache data through the attention stack at inference time.

The 122-day build from zero to 100K GPUs for Grok 3 training was itself an infrastructure benchmark. Colossus has since expanded — Grok 4 training utilized 300,000+ GPUs including H100, H200, and B200 units, with xAI planning further expansion toward 1 million GPUs.

Rapid Learning: Weekly Model Updates

This is the feature Musk mentioned but didn’t explain: Grok 4.20 has a “rapid learning architecture” with weekly release cycles and daily bug fix deployments.

This is actually a significant departure from how frontier models are typically shipped. Claude, GPT, and Gemini update on monthly-to-quarterly cycles with significant internal validation before deployment. Weekly cycles imply:

-

Online learning or fine-tuning pipelines are running continuously on user interaction data

-

A/B testing infrastructure is baked into the inference stack to validate updates before full rollout

-

Rollback capabilities are mature enough to handle weekly change velocity

The architectural implication: Grok 4.20 isn’t static. The model you’re using in week 3 of the beta is not the same model as week 1. For benchmark reproducibility and enterprise reliability, this is a concern. For continuous improvement, it’s a feature.

The Hard Constraints

No honest technical writeup skips this section.

Compute cost per query is genuinely high. The 10x compute multiplier for Heavy mode means Heavy is not viable for cost-sensitive, high-volume applications. At scale, you’d want to route simple queries to standard Grok and reserve Heavy for genuinely complex tasks.

Latency is a product limiter. A 90-180 second first-token delay puts Grok 4 Heavy outside the acceptable UX envelope for consumer-facing interactive applications. Its natural habitat is asynchronous workflows, background analysis tasks, and use cases where you submit a complex query and return later.

Context window degradation at the edge. The 2M token window is real, but performance degrades nonlinearly as context grows beyond approximately 500K tokens. The sliding-window compression introduces noise for long-horizon retrieval tasks. This is a physics constraint – you can’t fully attend over 2M tokens with current hardware without approximations.

Data provenance is a liability. Heavy reliance on X training and inference data means the model inherits X’s demographic skews, content moderation decisions, and whatever political signal is embedded in that corpus. This is a known issue that xAI has not publicly addressed with specificity.

What This Means for Developers and Enterprise

Grok 4.20’s architecture creates specific use case niches where it’s genuinely best-in-class. If you’re building any of these, the architecture is worth taking seriously:

-

Financial data analysis – real-time X data + 2M context + math agent (Benjamin) = a genuinely differentiated tool

-

Long-document research synthesis – 2M context at reasonable quality gets you through full legal discovery sets or technical due diligence packages

-

Medical second-opinion workflows – multi-agent cross-validation reduces single-point hallucination risk vs single-model alternatives

-

Complex engineering Q&A – Benjamin’s dedicated logic and code focus produces higher-fidelity technical outputs on specialized problems

Where it struggles: conversational chat (latency), low-cost high-volume API workloads (reasoning token overhead), and politically sensitive enterprise contexts (data provenance concerns).

The Bottom Line

Grok 4.20 is a genuinely novel architecture – not novel in the transformer sense, but novel in how it orchestrates intelligence at inference time. The shared-weight multi-agent approach, the RL-at-pretraining-scale training methodology, and the X Firehose integration create a combination that’s meaningfully different from what Anthropic, OpenAI, or Google are shipping.

The throughput constraints and reasoning token costs are real ceilings. The 2M context window is powerful but operates under approximation at the far end. And the weekly update cadence is either a feature or a risk depending on your tolerance for model drift in production.

I’ve been tracking the AI infrastructure polarization story for months now – the SpaceX-xAI merger and the Colossus build both point to xAI making a long-duration bet on vertical integration that rivals Apple’s chip-to-software stack. If Grok 5’s 6T parameters arrive on that infrastructure with the same orchestration architecture, the competitive dynamics shift meaningfully.

Watch the Grok 5 timeline. And watch whether the weekly update cadence produces measurable benchmark improvement over the next 90 days.

FAQ

How many tokens does a typical Grok 4 Heavy query consume?

A complex multi-step query that appears to be 5,000 input tokens and generates 2,000 output tokens in standard mode will typically consume 15,000-25,000 total tokens in Heavy mode, once you account for reasoning tokens across all four agents during the orchestration phases. Budget 3-5x your expected input+output count for Heavy mode queries.

What is the difference between Grok 4 and Grok 4 Heavy?

Grok 4 is single-agent inference with a 256K context window (~36-41 TPS, ~13-14 seconds first-token latency). Grok 4 Heavy adds the four-agent orchestration system (Captain Grok, Harper, Benjamin, Lucas), extends context to 2M tokens, uses ~10x the compute per query, and has 90-180 second first-token latency. Heavy scored 50.7% on Humanity’s Last Exam vs 25.4% for standard Grok 4.

Does Grok 4.20 support function calling and tool use?

Yes. The agents have native tool invocation built into the orchestration protocol. Harper uses web search and X Firehose tools natively; Benjamin can invoke a code interpreter sandbox; all agents can invoke custom tools via the API’s function-calling interface. This is baked into the orchestration pipeline, not layered on top.

Is the 2M token context window always active?

No. The 2M context window is available in specific API configurations (reported for Grok 4.1 Fast and Grok 4.20). Standard API access offers 256K tokens. The 2M window also operates via a sliding-window memory approximation – full-attention over 2 million tokens simultaneously is not computationally feasible on current hardware at acceptable latency.

How does Grok 4.20’s RL training differ from standard RLHF?

Standard RLHF applies reinforcement learning as a post-training fine-tune representing ~20-30% of compute budget, primarily to align model behavior and reduce harmful outputs. xAI’s approach for Grok 4 allocates ~50% of total compute to RL and applies it at the scale of pretraining, meaning the model develops core reasoning capabilities through RL trial-and-error loops – not just behavioral alignment. This produces first-principles reasoning patterns rather than RLHF-shaped conversational polish.