The gap between “it works in the prompt” and “it works in production” is the single biggest bottleneck in AI engineering today. We’ve all been there: you spend three hours refining a perfect system prompt in a browser-based playground, only to realize that moving that logic into a local codebase, setting up the file system context, and orchestrating it with real terminals is a weekend-long slog.

But Google DeepMind’s Logan Kilpatrick just teased a solution that looks less like a bridge and more like a high-speed teleporter. Next week, Google is set to reveal a native integration between Google AI Studio and Antigravity, the agent-first IDE that’s been shaking up the developer stack since its launch in late 2025.

> Antigravity + Google AI Studio
>
> Stay tuned for next week : )
>
> Logan Kilpatrick (@OfficialLoganK), February 10, 2026

The Context: Why Antigravity is the “Mission Control” for Agents

If you haven’t made the jump to Antigravity yet, honestly, you’re missing the shift from “AI Copilots” to “Agent Systems.” Launched in November 2025 alongside Gemini 3, Antigravity is technically a VS Code fork, but it isn’t just another VS Code skin: it was designed for humans to manage fleets of autonomous agents. Features like Mission Control for parallel agent orchestration and the Agentic Inbox for tracking asynchronous work turned the IDE from a text editor into a command center.

This connects directly to the Cursor vs. Antigravity debate we’ve been tracking for months. While competitors are still refining the “predict the next characters” experience, Antigravity is doubling down on “Agentic Architecture,” letting agents not just suggest code but also browse the web for documentation, run their own tests, and verify their own PRs. It’s the difference between a co-pilot and a co-founder.

The Breakthrough: The “AI Studio to Antigravity” Path

So what is Kilpatrick hinting at? Call it a “Pit Stop” strategy. Think of Google AI Studio as the design studio where you sketch the chassis: it’s where you test the limits of Gemini 3 Flash’s agentic vision or see how Gemini 3 Pro handles a complex reasoning chain.

The upcoming integration is expected to allow a “one-click handoff.” You prototype the agent’s logic in the cloud via AI Studio, and with a single command, that entire context (prompts, few-shot examples, and model parameters) is injected directly into an Antigravity workspace. No more copying JSON configs. No more re-explaining your codebase context to a new agent instance.
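To make “that entire context” concrete, here is a minimal Python sketch of what such a handoff bundle could hold: the system prompt, few-shot examples, and model parameters serialized as a single unit into a workspace. This is not AI Studio’s or Antigravity’s actual format; the `HandoffBundle` class, the `.handoff/context.json` path, the field names, and the placeholder model ID are all hypothetical, chosen only to illustrate the idea.

```python
import json
import tempfile
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class FewShotExample:
    """One input/output pair used to steer the model (hypothetical schema)."""
    user: str
    model: str


@dataclass
class HandoffBundle:
    """Hypothetical container for everything a playground session holds."""
    system_prompt: str
    examples: list[FewShotExample] = field(default_factory=list)
    model: str = "placeholder-model-id"  # illustrative, not a real model ID
    temperature: float = 1.0
    max_output_tokens: int = 8192


def write_bundle(bundle: HandoffBundle, workspace: Path) -> Path:
    """Serialize the whole bundle into the workspace as one JSON file."""
    target = workspace / ".handoff" / "context.json"  # hypothetical location
    target.parent.mkdir(parents=True, exist_ok=True)
    # asdict() recursively converts the nested FewShotExample objects too.
    target.write_text(json.dumps(asdict(bundle), indent=2))
    return target


def read_bundle(path: Path) -> HandoffBundle:
    """Rebuild the bundle on the IDE side, restoring the nested examples."""
    data = json.loads(path.read_text())
    data["examples"] = [FewShotExample(**ex) for ex in data["examples"]]
    return HandoffBundle(**data)


if __name__ == "__main__":
    bundle = HandoffBundle(
        system_prompt="You are a careful refactoring agent.",
        examples=[FewShotExample(user="Rename foo to bar", model="Done: 3 files changed")],
        temperature=0.2,
    )
    with tempfile.TemporaryDirectory() as tmp:
        path = write_bundle(bundle, Path(tmp))
        # The round trip should reproduce the original bundle exactly.
        assert read_bundle(path) == bundle
```

Treating the context as one typed object, rather than loose JSON fragments copied between tools, is what makes a lossless round trip easy to check.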

I’ve been watching this space closely, and this is exactly what we need. As Antigravity creator Anshul Ramachandran hinted, the goal is for the agent to know exactly where you left off in the playground when it lands in your local terminal. It’s about reducing the “context tax” we’ve all been paying.

The Comparison: Jules vs. Gemini CLI vs. Antigravity

Google’s lineup is starting to look crowded—is it a feature or a bug? Developers have been voicing confusion over where Google Jules and the Gemini CLI fit into this.

| Tool | Primary Use Case | Workflow |
|---|---|---|
| Gemini CLI | Lightweight Terminal Tasks | “Reason-and-Act” loops from the CLI. |
| Google Jules | Asynchronous Maintenance | “Fire and Forget” batch tasks against GitHub repos. |
| Antigravity | Active Agentic Development | Real-time orchestration of multiple agents across the full stack. |

The AI Studio integration turns Antigravity into the definitive “execution layer.” You design the strategy in the browser, and you execute the “Pit Stop” in the IDE. It’s a clean, vertical stack that competitors will find hard to mimic.

What This Means For You

If you’re a developer on the paid tier, the news gets better. Kilpatrick noted that the raw performance issues and rate limits that plagued the late 2025 launch are being aggressively addressed. For paid users, Tier 1 status is shifting toward significantly more “gas in the tank” for long-running agentic missions.

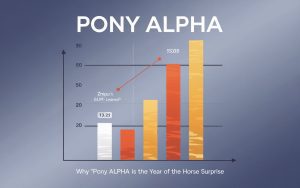

But here’s what nobody’s asking: will this lead to “agentic fragmentation”? If every IDE has its own cloud playground, do we lose the portability of our prompts? For now, Google’s lead in multimodal context makes them the horse to bet on.

Practical Handoff Example (Mockup)

```
$ antigravity import --studio-id "project-gemini-v3" --context-depth full
```

A conceptual look at the forthcoming CLI-to-IDE handoff command.

The Bottom Line

Google is building the first truly vertical AI development stack. By connecting the accessibility of AI Studio with the raw power of the Antigravity agentic engine, they are removing the last major friction point in the AI-native development cycle. Expect the full reveal next week—and expect your weekend workflows to change forever. (Honestly, I can’t wait.)

FAQ

Is Antigravity free for all users?

Currently, Antigravity is available in public preview for Windows, macOS, and Linux. While there is a free tier with generous rate limits, the advanced integration features and highest-tier Gemini 3 models are increasingly optimized for paid API users.

Can I use non-Google models in Antigravity?

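Yes. Despite being a Google DeepMind product, Antigravity supports a range of non-Google models, including the Claude 4.5 series and even MiniMax M2.1. The Model Context Protocol (MCP) is a separate feature: it connects agents to external tools and data sources rather than supplying models.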

How does this differ from the Gemini CLI Companion?

The Gemini CLI Companion is an extension that gives the Gemini CLI basic awareness of your editor context, such as open files and selected text, in VS Code and compatible forks. The AI Studio to Antigravity integration goes deeper, allowing for full state transfer and “agentic state” persistence that isn’t possible with a simple extension.