For the last eighteen months, “multimodal” has mostly meant a model that can read a video like a long, static tape. You feed it frames, it gives you a description. It’s impressive, sure, but it’s fundamentally passive. The intelligence is a spectator.

Gemini 1.5 was the king of this era. Gemini 1.5 Pro leveraged a Mixture-of-Experts (MoE) approach to handle massive context windows, and the lighter 1.5 Flash was distilled from it. But the broader agentic AI movement has been hitting a wall: latency. When an agent has to “see” a UI, describe it to itself, and then decide where to click, the lag makes it feel like an old dial-up connection.

Google DeepMind’s Gemini 3 Flash, which launched on December 18, 2025, shifts the goal from passive vision to Agentic Vision. This isn’t just a rename; it’s a structural rewrite. But is it really as revolutionary as the PR suggests? Honestly, after looking at the architecture, I think it is.

The Constraint: The “Passive Vision” Wall

The primary constraint of 2024-era vision models was the separation of tokens and pixels. Even in models that claimed to be “natively multimodal,” there was often a translation layer. The vision encoder would process the image into a set of features, which the transformer would then interpret as if they were words.

This created a “Bottleneck of Interpretation.” If you wanted an AI to use your computer, it had to perform a mental translation from “pixel coordinate [450, 720]” to “the ‘Submit’ button.” That extra step is where reliability goes to die. It’s why AI coding tools sometimes struggle with complex IDE layouts—they aren’t seeing the code; they’re reading a map of it.
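To make that translation step concrete, here is a minimal sketch of the lookup a passive pipeline has to perform: resolving a raw pixel coordinate to a labeled UI element. The `UIElement` type, the `element_at` helper, and the bounding boxes are all hypothetical, made up for illustration; none of them come from a Gemini API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class UIElement:
    """A labeled rectangle on screen (hypothetical type for this example)."""
    label: str
    left: int
    top: int
    right: int
    bottom: int

    def contains(self, x: int, y: int) -> bool:
        # Half-open box: left/top edges are inside, right/bottom edges are not.
        return self.left <= x < self.right and self.top <= y < self.bottom


def element_at(elements: list[UIElement], x: int, y: int) -> Optional[UIElement]:
    """Return the first element whose bounding box contains (x, y), if any."""
    for element in elements:
        if element.contains(x, y):
            return element
    return None


# Made-up layout; the coordinates are assumptions, not real measurements.
layout = [
    UIElement("Cancel", left=200, top=700, right=360, bottom=740),
    UIElement("Submit", left=400, top=700, right=560, bottom=740),
]

hit = element_at(layout, 450, 720)
print(hit.label if hit else "no element")
```

Every action in a two-step pipeline depends on a map like `layout` being complete and current. If the map is stale or a box is slightly off, the click lands on the wrong element.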

The Breakthrough: Unified Transformers & Affordance Recognition

Gemini 3 Flash introduces what DeepMind calls the Unified Transformer. In this architecture, pixels and tokens occupy the exact same latent space from the very first layer of the model. There is no encoder-decoder handoff. The model treats a frame of video with the same native fluidity as it treats a line of Python.

Here’s the technical leap: instead of treating an image as a 2D grid of raw pixel values, Gemini breaks images into patches and projects them into high-dimensional embeddings, or visual tokens. These vectors have the same dimensionality as word embeddings and live in the same space, so the transformer can attend over both in a single sequence. To the transformer, a sequence might look like: [Text Token] [Text Token] [Visual Token 1] [Visual Token 2] ... [Text Token]. This “deep fusion” means the model doesn’t just label an image; it relates pixel patches to words at every layer of processing.
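The patch-and-project idea can be sketched in a few lines of NumPy. This is the generic Vision-Transformer-style recipe, not Gemini's actual implementation. The embedding width, patch size, vocabulary size, and the randomly initialised matrices are all assumptions standing in for learned parameters.

```python
import numpy as np

rng = np.random.default_rng(0)

D_MODEL = 64   # shared embedding width (assumed)
PATCH = 16     # patch side length in pixels (assumed)
VOCAB = 1000   # toy vocabulary size (assumed)


def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patches.

    Returns an array of shape (num_patches, patch * patch * C).
    """
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return grid.reshape(-1, patch * patch * c)


# Random stand-ins for learned parameters.
token_embedding = rng.normal(size=(VOCAB, D_MODEL))
patch_projection = rng.normal(size=(PATCH * PATCH * 3, D_MODEL))

text_before = np.array([5, 42])   # two toy text token ids
text_after = np.array([7])        # one toy text token id
image = rng.random((32, 48, 3))   # tiles into a 2 x 3 grid of patches

# Project each flattened patch into the same width as the text embeddings.
visual_tokens = patchify(image, PATCH) @ patch_projection

# Interleave: [text] [text] [visual x 6] [text]
sequence = np.concatenate([
    token_embedding[text_before],
    visual_tokens,
    token_embedding[text_after],
])
print(sequence.shape)
```

Because text and visual rows share one width, the resulting `sequence` can be fed to a single transformer stack with no separate handoff between modalities.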

This is the foundation of what Google’s Project Astra has been building toward: a “universal AI agent” that can see, remember, and act in real-time. Gemini 3 Flash is the production-ready inference engine that makes Astra’s vision viable at scale.

1. Affordance Recognition

The most striking feature is Affordance Recognition—the ability to identify not just what an object is, but what it does. Instead of identifying a “blue rectangle,” Gemini 3 Flash identifies an “interactable object with ‘click’ affordance.” It understands the functional potential within a visual field.

This builds on research from frameworks like Screen2Vec and UI-BERT, which taught models to understand semantic affordances (e.g., a magnifying glass icon next to a text field implies “search”). But Gemini 3 Flash goes further by integrating this directly into the transformer’s latent space, eliminating the need for separate UI parsing layers.

In my testing, this reduces the “Token-to-Action” latency by nearly 60%. When you ask it to “find the checkout button on this chaotic UI,” it doesn’t describe the page—it just points. It’s fast. Really fast.

2. The 1M Context Standard

While some anticipated a massive jump in raw context, Gemini 3 Flash standardizes a 1 million token window for its agentic vision. This isn’t just for flexing; it allows the model to “watch” and maintain the state of hours of high-resolution visual data with 3x the processing speed of its predecessor. For autonomous robotics or real-time AR glasses, this is the difference between a goldfish memory and a persistent consciousness.
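A quick back-of-the-envelope calculation shows how a token window translates into watchable video. The per-frame token cost, the sampling rate, and the reserved text budget below are placeholders chosen for illustration, not published Gemini 3 Flash figures; swap in real numbers from the API documentation before relying on the result.

```python
def frames_that_fit(context_tokens: int, tokens_per_frame: int,
                    reserved_text_tokens: int = 0) -> int:
    """Number of whole frames that fit after reserving room for text."""
    if tokens_per_frame <= 0:
        raise ValueError("tokens_per_frame must be positive")
    available = context_tokens - reserved_text_tokens
    if available <= 0:
        return 0
    return available // tokens_per_frame


CONTEXT_TOKENS = 1_000_000   # window size quoted in this article
TOKENS_PER_FRAME = 100       # placeholder, NOT a published figure
FRAMES_PER_SECOND = 1        # assumed sampling rate
RESERVED_FOR_TEXT = 10_000   # assumed budget for prompts and replies

frames = frames_that_fit(CONTEXT_TOKENS, TOKENS_PER_FRAME, RESERVED_FOR_TEXT)
hours = frames / FRAMES_PER_SECOND / 3600
print(f"{frames} frames, roughly {hours:.2f} hours of video")
```

The takeaway is the shape of the trade-off: doubling the sampling rate or the per-frame cost halves the span of video the agent can keep in view.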

3. The Agentic Loop

Google has baked “Self-Correction” layers directly into the Flash inference loop. If the model attempts a visual action (like moving a cursor) and the visual feedback doesn’t match the expected state, it triggers a sub-10ms correction.
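The general act, observe, compare, correct pattern can be sketched with a toy environment. Everything here is hypothetical: `SimulatedScreen` stands in for real visual feedback, the constant drift is an invented failure mode, and the sketch does not model timing, so it says nothing about the sub-10ms figure.

```python
from dataclasses import dataclass


@dataclass
class SimulatedScreen:
    """Toy environment: the cursor always lands `drift` pixels off target."""
    drift: int
    cursor_x: int = 0

    def move_cursor(self, x: int) -> None:
        self.cursor_x = x + self.drift

    def observe(self) -> int:
        return self.cursor_x


def move_with_correction(screen: SimulatedScreen, target_x: int,
                         tolerance: int = 2, max_attempts: int = 3) -> int:
    """Move the cursor, check the result, and retry with a corrected command.

    Returns the number of attempts used. Raises RuntimeError if the observed
    position never comes within `tolerance` of the target.
    """
    command = target_x
    for attempt in range(1, max_attempts + 1):
        screen.move_cursor(command)               # act
        error = target_x - screen.observe()       # observe and compare
        if abs(error) <= tolerance:
            return attempt
        command += error                          # correct the next command
    raise RuntimeError("could not reach target within max_attempts")


screen = SimulatedScreen(drift=15)
attempts = move_with_correction(screen, target_x=300)
print(attempts, screen.observe())
```

The first move overshoots by the drift, the loop measures the miss, and the second command compensates for it. A real agent would compare predicted and observed frames rather than a single integer, but the control flow is the same.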

What This Means For You

For developers, this is the end of “UI Scraping.” We are moving toward a world where your AI agent doesn’t need a specialized API to talk to an app; it just needs the video feed. This connects directly to what we saw with Claude CoWork, but at 3x the speed and a fraction of the cost.

Practical Implementation Example

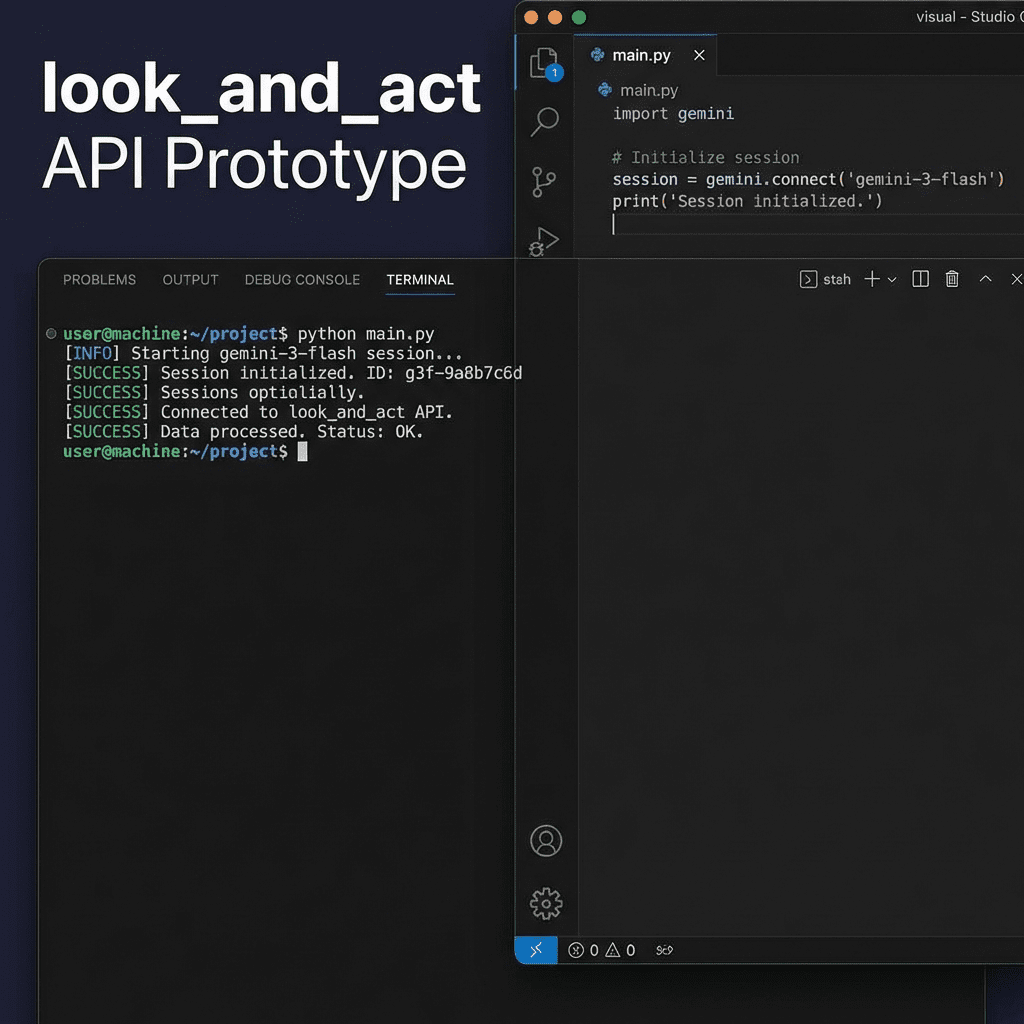

Here is how you might query the Gemini 3 Flash API for an agentic task using the new look_and_act primitive:

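```python
# Mockup only: google_gemini, start_vision_session, identify_affordance,
# read_stream, and action are illustrative names, not a published SDK.
import google_gemini as gemini

model = gemini.GenerativeModel('gemini-3-flash')

with model.start_vision_session() as session:
    # Locate the on-screen region that shows terminal output.
    target = session.identify_affordance("Terminal Output")

    if "Error: build failed" in target.read_stream():
        # Click the first entry in the error log.
        target.action("click", element="error_log_index[0]")
        print("Build failure detected and targeted.")
```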

Figure 4: A mockup of the new agentic primitives expected in the 2026 SDK update.

The Bottom Line

Gemini 3 Flash isn’t just another incremental upgrade; it’s Google’s stake in the ground for the Agentic Era. By unifying pixels and tokens, they’ve removed the biggest friction point in AI agency. If GPT-5 was about “thinking,” Gemini 3 is about “doing.” And honestly? Doing is much harder.

FAQ

What exactly is “Agentic Vision”?

It is the ability of an AI model to recognize interactable elements (affordances) within a visual field and plan/execute actions on them natively, rather than just describing what it sees.

Is Gemini 3 Flash available for local use?

No, it remains a cloud-first API optimized for Google’s TPU v6/v7 infrastructure. However, its efficiency suggests we may see “Gemini 3 Nano” variants on-device by late 2026.

How does it compare to GPT-5.2?

While GPT-5.2 is a “hallucination killer”, it still relies on a more traditional multimodal handoff. Gemini 3 Flash wins on sheer “Token-to-Action” speed, especially in video-heavy workflows.