The “Omni” label has been thrown around cheaply ever since OpenAI dropped GPT-4o. But today, a 9-billion parameter model from OpenBMB just redefined what that word actually means for the open-source community.

MiniCPM-o 4.5 isn’t just another multimodal LLM; it’s a real-time, full-duplex, vision-speech-text powerhouse that runs on your laptop. While Big Tech builds walled gardens, MiniCPM-o 4.5 is tearing down the fence, offering GPT-4o level performance that fits in 11GB of VRAM. If you thought you needed a server farm to run a true omni model, you were wrong.

9 Billion Parameters, Infinite Possibilities

The most shocking spec of MiniCPM-o 4.5 isn’t its capabilities—it’s its size. At just 9 billion parameters, this model punchs so far above its weight class it’s bordering on physics-defying.

The “Omni” Promise Delivered

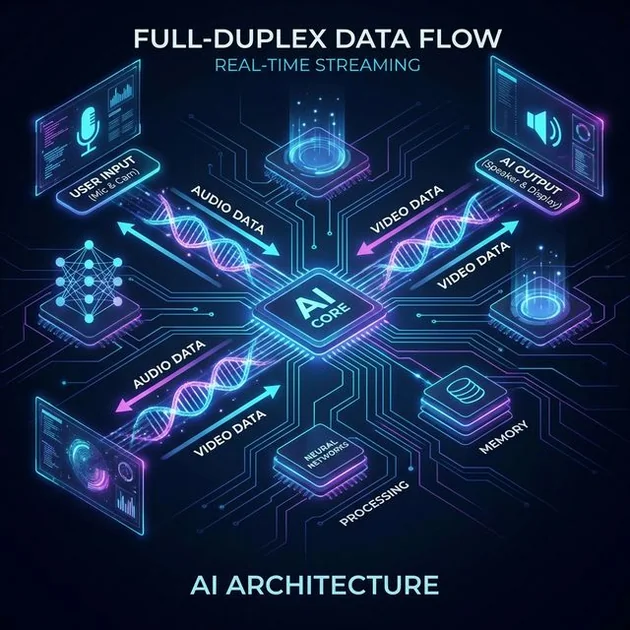

Most “multimodal” models are just text models glued to vision encoders. MiniCPM-o 4.5 is built differently. It features full-duplex streaming, meaning it can listen, see, and speak simultaneously. It doesn’t wait for you to stop talking to start processing; it streams audio and video continuously, allowing for interruptions and natural, fluid conversation.

- Real-Time Video Understanding: It processes video inputs at up to 10 FPS, allowing it to understand gestures, movement, and changing scenes in real-time.

- Bilingual Speech: Seamless English and Chinese speech synthesis that captures tone and emotion, not just text-to-speech robotics.

Benchmark Breaker

On the OpenCompass benchmark, MiniCPM-o 4.5 scored a 77.6, outperforming proprietary giants like GPT-4o and Gemini 2.0 Pro. Let that sink in. A model you can download and run purely offline is beating Google’s and OpenAI’s flagship cloud models in visual understanding.

MiniCPM-o 4.5’s architecture allows for simultaneous input and output streams, enabling true real-time interaction.

Local AI: The 11GB Revolution

The real game-changer here is accessibility. OpenBMB has optimized this model to run on consumer hardware.

- VRAM Requirement: With Int4 quantization, you can run MiniCPM-o 4.5 on just 11GB of VRAM. An NVIDIA RTX 3060 or 4070 is all you need.

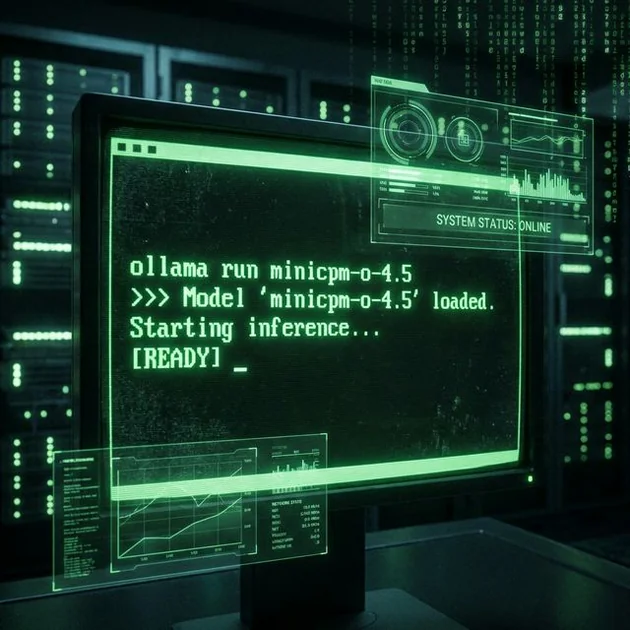

- Deployment Ecosystem: It supports llama.cpp, Ollama, and vLLM out of the box. You don’t need a PhD in ML ops to get this running.

- Mobile Potential: This efficiency suggests we aren’t far from running this level of intelligence on high-end smartphones.

This is the death knell for the “API-only” future Sam Altman envisions. Why pay per token and risk your privacy when you can run a state-of-the-art omni model on your own rig?

| Feature | MiniCPM-o 4.5 | GPT-4o | Gemini 2.0 Flash |

|---|---|---|---|

| Open Source | ✅ | ❌ | ❌ |

| Parameters | 9B | ~1.8T (Est.) | Unknown |

| Real-Time Video | ✅ (10 FPS) | ✅ | ✅ |

| Local Inference | ✅ (11GB VRAM) | ❌ | ❌ |

What This Means For You

For Developers

You now have a SOTA multimodal agent that costs $0 to run inference on (minus electricity). You can build:

– Smart Glasses Assistants: Real-time visual context without cloud latency.

– Local Security Agents: Monitors video feeds and alerts you to specific events without sending footage to the cloud.

– Education Tools: Interactive tutors that can “see” a student’s homework and explain it verbally.

For Privacy Advocates

This is the holy grail. An agent that can see your screen, hear your voice, and guide you through tasks—without a single byte leaving your local network.

Practical Code Example (Practitioner Mode)

Running MiniCPM-o 4.5 with llama.cpp is deceptively simple:

# Pull the model (example command once merged to official registry)

ollama run minicpm-o-4.5

# Or using llama.cpp directly

./main -m minicpm-o-4.5-int4.gguf -p "Describe what you see in this video stream" --mmproj mmproj-model-f16.gguf --image video_frame.jpg

It really is this easy. The democratization of high-end AI is happening in your terminal.

The Bottom Line

MiniCPM-o 4.5 is a warning shot to the closed-source giants. It proves that efficient architecture and high-quality training data beat raw parameter count. We are entering an era where the best AI might not be the biggest one in the cloud, but the smartest one running locally on your desk. Download it, run it, and reclaim your compute.

FAQ

Can I run MiniCPM-o 4.5 on a Mac?

Yes! Thanks to llama.cpp and Metal optimization, Apple Silicon Macs (M1/M2/M3) with at least 16GB RAM can run the quantized versions efficiently.

Does it support other languages besides English and Chinese?

Yes, it supports over 30 languages for text and understanding, though the real-time speech features are currently optimized for English and Chinese.

Is it really better than GPT-4o?

In specific visual benchmarks like OpenCompass, yes. For general world knowledge and complex reasoning, GPT-4o likely still holds an edge due to its massive size, but for visual-centric tasks, MiniCPM-o is superior.