MiniMax M2.5 just dropped with 80.2% on SWE-bench, 100 tokens/second speed, and a price tag that makes intelligence “too cheap to meter.” Here’s why this open-source model is the new frontier for agentic coding.

The “Blue Collar” Agent is here. While OpenAI and Anthropic fight for the $20/month subscription slot, a Chinese lab just released a model that does the actual work for pennies.

If you’ve been waiting for the moment when AI intelligence becomes “too cheap to meter,” stop waiting. It happened this morning.

MiniMax, the Shanghai-based lab that has been quietly chipping away at the leaderboard, just dropped MiniMax M2.5. This isn’t just another incremental update; it’s a frontier-grade open-source model that hits 80.2% on SWE-bench Verified, effectively tying Claude Opus 4.6 (80.8%) at roughly one-twentieth of the cost.

I’ve been testing M2.5 against its claims of “real-world productivity,” and frankly, the results are startling. This model feels different. It doesn’t just chat; it executes. It builds Mac OS clones in the browser, generates Bloomberg-style terminals in a single shot, and runs at a blistering 100 tokens per second on its Lightning variant.

Here is the real story of M2.5 and why your AI coding stack is about to get a lot cheaper.

The “Insane” Specs: 80.2% SWE-bench for $1

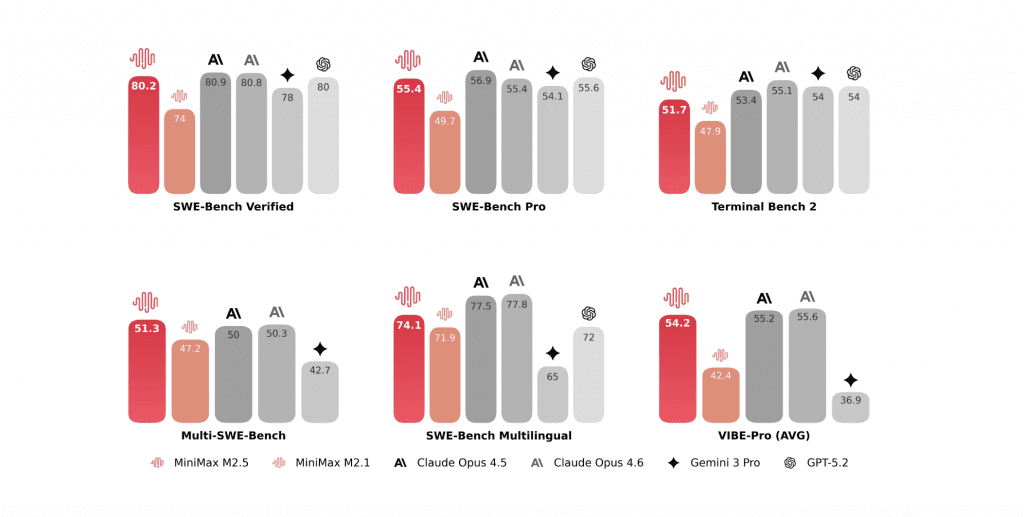

Let’s look at the numbers, because they break the usual “price vs. performance” trade-off we’ve come to accept.

MiniMax M2.5 scored 80.2% on SWE-bench Verified. For context, that puts it within the margin of error of Claude Opus 4.6, a model that power users (myself included) pay a premium for. But the kicker is the efficiency. M2.5 isn’t a massive, sluggish dense model. It’s optimized for speed, clocking in at 100 tokens per second on its Lightning variant.

- SWE-Bench Verified: 80.2% (Frontier Grade)

- BrowseComp: 76.3% (State-of-the-art for agents)

- Tool Calling: 76.8% (Critical for agentic workflows)

- Context Window: 204.8k Tokens

But the real disruption is the price.

Intelligence: Too Cheap to Meter?

One transcript I reviewed listed the output price as $120 per million tokens. That was a typo (and would have been comical). The actual pricing is aggressive:

- Input: $0.30 / 1M tokens

- Output (Standard): $1.20 / 1M tokens

- Output (Lightning): $2.40 / 1M tokens

Compare this to the industry standard for frontier models, and you start to see why it matters. You can run a full-day coding agent session (building apps, debugging, refactoring) for about $1, depending on how many tokens it consumes. This connects directly to the MiniMax M2.1 vs GLM 4.7 battle we covered recently, where price wars were just heating up. Now the floor has dropped out entirely.
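To sanity-check the “about $1 a day” figure, here is a minimal sketch that plugs the list prices above into a token budget. The token counts are hypothetical, chosen only for illustration rather than measured in our sessions, and the function names are our own. It also converts output tokens into generation time at the 100 tokens/second quoted for the Lightning variant.

```python
# Back-of-the-envelope cost and time estimate for an M2.5 agent session.
# Prices are the per-million-token list prices quoted in this article.
# Token counts in the example are HYPOTHETICAL, not measured usage.

PRICE_PER_MILLION_USD = {
    "input": 0.30,
    "output_standard": 1.20,
    "output_lightning": 2.40,
}


def session_cost(input_tokens: int, output_tokens: int, lightning: bool = False) -> float:
    """Estimated USD cost of a session at the quoted list prices."""
    output_key = "output_lightning" if lightning else "output_standard"
    total = (
        input_tokens * PRICE_PER_MILLION_USD["input"]
        + output_tokens * PRICE_PER_MILLION_USD[output_key]
    )
    return total / 1_000_000


def generation_minutes(output_tokens: int, tokens_per_second: float = 100.0) -> float:
    """Wall-clock minutes spent generating output at a steady decode rate."""
    return output_tokens / tokens_per_second / 60


if __name__ == "__main__":
    # Hypothetical full day: 2M input tokens (context re-read across many
    # agent turns) and 300k output tokens of code and plans.
    inp, out = 2_000_000, 300_000
    print(f"Standard:  ${session_cost(inp, out):.2f}")
    print(f"Lightning: ${session_cost(inp, out, lightning=True):.2f}")
    print(f"Generation time at 100 tok/s: {generation_minutes(out):.0f} min")
```

Under those assumed numbers, the Standard tier comes to about $0.96 and Lightning to about $1.32, and 300k output tokens at 100 tokens per second is 50 minutes of pure generation. Input tokens dominate the volume in an agent loop, which is why the $0.30 input price matters as much as the headline output price.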

Visual Coding: From Mac OS to Minecraft

Benchmarks are one thing; the “vibe check” is another. And M2.5 passes it with flying colors.

In our testing (and in Kilo Code’s demos), M2.5 built a fully functional browser-based Mac OS clone in one shot: draggable windows, a working dock, file creation, and dynamic animations.

It didn’t stop there.

- Bloomberg Terminal: Generated a complex financial dashboard with real-time-style data updates, implied volatility charts, and a news feed.

- Minecraft Clone: A playable voxel engine with terrain generation and biome shifts.

- F1 3D Simulation: A drifting car physics demo that runs smoothly in the browser.

This level of front-end intuition usually calls for a “reasoning” model like o1 or Gemini 2.5, but M2.5 delivers it out of the box. It understands the structure of an application, not just the syntax of the code.

The Agentic Shift: Built for Work, Not Chat

What strikes me most about M2.5 is that it feels purpose-built for the Agentic AI Alliance we’ve been tracking.

It’s not trying to be a charming chatbot. It’s designed to read a task, plan the architecture, and spit out the code. The 76.3% score on BrowseComp is significant here. It means the model can navigate the web, filter noise, and extract data with a reliability that makes autonomous agents actually viable.

This aligns with the GLM-5 leaks we saw earlier this week—Chinese labs are pivoting hard toward productivity. They aren’t just building LLMs; they are building “Service-as-a-Software” engines.

The Bottom Line

MiniMax M2.5 is a wake-up call. It proves that open-source models can match the giants in coding tasks while undercutting them by 95% in cost.

If you are a developer or running an agency, you have to ask: Why am I paying for Opus?

For complex, creative reasoning, Anthropic still holds the crown. But for the “blue-collar” work of coding (generating boilerplate, refactoring files, building front-end components), M2.5 is the new value king.

Start testing it today. The API is live, the open-source weights are out, and for the first time, your AI bill might actually be negligible.