For three years, Meta positioned itself as the “open-source champion” of AI. While OpenAI and Google hoarded their models, Meta released Llama after Llama, letting anyone fine-tune, modify, and commercialize its technology.

That era just ended.

Internal documents leaked from the newly formed Meta Superintelligence Labs (MSL) reveal two flagship models — codenamed “Mango” and “Avocado” — slated for H1 2026 release. The twist? Both are closed-source, commercial offerings. This isn’t just a product launch. It’s a strategic pivot that redefines Meta’s AI business model, driven by a new leadership team and a nuclear-powered infrastructure.

The Strategic U-Turn: Why Meta Abandoned Open Source

Let’s address the elephant in the room: Meta built its AI credibility on openness. But three converging forces have forced a hard pivot:

1. The Llama 4 “Good, Not God” Problem

The narrative started shifting in April 2025 with the release of Llama 4 “Scout” and “Maverick,” both mixture-of-experts (MoE) models with 17B active parameters (16 experts for Scout, 128 for Maverick). While these models were engineering marvels, bringing native multimodality and massive context windows to comparatively modest hardware, they failed to dethrone the reigning kings.

Benchmark data showed Scout and Maverick were competitive, but they didn’t deliver the “GPT-5 moment” the industry anticipated. The flagship Llama 4 Behemoth (288B active parameters) remained in training, and internal sentiment soured. The feeling inside Menlo Park was clear: giving away “good enough” models wasn’t creating a moat; it was just subsidizing competitors like Baichuan and DeepSeek, who were fine-tuning Llama to beat Meta at its own game.

2. The $14.3 Billion Alexandr Wang Acqui-hire

In June 2025, Meta dropped a bomb on Silicon Valley: a $14.3 billion acquisition of a 49% stake in Scale AI, primarily to secure its co-founder, Alexandr Wang.
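As a quick sanity check on the deal terms, the valuation implied by paying $14.3 billion for a 49% stake can be worked out directly. This is a back-of-the-envelope sketch that uses only the two figures quoted above; the function name is illustrative.

```python
# Implied valuation from the reported deal terms.
# Both inputs are the figures quoted in the article; nothing else is assumed.
INVESTMENT_USD_BN = 14.3   # reported price paid, in billions of dollars
STAKE_FRACTION = 0.49      # reported stake acquired


def implied_valuation(investment: float, stake: float) -> float:
    """Return the valuation implied by paying `investment` for a `stake` fraction."""
    if not 0 < stake <= 1:
        raise ValueError("stake must be in (0, 1]")
    return investment / stake


valuation_bn = implied_valuation(INVESTMENT_USD_BN, STAKE_FRACTION)
print(f"Implied valuation: ${valuation_bn:.1f}B")  # prints "Implied valuation: $29.2B"
```

In other words, the price tag values Scale AI at roughly $29 billion.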

Wang was appointed Chief AI Officer, effectively restructuring Meta’s entire AI org. He created Meta Superintelligence Labs (MSL), uniting FAIR (Fundamental AI Research) and the Product teams under one ruthless mandate: Build AGI, and build it to win.

Wang’s philosophy is distinct from the academic openness long championed by Yann LeCun, Meta’s longtime chief AI scientist. As he reportedly pitched to Zuckerberg: “Open-source builds goodwill. Closed-source builds empires.”

3. The “China Problem”

Multiple Chinese AI labs built commercial empires on the back of Llama 3 without contributing back. As we detailed in our analysis of China’s open-weight dominance, Meta effectively subsidized its biggest global competitors. The board demanded a course correction: Stop being the world’s R&D department.

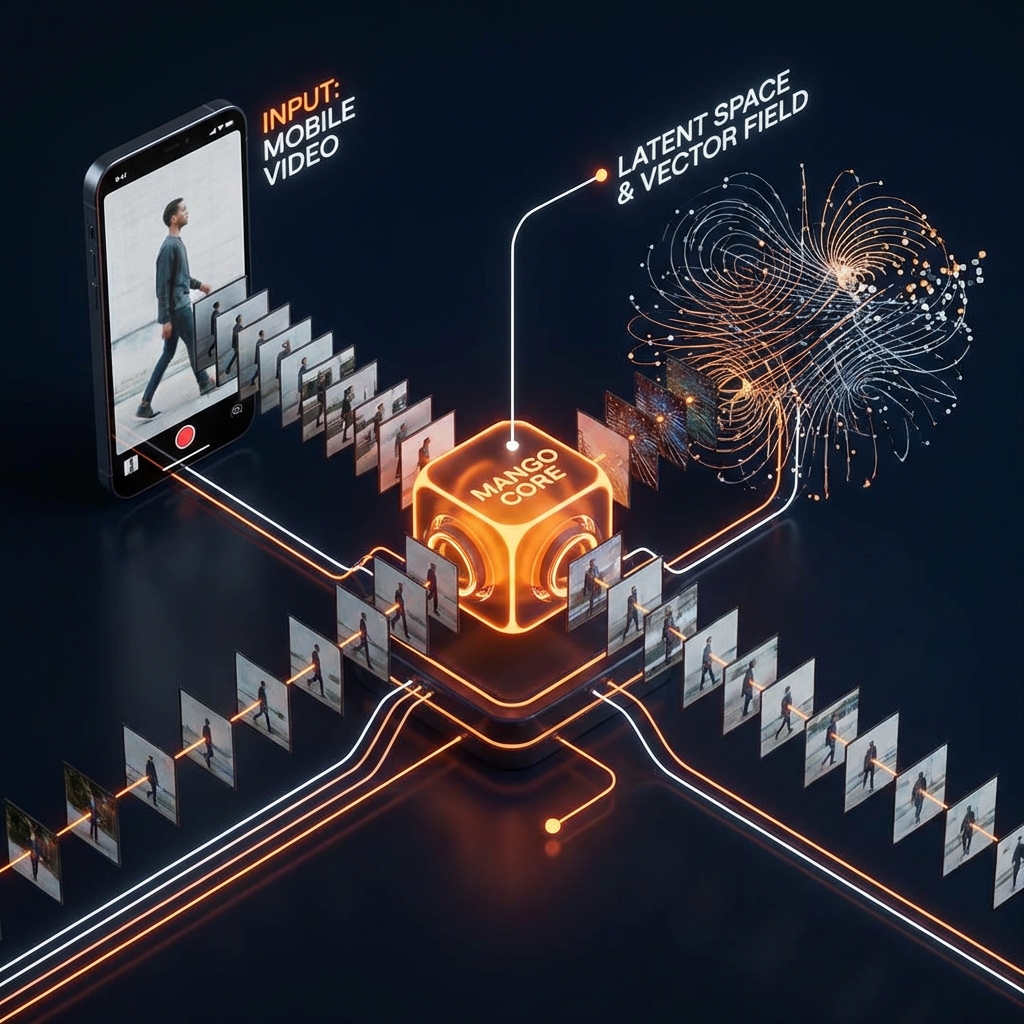

Mango: The “World Model” for Video

Mango is Meta’s answer to OpenAI’s Sora and Google’s Veo 3.1. But calling it a “video generator” sells it short. Inside MSL, it’s referred to as a “World Model”—an AI that understands physics, causality, and object permanence.

The Technical “Movie Gen” Heritage

Mango is built on the architecture of Movie Gen, Meta’s research prototype. The specs are terrifyingly high-end:

- Architecture: 30 Billion Parameter Transformer

- Video Fidelity: 16 seconds @ 16 fps (1080p native)

- Audio: Integrated 13B-parameter audio model for synchronized Foley and scores

- Training Data: 1 Billion image-text pairs, 100 Million video-text pairs
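To put the fidelity figure in perspective, the arithmetic below works out how many frames and pixels a single clip contains. It is a rough sketch: the 1920x1080 frame size and the uncompressed 8-bit RGB storage are assumptions made for illustration, not details from the leaked specs.

```python
# Rough per-clip arithmetic for "16 seconds @ 16 fps (1080p)".
DURATION_S = 16
FPS = 16
WIDTH, HEIGHT = 1920, 1080   # assumption: "1080p" means 1920x1080
BYTES_PER_PIXEL = 3          # assumption: uncompressed 8-bit RGB


def clip_stats(duration_s: int, fps: int, width: int, height: int) -> dict:
    """Return the frame count and pixel counts for one clip."""
    frames = duration_s * fps
    pixels_per_frame = width * height
    return {
        "frames": frames,
        "pixels_per_frame": pixels_per_frame,
        "total_pixels": frames * pixels_per_frame,
    }


stats = clip_stats(DURATION_S, FPS, WIDTH, HEIGHT)
raw_gb = stats["total_pixels"] * BYTES_PER_PIXEL / 1e9

print(f"Frames per clip: {stats['frames']}")            # 256
print(f"Pixels per clip: {stats['total_pixels']:,}")    # 530,841,600
print(f"Uncompressed size: {raw_gb:.2f} GB")            # 1.59 GB
```

A single 16-second clip is therefore 256 frames and over half a billion pixels, which hints at why a model of this size is needed.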

The “Social-First” Advantage

Unlike Sora, which chases cinematic realism, Mango is optimized for engagement. It understands the “viral grammar” of Instagram Reels and TikTok.

- Vertical Native: Optimized for 9:16 aspect ratios.

- Personalization: It uses Meta’s social graph to “know” you. Upload a selfie, and Mango doesn’t just put a face on a body; it reconstructs your likeness with context-aware lighting and physics.

- Instruction-Based Editing: You don’t just prompt and pray. You can edit. “Change the background to a cyberpunk Tokyo, but keep the lighting warm.” Mango performs a precise latent space edit, modifying only the background while preserving the subject’s motion perfectly.
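The idea of changing only the background while leaving the subject untouched can be illustrated with a simple masked blend. This is a toy sketch on small grids of numbers, not a description of how Mango works: the `masked_edit` function, the mask, and the values are all hypothetical.

```python
from typing import List

Grid = List[List[float]]


def masked_edit(original: Grid, edited: Grid, subject_mask: Grid) -> Grid:
    """Keep `original` where the mask is 1 (subject), take `edited` where it is 0 (background)."""
    result = []
    for o_row, e_row, m_row in zip(original, edited, subject_mask):
        result.append([m * o + (1.0 - m) * e for o, e, m in zip(o_row, e_row, m_row)])
    return result


# Hypothetical 2x2 "latents": the right-hand column is the subject.
original = [[0.2, 0.9], [0.1, 0.8]]
edited = [[0.7, 0.3], [0.6, 0.4]]        # e.g. the "cyberpunk Tokyo" version
subject_mask = [[0.0, 1.0], [0.0, 1.0]]  # 1 = preserve, 0 = replace

result = masked_edit(original, edited, subject_mask)
print(result)  # [[0.7, 0.9], [0.6, 0.8]]
```

The background cells take the edited values while the subject cells keep their originals, which is the behavior the pitch describes.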

Avocado: The Agentic Coder

If Mango is for creators, Avocado is for builders.

Avocado is a closed-source LLM specifically designed to solve Llama’s biggest weakness: complex reasoning and coding.

Why Not Just Use Llama 4?

Llama 4 Maverick is a great generalist, but it struggles with deep, multi-step agentic workflows. Developers praised its speed but found themselves defaulting to AI coding tools such as Cursor or Windsurf for complex reasoning tasks.

Avocado is trained differently. Rather than relying on next-token prediction alone, its training reportedly emphasizes:

- Verified Proof Systems: Mathematical proofs and formal logic.

- Native Tool Use: It doesn’t “hallucinate” tools; it has deep, native integrations with the WhatsApp Business API, Instagram Graph API, and Meta Ads Manager.

The “Agentic” Play

Avocado isn’t meant to be a chatbot. It’s a backend brain for autonomous agents — fitting perfectly into the Agentic AI Alliance framework we discussed last month.

Imagine a WhatsApp agent that handles customer support, checks inventory in your Shopify store (via API), processes a refund, and updates your CRM—all without human intervention. That’s the Avocado pitch: Enterprise-grade reliability.
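The workflow above can be sketched as a small tool-dispatch loop. This is a minimal illustration under stated assumptions: every tool name, data store, and order ID below is hypothetical, no real WhatsApp, Shopify, or CRM API is called, and the plan is hard-coded where a real agent would have a model produce it.

```python
from typing import Any, Callable, Dict, List, Tuple

# In-memory stand-ins for external systems (hypothetical, no network calls).
INVENTORY: Dict[str, int] = {"sku-123": 4}
REFUNDS: List[Tuple[str, float]] = []
CRM_NOTES: List[str] = []


def check_inventory(sku: str) -> int:
    """Return units in stock for a SKU (0 if unknown)."""
    return INVENTORY.get(sku, 0)


def process_refund(order_id: str, amount: float) -> str:
    """Record a refund and return a reference string."""
    REFUNDS.append((order_id, amount))
    return f"refund:{order_id}"


def update_crm(note: str) -> str:
    """Append a note to the customer record."""
    CRM_NOTES.append(note)
    return "ok"


# Only registered tools can be called; unknown names are rejected, not guessed.
TOOLS: Dict[str, Callable[..., Any]] = {
    "check_inventory": check_inventory,
    "process_refund": process_refund,
    "update_crm": update_crm,
}


def run_plan(plan: List[Tuple[str, Dict[str, Any]]]) -> List[Any]:
    """Execute each (tool_name, kwargs) step in order and collect the results."""
    results = []
    for name, kwargs in plan:
        if name not in TOOLS:
            raise KeyError(f"unknown tool: {name}")
        results.append(TOOLS[name](**kwargs))
    return results


plan = [
    ("check_inventory", {"sku": "sku-123"}),
    ("process_refund", {"order_id": "A1001", "amount": 19.99}),
    ("update_crm", {"note": "Refunded order A1001"}),
]
results = run_plan(plan)
print(results)  # [4, 'refund:A1001', 'ok']
```

Restricting calls to a fixed registry is one simple way to keep an agent from inventing tools that do not exist.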

The Nuclear Option: Prometheus Supercluster

To power these beasts, Meta is building the Prometheus Supercluster in New Albany, Ohio.

This is the hardware endgame.

- Scale: Operational in 2026 with a roadmap to 1.3 Million NVIDIA H100-equivalent GPUs.

- Power: Nuclear. Meta has signed massive power purchase agreements (PPAs) with Vistra and TerraPower to feed the gigawatt-scale hunger of Prometheus.

- Purpose: This isn’t just for training. It’s for inference at scale.
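A rough calculation shows why the "gigawatt-scale" label fits. The sketch below multiplies the roadmap GPU count by a per-GPU power draw and a facility overhead factor. The 700 W figure is the rated maximum for an H100 SXM module; the overhead factor (PUE) of 1.3 is an assumption for illustration, and CPUs, networking, and storage are ignored.

```python
# Back-of-the-envelope power estimate for the roadmap GPU count.
GPU_COUNT = 1_300_000   # from the roadmap figure above
WATTS_PER_GPU = 700     # H100 SXM rated maximum power
PUE = 1.3               # assumption: facility overhead (cooling, power conversion)


def cluster_power_gw(gpu_count: int, watts_per_gpu: float, pue: float) -> float:
    """Return estimated power draw in gigawatts."""
    return gpu_count * watts_per_gpu * pue / 1e9


gpu_only_gw = cluster_power_gw(GPU_COUNT, WATTS_PER_GPU, 1.0)
facility_gw = cluster_power_gw(GPU_COUNT, WATTS_PER_GPU, PUE)

print(f"GPUs alone: {gpu_only_gw:.2f} GW")      # 0.91 GW
print(f"With overhead: {facility_gw:.2f} GW")   # 1.18 GW
```

Even before counting the rest of the data center, the GPUs alone would draw close to a gigawatt at full load.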

Meta realizes that if Mango and Avocado take off, they will need more compute than Azure or AWS can lease them. They are becoming their own sovereign AI cloud.

The Bottom Line: The “Open” Door Closes

Meta’s pivot to closed-source AI is a gamble. But let’s be honest: it was inevitable.

For three years, they played the long game with open-source, hoping to commoditize AI infrastructure. It didn’t work. The ecosystem didn’t materialize, and competitors free-rode on billions in R&D.

Mango and Avocado represent a new Meta: one that monetizes intelligence instead of giving it away. If these models succeed, Meta transitions from “social media company experimenting with AI” to “AI company that happens to own the world’s largest social graph.”

And the irony? Mistral and Stability AI are left carrying the open-source banner while the former champion, Meta, heads in the opposite direction. The AI world is inverting.

For developers and creators, this means a bifurcated future:

- Llama Series: The “Android” of AI, meaning open, flexible, and “good enough” for most local tasks.

- Mango/Avocado: The “iOS” of AI, meaning polished, integrated, powerful, and walled-off behind a subscription.

Zuckerberg isn’t just building a metaverse anymore. With MSL, Alexandr Wang, and a nuclear-powered supercluster, he’s building the engine that will run it.