The AI coding assistant landscape just got messy. Really messy.

In January 2026, three tools are battling for dominance in your IDE: Kilo Code (fresh off an $8 million seed round), Roo Code (the “reliability-first” fork), and Cline (the OG with 5 million installs). But here’s what nobody’s talking about: two of these are forks of the third, and the community is fracturing over which fork actually delivers.

I’ve spent the last two weeks testing all three, digging through Reddit flame wars, and tracking down the real benchmarks. What I found surprised me. The “best” tool isn’t what you think—and your choice might say more about your coding style than the tools themselves.

The Fork Wars: How We Got Here

Let’s get the uncomfortable truth out of the way first.

Cline launched in mid-2024 (originally under the name Claude Dev) as an open-source VS Code extension, pioneering the “agentic coding” approach where AI doesn’t just suggest code: it plans, executes, and iterates like a junior developer. By January 2026, it had hit 5 million installations across VS Code, JetBrains, Cursor, and Windsurf.

Then came the forks.

Roo Code forked Cline to focus on reliability and customization, targeting developers who wanted more control over multi-step tasks. Kilo Code forked both Cline and Roo, positioning itself as the “best of both worlds” with a slicker UI and aggressive marketing.

The community is… divided. Some call Kilo Code an “ethical concern” that benefits from Cline’s work without meaningful contribution. Others praise it for actually shipping features faster. Meanwhile, Roo Code users swear by its configurability, even as others complain about stability issues.

And here’s the kicker: all three regularly pull updates from each other. Kilo pulls from Roo. Roo pulls from Cline. It’s a circular dependency nightmare that would make any architect cringe.
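To make the pull relationships concrete, here is a minimal sketch that models them as a small directed graph and checks whether it really contains a cycle. The edges are only the ones stated in this article (Kilo pulls from Roo and Cline, Roo pulls from Cline); `UPSTREAMS`, `has_cycle`, and `pull_order` are illustrative names, not anything these projects ship.

```python
from graphlib import TopologicalSorter

# Project -> projects it pulls changes from, as described in the article.
UPSTREAMS = {
    "Kilo Code": ["Roo Code", "Cline"],
    "Roo Code": ["Cline"],
    "Cline": [],
}


def has_cycle(graph: dict[str, list[str]]) -> bool:
    """Return True if the directed graph contains a cycle (depth-first search)."""
    visiting: set[str] = set()
    done: set[str] = set()

    def visit(node: str) -> bool:
        if node in done:
            return False
        if node in visiting:
            return True  # we came back to a node that is still on the stack
        visiting.add(node)
        found = any(visit(parent) for parent in graph.get(node, []))
        visiting.discard(node)
        done.add(node)
        return found

    return any(visit(node) for node in graph)


def pull_order(graph: dict[str, list[str]]) -> list[str]:
    """List projects so that each one appears after everything it pulls from."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(has_cycle(UPSTREAMS))
    print(pull_order(UPSTREAMS))
```

With only these edges the graph is a chain that ends at Cline. It becomes a true cycle only if Cline also pulls changes back from one of its forks, which “from each other” implies but which is not spelled out here.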

Feature Showdown: What Actually Matters

Kilo Code: The All-In-One Bet

Kilo Code raised $8 million in December 2025 with a clear mission: become the “agentic engineering platform” that eliminates friction. Here’s what that money bought:

Core Features:

- 500+ AI models from 60+ providers (including Anthropic, OpenAI, Mistral, Z.AI, and MiniMax)

- Cloud Agents that run tasks in the cloud, freeing up local resources

- Kilo Reviewer (launched January 27, 2026): automatic AI code reviews on PR open

- Managed Indexing for deep semantic codebase understanding

- Parallel Agents (CLI) using git worktrees for multi-agent coordination

- App Builder with database migration support
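The Parallel Agents item relies on git worktrees, which let one repository have several checked-out working directories at once. The sketch below only builds the underlying `git worktree add` commands, one per task. How Kilo's CLI actually drives git is not described here, so the `agent/<task>` branch naming and sibling-directory layout are assumptions for illustration.

```python
import shlex
from pathlib import PurePosixPath


def worktree_commands(repo_dir: str, tasks: list[str]) -> list[list[str]]:
    """Build one `git worktree add` command per task, each on its own new branch."""
    base = PurePosixPath(repo_dir)
    commands = []
    for task in tasks:
        branch = f"agent/{task}"  # hypothetical naming scheme
        # Sibling directory next to the main checkout, e.g. /work/myrepo-fix-login
        path = base.parent / f"{base.name}-{task}"
        commands.append(
            ["git", "-C", str(base), "worktree", "add", "-b", branch, str(path)]
        )
    return commands


if __name__ == "__main__":
    # Print the commands instead of running them, so nothing touches a real repo.
    for cmd in worktree_commands("/work/myrepo", ["fix-login", "add-tests"]):
        print(shlex.join(cmd))
```

Each agent then works in its own directory and branch, so parallel edits cannot overwrite each other's files. Merging the branches back is a separate step.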

The Kilo Difference:

Kilo Code isn’t just an IDE extension—it’s positioning as an entire platform. One-click deploys via Kilo Deploy. Voice prompting in the IDE. Sessions that sync across devices (start on desktop, continue on mobile).

But the real differentiator? Transparent pricing. Kilo charges exactly what the model provider charges, with zero markup. You pay $0.27 per million tokens for DeepSeek v3, around $1.20 per million tokens for mid-range models, and that’s it.

The Catch:

Reddit users report Kilo’s autocomplete is “subpar,” and some complain about commands auto-accepting without approval. There’s also the fork controversy: Kilo benefits from Cline’s foundational work while investing heavily in ads rather than core innovation.

Roo Code: The Power User’s Choice

Roo Code took a different path: reliability over features.

Core Strengths:

- Custom Modes: Create specialized AI “personalities” (Code, Architect, Debug, Ask) with specific instructions and model assignments

- Codebase Indexing for semantic search across entire projects

- Concurrent File Reads for better context

- Intelligent Context Condensing to optimize token usage

- MCP Server Support for controlling Docker, databases, and custom integrations
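The Custom Modes idea from the list above boils down to a named bundle of standing instructions plus a model assignment. Here is a rough sketch of that data structure. It is not Roo Code's actual configuration schema: the `Mode` class, the instruction strings, and the model ids (`fast-model`, `reasoning-model`, `cheap-model`) are all placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mode:
    """A hypothetical mode: a name, its standing instructions, and a model id."""
    name: str
    instructions: str
    model: str


# Mode names come from the article; instructions and model ids are placeholders.
MODES = {
    mode.name: mode
    for mode in (
        Mode("Code", "Write and edit code directly.", "fast-model"),
        Mode("Architect", "Plan the design before any edits.", "reasoning-model"),
        Mode("Debug", "Reproduce the bug, then find the root cause.", "reasoning-model"),
        Mode("Ask", "Answer questions without changing files.", "cheap-model"),
    )
}


def build_request(mode_name: str, user_prompt: str) -> dict:
    """Combine a mode's instructions and model with the user's prompt."""
    mode = MODES[mode_name]
    return {
        "model": mode.model,
        "messages": [
            {"role": "system", "content": mode.instructions},
            {"role": "user", "content": user_prompt},
        ],
    }


if __name__ == "__main__":
    print(build_request("Architect", "Split the billing module into services."))
```

The point of the design is that switching modes changes both the behaviour and the cost profile of a request, without the user rewriting the prompt each time.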

The Roo Philosophy:

Roo Code is for developers who are already proficient with LLM code generation and want more power. It’s lighter, more configurable, and doesn’t hold your hand. You can even prompt Roo to create new modes on the fly.

The Reality Check:

That configurability comes with a steep learning curve. Reddit reports are mixed: some users love the control, others hit errors, incorrect tool calls, and stability issues. One user bluntly stated Roo “lacks robust technical infrastructure”.

And here’s a problem all three share: Anthropic has been suspending Claude accounts for users of Cline, Roo, and Kilo due to automated access violations. If you’re using Claude via these tools, you’re playing with fire.

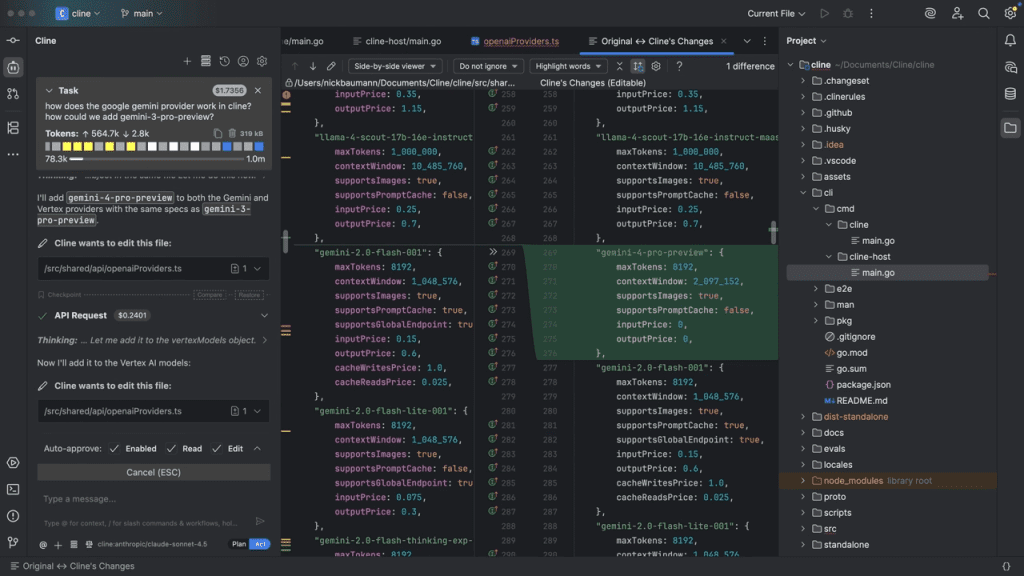

Cline: The Stable OG

Cline is the original, and it shows.

What Makes Cline Different:

- Beginner-friendly workflows with transparent, step-by-step control

- Human-in-the-loop approach: every file change and command requires approval

- Plan/Act modes for strategic problem-solving before implementation

- Web Search (added January 10, 2026): search docs and APIs without opening a browser

- Skills (January 10, 2026): follow different programming rules per project

- Background Edits (experimental, January 6, 2026): keep coding while Cline works
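The human-in-the-loop item above is essentially an approval gate in front of every side effect. The following is a minimal sketch of that pattern, not Cline's implementation: `Action`, `run_with_approval`, and the callback signatures are invented for illustration, and the approval callback stands in for whatever prompt the UI would show.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Action:
    kind: str    # "edit" or "command"
    target: str  # file path or shell command line


def run_with_approval(
    actions: Iterable[Action],
    approve: Callable[[Action], bool],
    execute: Callable[[Action], None],
) -> list[Action]:
    """Run actions in order, asking before each one; stop at the first refusal."""
    applied: list[Action] = []
    for action in actions:
        if not approve(action):
            break  # nothing after a rejected step is attempted
        execute(action)
        applied.append(action)
    return applied


if __name__ == "__main__":
    plan = [
        Action("edit", "src/app.py"),
        Action("command", "pytest -q"),
        Action("command", "git push"),
    ]
    # Stand-in for a UI prompt: approve everything except pushes.
    done = run_with_approval(plan, lambda a: a.target != "git push", lambda a: None)
    print([a.target for a in done])
```

An auto-approve setting amounts to passing an `approve` callback that always returns `True`, which is where the trade-off between speed and control shows up.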

Latest Updates (January 2026):

- Cline 3.55 (January 28): Added support for Arcee Trinity Large (free, US-built) and Kimi K2.5 (outperforms Opus 4.5 in some benchmarks)

- Cline 3.48 (January 10): Web search and Skills compatibility

The Cline Advantage:

Stability. Predictability. A massive open-source community. Cline is model-agnostic, works with Anthropic, OpenAI, Gemini, and xAI, and integrates with VS Code, JetBrains, Cursor, and Windsurf.

The Downside:

Some developers feel Cline innovates slower than its forks. It can be “overly restrictive” for advanced LLM users. And yes, the Claude suspension issue affects Cline users too.

Pricing: The Real Cost of “Free”

All three claim to be “free.” None of them actually are.

Kilo Code Pricing

Platform: Free (open-source extension)

AI Usage: Pay-as-you-go at exact provider rates (no markup)

Kilo Pass (AI Subscription):

- Starter: $19/month → $26.60 in credits

- Pro: $49/month → $68.60 in credits

- Expert: $199/month → $278.60 in credits

Teams: $15/user/month (or $180/user/year via AWS Marketplace)

Enterprise: Custom pricing ($1,800/user/year via AWS)

The Math:

If you’re using DeepSeek v3 at $0.27 per million tokens, the Starter plan’s $26.60 in credits buys ~98 million tokens per month. At Claude Sonnet 4.5’s ~$3 per million tokens, the same credits buy ~8.9 million tokens. Choose wisely.
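The arithmetic above can be reproduced for every Kilo Pass tier with a few lines of Python. This sketch uses only the prices, credit amounts, and per-million rates quoted in this article. It treats each model as having a single per-token rate, which is a simplification, since providers typically price input and output tokens differently. The names `KILO_PASS`, `RATES`, `bonus_percent`, and `million_tokens` are illustrative.

```python
from decimal import Decimal

# Plan -> (monthly price, credits received), in USD, from the Kilo Pass list.
KILO_PASS = {
    "Starter": (Decimal("19"), Decimal("26.60")),
    "Pro": (Decimal("49"), Decimal("68.60")),
    "Expert": (Decimal("199"), Decimal("278.60")),
}

# USD per million tokens, as quoted in the article (one blended rate per model).
RATES = {"DeepSeek v3": Decimal("0.27"), "Claude Sonnet 4.5": Decimal("3")}


def bonus_percent(price: Decimal, credits: Decimal) -> Decimal:
    """Extra credit a plan gives on top of its price, as a whole percentage."""
    return ((credits / price - 1) * 100).quantize(Decimal("1"))


def million_tokens(credits: Decimal, rate: Decimal) -> Decimal:
    """Millions of tokens that `credits` buys at `rate` USD per million tokens."""
    return (credits / rate).quantize(Decimal("0.1"))


if __name__ == "__main__":
    for plan, (price, credits) in KILO_PASS.items():
        budgets = ", ".join(
            f"{model}: {million_tokens(credits, rate)}M" for model, rate in RATES.items()
        )
        print(f"{plan}: +{bonus_percent(price, credits)}% credits -> {budgets}")
```

Every tier works out to the same 40% credit bonus, so the choice between tiers is about volume rather than a better rate.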

Roo Code Pricing

VS Code Extension: Free (open-source)

Roo Code Cloud:

- Free Plan: $0 (token tracking, task sharing, community support)

- Pro Plan: $20/month + $5/hour for cloud tasks

- Team Plan: $99/month + $5/hour for cloud tasks (unlimited members)

AI Model Costs: Separate, based on provider (or bring your own key)

The Catch:

Cloud Agents cost $5 per hour in credits. If you’re running a PR review agent for 2 hours, that’s $10 on top of your subscription. This adds up fast.
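To see how quickly the hourly charge overtakes the subscription itself, here is a small sketch using the plan fees and the $5/hour rate quoted above. The function name and the sample hour counts are illustrative, and model inference costs are deliberately left out because they are billed separately.

```python
def roo_cloud_monthly_cost(
    plan_fee: float, cloud_hours: float, hourly_rate: float = 5.0
) -> float:
    """Subscription fee plus cloud-task hours, in USD (model costs excluded)."""
    if cloud_hours < 0:
        raise ValueError("cloud_hours must be non-negative")
    return plan_fee + cloud_hours * hourly_rate


if __name__ == "__main__":
    for plan, fee in (("Pro", 20.0), ("Team", 99.0)):
        for hours in (2, 10, 40):
            total = roo_cloud_monthly_cost(fee, hours)
            print(f"{plan}, {hours} cloud hours: ${total:.2f}")
```

On the Pro plan, four cloud hours already cost as much as the subscription.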

Cline Pricing

Individual: Free (open-source extension)

AI Usage: Pay-as-you-go for inference (use your own API keys or Cline provider)

Open Source Teams: Free through Q1 2026, then $20/user/month (first 10 seats remain free)

Enterprise: Custom pricing (SSO, OIDC, SCIM, audit logs)
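For the Teams tier, the post-Q1 bill depends on how the free seats are counted. The sketch below assumes the first 10 seats stay free for every team and only seats beyond that are billed at $20 each. That reading of “first 10 seats remain free” is an assumption, and the function name is made up for this example.

```python
def cline_teams_monthly_cost(
    seats: int, price_per_seat: int = 20, free_seats: int = 10
) -> int:
    """Monthly USD cost after the free period, billing only seats beyond the free ones."""
    if seats < 0:
        raise ValueError("seats must be non-negative")
    billable = max(0, seats - free_seats)
    return billable * price_per_seat


if __name__ == "__main__":
    for seats in (8, 10, 25):
        print(f"{seats} seats: ${cline_teams_monthly_cost(seats)}/month")
```

Under that assumption a team of 10 or fewer pays nothing for seats, and a 25-person team pays for 15.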

The Reality:

Cline’s “free” model is the most transparent. You pay your AI provider directly (OpenAI, Anthropic, Google). No middleman. No markup. But heavy use gets expensive—one Reddit user reported $200/month in Claude API costs.

Cost Optimization Tip:

Use OpenRouter with budget models and enable prompt caching. This can cut costs by 50-70%.
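Whether caching gets you into that range depends on how much of each request is a repeated prefix and how steeply the provider discounts cached reads. Here is a rough sketch of the input-side arithmetic. The 70% cached share and the 0.1 read multiplier in the example are assumptions, not figures from any of these tools. The sketch also ignores output tokens and any surcharge for writing to the cache.

```python
def input_cost_savings(cached_fraction: float, cache_read_multiplier: float) -> float:
    """Fraction of input-token spend saved when part of the prompt is served from cache.

    cached_fraction: share of input tokens that hit the cache (0 to 1).
    cache_read_multiplier: price of a cached token relative to a normal one.
    """
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    effective_cost = (1.0 - cached_fraction) + cached_fraction * cache_read_multiplier
    return 1.0 - effective_cost


if __name__ == "__main__":
    # Assumed scenario: 70% of input tokens are a reused prefix, read at 10% of list price.
    print(f"{input_cost_savings(0.7, 0.1):.0%} saved on input tokens")
```

Agentic sessions resend the same system prompt and file context on every step, which is why the cached share can be high. Real savings will be lower once output tokens are counted.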

Benchmarks: Who Actually Performs?

Here’s where it gets tricky. None of these tools have their own SWE-bench scores—they’re platforms that route to AI models. So the “performance” depends entirely on which model you choose.

Kilo Code’s Model Performance (SWE-bench Verified):

- Claude Sonnet 4.5: 82%

- Gemini 3 Flash: 76.2%

- Claude 3.7 Sonnet: 70.3%

- Gemini 2.5: 63.8%

- Kimi K2.5: 60.4%

Roo Code’s Benchmark System:

Roo Code uses the “Roo SPARC Coding Evaluation & Benchmark System” to assess efficiency, security, and methodology. They test frontier models against hundreds of exercises across five programming languages.

Cline’s Approach:

Cline offers cline-bench, a benchmark designed to test LLMs on real engineering problems. It’s model-agnostic, so you can compare GPT-5.2, Claude Opus 4.5, Gemini 3 Pro, etc., within the same workflow.

The Verdict:

Kilo Code has the edge in model selection (500+ models), but Cline’s benchmark tooling is more transparent. Roo Code’s SPARC system is interesting but less widely adopted.

The Dark Horse:

Many developers are migrating to Claude Code (not to be confused with Cline). It’s built by Anthropic, free to install (usage is billed through a Claude plan or an API key), and can also be pointed at GLM 4.6. Some Reddit users call it the “2026 essential” for developer productivity.

The Bottom Line: Which One Should You Choose?

Here’s my take after two weeks of testing:

Choose Kilo Code if:

- You want the most model options (500+)

- You value transparent, pay-as-you-go pricing

- You need cloud agents and cross-device sessions

- You’re building a team and want centralized billing

Choose Roo Code if:

- You’re an experienced LLM user who wants maximum control

- You need custom modes for specialized workflows

- You’re working on large, complex codebases

- You don’t mind a steeper learning curve

Choose Cline if:

- You’re new to agentic coding and want stability

- You value human-in-the-loop control

- You want the largest open-source community

- You prefer step-by-step transparency over automation

The Real Question:

Do you want speed and automation (Kilo/Roo) or control and predictability (Cline)?

And honestly? Because two of the three are forks and all of them keep pulling from each other, the feature gap is closing fast. By Q2 2026, they might be functionally identical.

The real winner? Developers. We’ve never had this much choice—or this much chaos.

FAQ

Is Kilo Code really free?

The extension is free, but you pay for AI inference. Kilo charges exact provider rates with zero markup. Expect $20-$200/month depending on usage.

Can I use my own API keys with these tools?

Yes. All three support “bring your own key” (BYOK) for providers like Anthropic, OpenAI, Google, and Mistral.

Which tool has the best SWE-bench performance?

None of them have their own scores, because they route to AI models. Of the models listed above, Claude Sonnet 4.5 (82% on SWE-bench Verified) and Gemini 3 Flash (76.2%) score highest, whichever of the three tools you run them through.

Why are Claude accounts getting suspended?

Anthropic’s consumer terms restrict automated access to Claude outside its API. Users of Cline, Roo Code, and Kilo Code have reported suspensions when using Claude via these tools. Use at your own risk.

Are Kilo Code and Roo Code really just Cline forks?

Yes. Both forked from Cline and regularly pull updates. Kilo also pulls from Roo. It’s a fork-of-a-fork situation that’s… complicated.

Which tool is best for beginners?

Cline. It has the most beginner-friendly workflow, transparent step-by-step control, and the largest community for support.