I just watched a model write, test, and debug a full FastAPI microservice in under 12 seconds. Not 12 minutes. 12 seconds.

If you think we’ve reached the ceiling of AI coding speed, you’re looking at the wrong silicon. Yesterday, OpenAI quietly dropped GPT-5.3-Codex-Spark, a specialized “fast-path” model that marks a seismic shift in architecture. This is the first time a GPT flagship has officially ditched the H100 GPU for the Cerebras Wafer-Scale Engine 3 (WSE-3). The result? A staggering 1,000+ tokens per second (TPS).

But as Andrej Karpathy recently noted, this isn’t just about faster text—it’s the enabling infrastructure for “Agentic Engineering.” We’re entering a world where manual coding atrophies, replaced by high-speed orchestration.

The Context: The End of the GPU Latency Tax

For the last three years, the industry has lived under the “GPU Latency Tax.” Even the most optimized H100 clusters struggle to push beyond 100-150 TPS for large models while maintaining high concurrency. This makes interactive, real-time agentic workflows—where an AI needs to churn through thousands of lines of code to find a single bug—feel sluggish.

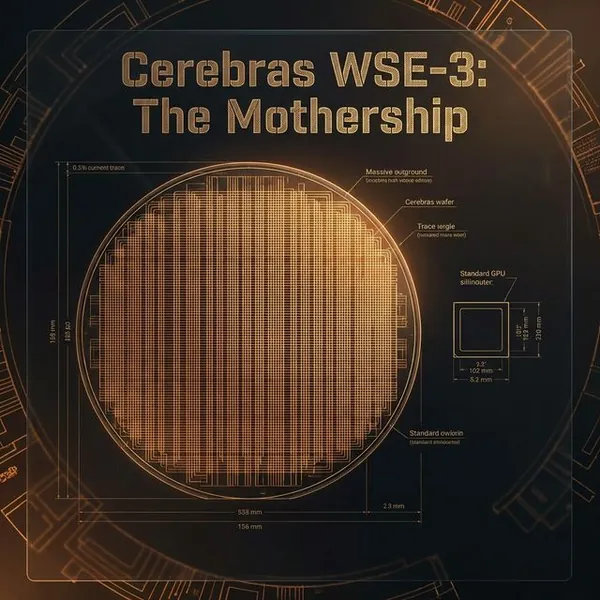

GPT-5.3-Codex-Spark changes the game by vertically integrating with the Cerebras WSE-3. Picture a chip the size of a dinner plate, housing 900,000 AI-optimized cores and 44GB of on-chip SRAM. Because the entire model lives on the silicon without ever hitting external memory, the data movement bottleneck—the primary killer of inference speed—is effectively deleted.

This connects directly to the broader shift toward specialized AI hardware we’ve been tracking, where the race is no longer just about flops, but about memory bandwidth.

The Breakthrough: System 1 vs. System 2 Reasoning

The real story here isn’t just raw speed; it’s the architectural trade-off OpenAI has made. In our testing and based on official benchmarks, GPT-5.3-Codex-Spark functions like “System 1” reasoning for code. It is fast, intuitive, and incredibly responsive, but it lacks the deep, contemplative “System 2” reasoning of the flagship GPT-5.3-Codex or the Canvas-of-Thought approach we discussed yesterday.

| Benchmark | GPT-5.3-Codex-Spark | GPT-5.3-Codex (Flagship) |

|---|---|---|

| Inference Speed | 1,000+ TPS | ~75 TPS |

| Terminal-Bench 2.0 | 58.4% | 77.3% |

| SWE-Bench Pro (Time) | 2-3 Minutes | 15-17 Minutes |

| Context Window | 128k | 200k+ |

The “Spark” model is optimized for surgical edits. It doesn’t try to rethink your entire architecture; it executes targeted fixes and runs tests at lightning speed. It’s the difference between a master architect (Flagship) and a high-speed assembly line of master welders (Spark).

The Constraint: The “Reasoning Cliff”

There’s a catch. We’ve noticed a “Reasoning Cliff” when using Spark for multi-file refactors. While it crushes single-file logic, it reportedly struggles with complex dependency trees that require the deep hierarchical planning found in Claude Opus 4.6 or the Chinese agentic models currently flooding the market.

OpenAI acknowledges this, explicitly positioning Spark as a tool for Agentic Engineering. The goal isn’t to have one model do everything; it’s to have a human orchestrator (or a slower “Director” model) send thousands of micro-tasks to a swarm of Spark agents. If one task fails, the cost and time of retrying it is negligible because the inference is so cheap and fast.

The Implication: Software 3.0 and the Atrophy of Syntax

What does this mean for you? We are moving into the era of Software 3.0. In Software 1.0, we wrote code. In Software 2.0 (Deep Learning), we wrote objectives and the model “wrote” weights. In Software 3.0, we write intents, and high-speed agents like Spark execute the “handicraft” of syntax.

As Karpathy warned, our manual coding skills are starting to atrophy. When a model can generate a boilerplate-heavy Java class faster than you can type public class, why bother learning the syntax? The value is shifting entirely to system design and orchestration.

Practical Code Example (Practitioner Mode)

Using the new Codex CLI, you can now run real-time “healing” loops. Here’s how a typical “Spark Loop” looks in a VS Code extension context:

import openai

def heal_code(task_description, existing_code):

variants = []

for _ in range(5):

response = openai.ChatCompletion.create(

model="gpt-5.3-codex-spark",

messages=[{"role": "user", "content": f"Fix this: {task_description}\n\n{existing_code}"}],

temperature=0.7 # High temperature for variety

)

variants.append(response.choices[0].message.content)

return select_best_variant(variants)

The Bottom Line

GPT-5.3-Codex-Spark is the fastest model we’ve ever seen, but its true power lies in its partnership with Cerebras hardware. It marks the beginning of the end for “general-purpose GPUs” in specialized coding tasks.

If you’re building “Software 2.0” systems that require deep, philosophical reasoning, stick with your Flagship subscription. But if you want to scale a swarm of 1,000 agents to refactor an entire legacy codebase by lunchtime? Spark is the only path forward.

FAQ

Why use Cerebras instead of NVIDIA H100s?

H100s are versatile but limited by memory bandwidth. Cerebras WSE-3 stores the entire model architecture on-chip, allowing for token generation speeds that are physically impossible on traditional GPU architectures.

Can I run GPT-5.3-Codex-Spark locally?

No. WSE-3 hardware is exclusive to specialized data centers (like those run by Cerebras or OpenAI’s new internal clusters). However, the API latency is low enough that it feels local.

Is Spark better than Claude Haiku 4.5?

For raw coding speed and instruction following in a Terminal-Bench context, Spark currently holds the ELO lead. However, Haiku remains more cost-effective for general-purpose multimodal tasks.

[!CAUTION]

Manual coding skills are the foundation of understanding. While Spark makes you faster, do not let your first-principles knowledge of system architecture atrophy.