While everyone was distracted by Anthropic dropping Claude Opus 4.6 (released Feb 5, 2026) and OpenAI pushing GPT-5.3 Codex to the top of the SWE-Bench leaderboard (56.8%), Google has quietly been running a black-ops campaign in the arena.

I just secured leaked generations from specific checkpoints of Gemini 3 Pro G8 that are currently being tested in the wild. We’ve seen these names pop up in LMSYS, but now we have the outputs.

But here’s the real story: one checkpoint was significantly better than everything else—and Google just deleted it.

Let’s break down exactly what Mountain View is hiding.

The 4 Secret Variants: Riftrunner, Snowplow, and More

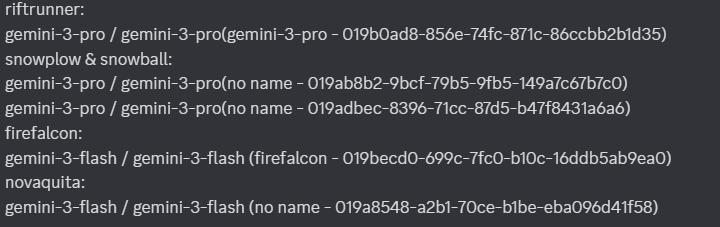

Google isn’t just testing a model; they’re A/B testing an entire philosophy. Based on recent LMSYS sightings, the variants are:

- Riftrunner (Gemini 3.0 Pro Candidate): This is widely confirmed to be the “standard” Gemini 3 Pro baseline. It’s solid, stable, and clearly the safe bet for a GA release.

- Snowplow & Snowball: This is where it gets interesting. These names have appeared alongside the standard models, but their behavior is distinct. Despite the playful codename, “Snowplow” appears to be a safety-tuned variant. Google is likely testing the same base weights with different RLHF (Reinforcement Learning from Human Feedback) penalties to see how much alignment costs in intelligence.

- Fire Falcon (Fierce Falcon) & Nova Quida: These are the Gemini 3 Flash variants. Often appearing as “Fierce Falcon” or “Ghost Falcon” in the arena, these models are optimized for speed. “Nova Quida” appears to be an even more experimental quantization.
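The Snowplow & Snowball bullet speculates that Google is varying RLHF penalties. To make that idea concrete, here is a toy Python sketch of one way a penalty can enter RLHF: the scalar reward the policy is trained against is a helpfulness score minus a weighted safety cost. The candidate responses, their scores, and the `safety_weight` knob are all hypothetical, and nothing here reflects Google's actual pipeline; it only shows how turning up a penalty shifts which response gets rewarded.

```python
# Toy illustration of a penalized reward: helpfulness minus a weighted safety cost.
# All candidates and scores are hypothetical; no real reward model is involved.
CANDIDATES = {
    "detailed answer": {"helpfulness": 0.9, "harm_cost": 0.5},
    "hedged answer": {"helpfulness": 0.6, "harm_cost": 0.1},
    "refusal": {"helpfulness": 0.05, "harm_cost": 0.0},
}


def penalized_reward(scores: dict, safety_weight: float) -> float:
    """Scalar reward a policy would be optimized against: helpfulness - weight * harm cost."""
    return scores["helpfulness"] - safety_weight * scores["harm_cost"]


def preferred_response(candidates: dict, safety_weight: float) -> str:
    """Return the candidate that the penalized reward ranks highest."""
    return max(candidates, key=lambda name: penalized_reward(candidates[name], safety_weight))


# Sweep the penalty weight: larger weights push the reward toward cautious answers.
for weight in (0.0, 1.0, 10.0):
    print(f"safety_weight={weight}: {preferred_response(CANDIDATES, weight)}")
```

With these made-up numbers, no penalty favors the detailed answer, a moderate weight favors the hedged one, and a large weight makes refusal the top-scoring response. In full RLHF this scalar would drive policy updates (for example with PPO) rather than a one-off choice, but the trade-off is the same kind of “alignment cost” the bullet speculates about.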

The testing strategy is clear: throw everything at the wall in the anon arena, see what the swarm prefers, and then package the winner as “Gemini 3.” But the most interesting data point isn’t what’s there—it’s what’s missing.

The “Lost” Checkpoint & The SVG Obsession

Here’s the leak that should worry you. During this testing phase, there was a specific checkpoint that outperformed the others across the board. It was smarter, faster, and more coherent.

And Google pulled it.

Why remove your best player?

* Safety Failure? Did it fail to comply with safety guidelines?

* Compute Cost? Was the inference cost simply too high for a free product?

* Internal Red Teaming? Did it fail a specific “dangerous capability” threshold?

What remains in the testing set is a series of checkpoints that seem heavily tuned toward SVG (Scalable Vector Graphics) generation.

The Mona Lisa Test

I know what you’re thinking. SVG? Who cares?

You should. SVG isn’t just image generation; it’s code generation. An SVG is an XML file in which every shape, curve, and color is spelled out as coordinates, path commands, and attribute values. To generate a coherent SVG of the Mona Lisa, the model doesn’t just need to “see” the image; it needs to understand the image’s geometry and translate it into code.
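To make the “SVG is code” point concrete, here is a minimal Python sketch (standard library only) that writes a face with a smile. The smile is a single `<path>` whose `d` attribute uses the SVG `C` (cubic Bézier) command; the function name `smile_svg` and all coordinates are illustrative choices, not taken from any model output.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def smile_svg() -> str:
    """Return a tiny SVG document: a face outline plus a smile drawn as one cubic Bézier path."""
    svg = ET.Element("svg", {"xmlns": SVG_NS, "width": "200", "height": "200",
                             "viewBox": "0 0 200 200"})
    # Face outline: every visual property is an explicit number in an XML attribute.
    ET.SubElement(svg, "circle", {"cx": "100", "cy": "100", "r": "80",
                                  "fill": "none", "stroke": "black"})
    # Smile: "M" moves the pen to (60,120); "C" draws a cubic Bézier to (140,120)
    # whose shape is pulled by the control points (80,150) and (120,150).
    ET.SubElement(svg, "path", {"d": "M 60 120 C 80 150, 120 150, 140 120",
                                "fill": "none", "stroke": "black"})
    return ET.tostring(svg, encoding="unicode")


print(smile_svg())
```

Saving the printed string as a `.svg` file and opening it in a browser should show the face. Every pixel of the drawing follows from those few numbers, which is why getting them right counts as a reasoning task rather than a drawing task.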

Look at the difference:

* GPT-5.3 Codex: Generates a blob that looks like a Picasso nightmare.

* Claude Opus 4.6: Creates something that looks like a nun, but the geometry is broken.

* Gemini 3 Pro G8: Generates a recognizable, mathematically consistent Mona Lisa.

This isn’t about art. It’s about spatial reasoning in code. If a model can define the curve of a smile using a cubic Bézier curve in XML, it can architect complex software systems. Google is optimizing for this specific type of abstract reasoning, and it’s paying off.
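The “curve of a smile” is a cubic Bézier curve, which is what the SVG `C` path command draws. It is defined by four points: a start point P0, two control points P1 and P2, and an end point P3, blended by the formula in the docstring below. This short Python sketch evaluates it; the control points are illustrative values, not taken from any generated Mona Lisa.

```python
def cubic_bezier(p0, p1, p2, p3, t):
    """Evaluate a cubic Bézier curve at parameter t in [0, 1].

    B(t) = (1-t)^3*P0 + 3*(1-t)^2*t*P1 + 3*(1-t)*t^2*P2 + t^3*P3
    """
    u = 1.0 - t
    # Bernstein basis weights; they always sum to 1.
    w0, w1, w2, w3 = u**3, 3 * u**2 * t, 3 * u * t**2, t**3
    # Apply the weights coordinate by coordinate (x, then y).
    return tuple(w0 * c0 + w1 * c1 + w2 * c2 + w3 * c3
                 for c0, c1, c2, c3 in zip(p0, p1, p2, p3))


# Illustrative points: start, two control points, end.
smile = [(60, 120), (80, 150), (120, 150), (140, 120)]
for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(t, cubic_bezier(*smile, t))
```

At t = 0.5 the curve sits at (100.0, 142.5). Because the SVG y-axis points down, a midpoint with a larger y than the endpoints (120) means the curve bows downward on screen, which is what makes it read as a smile rather than a frown.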

Even the classic “Pelican riding a bike” test—which trips up almost every model—was solved by this SVG-biased checkpoint. Opus 4.6 failed. GPT-5.3 failed. Gemini 3 Pro nailed it.

Visualizing Consciousness? (The Three.js Test)

The most mind-bending leak I saw was a complex Three.js generation.

The prompt was a nightmare: Create a glass dodecahedron (a 12-faced polyhedron) where each face acts as a filter. Put a rotating torus knot inside. Each face must distort the knot differently: tinted, wireframe, stained glass.

Requirements:

* Single HTML file.

* Three.js library.

* Zero geometry duplication (rendering set to zero).

* Opacity between 0.1 and 0.3.
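The geometric core of that prompt can be sketched without a browser. Below is a minimal Python sketch, assuming one reading of the spec: a regular dodecahedron whose 12 faces each get their own filter style and an opacity between 0.1 and 0.3. The face normals come from the fact that the dodecahedron is the dual of the icosahedron, so its face normals point at the icosahedron's 12 vertices. The style labels, the function names, and the linear opacity spread are hypothetical choices for illustration, not what Gemini generated.

```python
import itertools
import math

PHI = (1 + math.sqrt(5)) / 2  # golden ratio
STYLES = ("tinted", "wireframe", "stained_glass")  # hypothetical labels from the prompt


def dodecahedron_face_normals():
    """Unit normals of the 12 faces of a regular dodecahedron.

    They point along the icosahedron's vertices: the cyclic permutations
    of (0, ±1, ±PHI), normalized to length 1.
    """
    normals = []
    for s1, s2 in itertools.product((1, -1), repeat=2):
        for v in ((0, s1, s2 * PHI), (s1, s2 * PHI, 0), (s2 * PHI, 0, s1)):
            length = math.sqrt(sum(c * c for c in v))
            normals.append(tuple(c / length for c in v))
    return normals


def face_materials(min_opacity=0.1, max_opacity=0.3):
    """Give each face a style (cycled) and an opacity spread linearly across the range."""
    normals = dodecahedron_face_normals()
    n = len(normals)
    materials = []
    for i, normal in enumerate(normals):
        opacity = min_opacity + (max_opacity - min_opacity) * i / (n - 1)
        materials.append({"normal": normal,
                          "style": STYLES[i % len(STYLES)],
                          "opacity": round(opacity, 3)})
    return materials


for m in face_materials()[:3]:
    print(m["style"], m["opacity"], [round(c, 3) for c in m["normal"]])
```

In an actual Three.js page the shape itself would more likely come from the library's `DodecahedronGeometry` class, with values like these driving one material per face; the sketch only shows that the per-face bookkeeping the prompt demands is well defined.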

Gemini 3 Pro didn’t just write the code; it produced something that looked like a hallucination.

The output was a perfectly rotating glass structure. As the torus knot spun behind the different faces, the rendering logic switched cleanly in real time. It was consistent. It was mathematically precise. And it was weirdly beautiful.

It felt like watching a model dream in code.

The Bottom Line

Google is sitting on something big. The “Lost Checkpoint” suggests they have weights that are significantly ahead of the current market.

But here’s the fear: Google has a history of nerfing models between Arena and API.

We saw it with Gemini 1.5 Pro. We saw it with Ultra. The version that dominates the leaderboard often gets “safety-tuned” into a lobotomy by the time it hits your API key.

If “Riftrunner” is the model we actually get, we’re in for a solid upgrade. But if the “Lost Checkpoint” is gone forever? Then we might look back at this week as the brief moment where Google actually touched AGI, got scared, and shut it down.

Let’s pray they release the raw weights. But I wouldn’t bet on it.

FAQ

What is the release date for Gemini 3 Pro?

Google hasn’t officially announced a date, but the aggressive Arena testing suggests a GA (General Availability) release is imminent, likely within weeks.

Why does the SVG generation matter?

SVG generation is a proxy for “reasoning in code.” It requires understanding spatial relationships and translating them into strict mathematical syntax, which correlates with better coding and logic abilities.

Will the “Lost Checkpoint” be released?

Unlikely. If it was pulled from testing, it probably failed a safety, cost, or stability gate. However, some of its weights or training improvements may be folded into the final “Snowplow” or “Riftrunner” variants.