The era of “bigger is better” just hit a wall. A Klein bottle-shaped wall. On January 15th, Black Forest Labs (BFL) quietly rewrote the rules of local diffusion. While the world was busy arguing about 100B parameter LLMs, BFL dropped FLUX.2 Klein—a model family that doesn’t just aim to be smaller; it aims to be topologically superior.

If FLUX.1 was the sledgehammer that broke Midjourney’s monopoly, FLUX.2 Klein is the scalpel. It brings state-of-the-art fidelity into a footprint that doesn’t require a mortgage-backed security to run. We are talking about true photorealism on a consumer GPU.

Let’s tear down the specs.

The “Klein” Philosophy: A Unified Loop

Why “Klein”? It’s not just a cool German word for “small.” In topology, a Klein bottle is a non-orientable surface with no distinct inside or outside; the two sides form one continuous surface.

This is the perfect metaphor for what BFL has achieved. Historically, if you wanted to generate an image, you used one model. If you wanted to edit it (inpaint/outpaint), you often needed a separate “inpainting” checkpoint or a clumsy ControlNet adapter.

FLUX.2 Klein unifies this.

The architecture is designed for a continuous workflow. You generate. You mask. You edit. You regenerate. It uses the same weights, the same understanding of light and texture, and the same flow. It is a “unified generative-editing backbone.”

This matters because “inpainting models” usually lag behind the main checkpoints by months. With Klein, the SOTA generator is the SOTA editor.

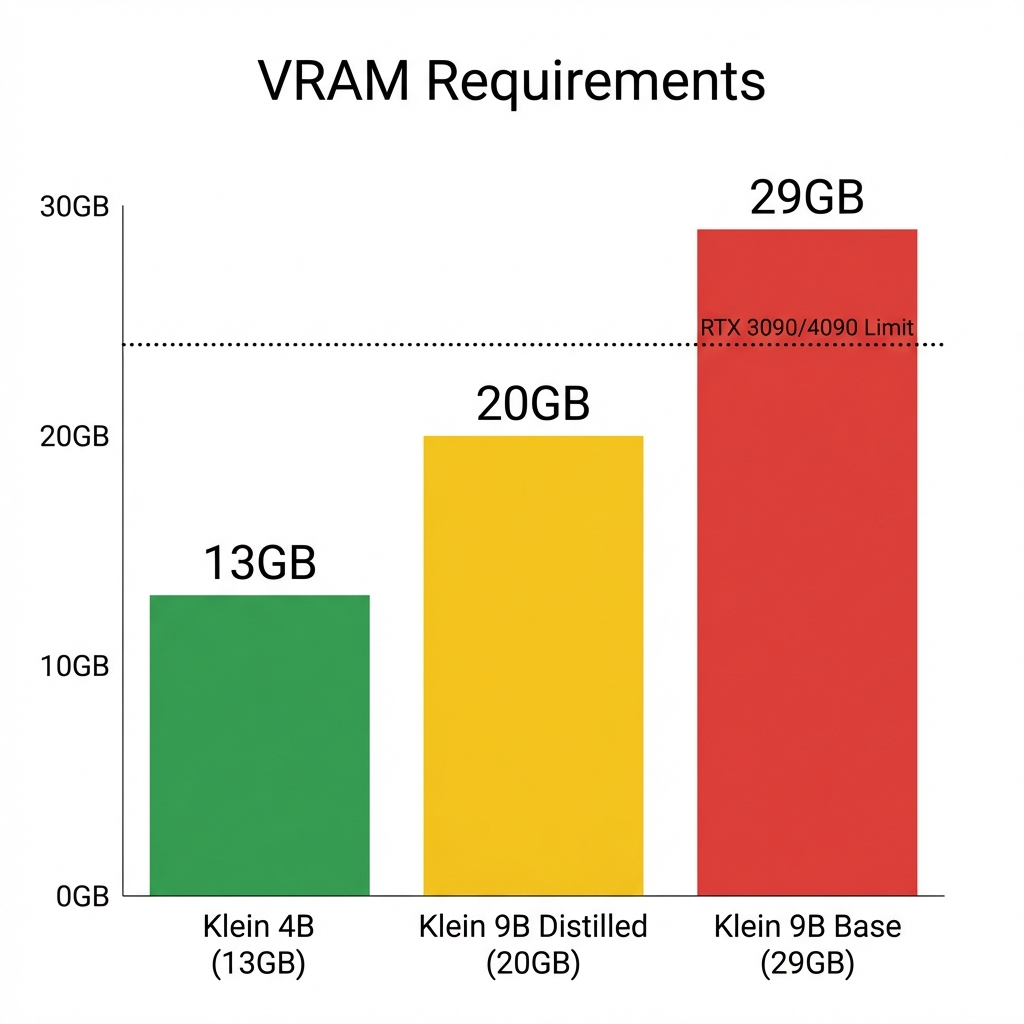

The VRAM Reality: 4B vs 9B

BFL released two distinct flavors of Klein. Understanding the difference is critical before you destroy your Hugging Face bandwidth.

The People’s Champion: Klein 4B

- Parameters: 4 Billion

- VRAM Requirement: ~13 GB

- License: Apache 2.0 (Open Source)

This is the headline story. A 4B parameter model that fits into 13GB of VRAM.

This means if you own an NVIDIA RTX 4070 Ti Super (16GB), an RTX 3090 (24GB), or even a strictly managed 4070 (12GB) with some quantization/offloading, you are in the game.

And the license? Apache 2.0. This is huge. It means startup founders, indie devs, and platform builders can deploy this without looking over their shoulder for BFL’s legal team. It is true open weights.

The Studio Beast: Klein 9B

- Parameters: 9 Billion

- VRAM Requirement: ~29 GB (Base) / ~20 GB (Distilled)

- License: FLUX Non-Commercial

The 9B is the “Pro” version. The fidelity is marginally higher, specifically in complex prompt adherence and typography. However, the VRAM cost is steep.

Base Model (29GB): You need an A6000 or an Apple Silicon Mac (M3/M4 Max) with unified memory to run this comfortably. An RTX 4090 (24GB) will weep unless you aggressively quantize.

Distilled (20GB): This fits on a 3090/4090, but barely.

Verdict: For nearly everyone reading this, Klein 4B is the one you want. The delta in quality does not justify the jump from ~13 GB to 20-29 GB of VRAM.
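To make the sizing decision concrete, here is a minimal sketch that checks which variants fit a given card. The VRAM figures are the approximate ones quoted above; the variant labels, the `headroom_gb` margin, and the function names are hypothetical and chosen only for illustration. The second helper estimates the weights alone. At 16-bit precision a 4B model needs about 8 GB for weights, so the quoted ~13 GB presumably covers more than the transformer itself, though this article does not break that remainder down.

```python
# VRAM figures (GB) as quoted in this article; treat them as approximate.
# The keys are illustrative labels, not official repository names.
VARIANTS = {
    "klein-4b": 13.0,
    "klein-9b-distilled": 20.0,
    "klein-9b-base": 29.0,
}


def variants_that_fit(vram_gb, headroom_gb=1.0):
    """Return labels whose quoted requirement fits in vram_gb minus a safety margin.

    headroom_gb is an assumed allowance for the desktop, driver, and other
    processes; tune it for your own machine.
    """
    usable = vram_gb - headroom_gb
    return [name for name, need in VARIANTS.items() if need <= usable]


def weights_only_gb(params_billions, bytes_per_param=2):
    """Memory for the weights alone (decimal GB); 2 bytes per parameter means 16-bit storage."""
    return params_billions * bytes_per_param


if __name__ == "__main__":
    for card, vram in [("RTX 4070", 12), ("RTX 4070 Ti Super", 16), ("RTX 4090", 24)]:
        print(card, variants_that_fit(vram))
    print("4B weights at 16-bit:", weights_only_gb(4), "GB")
```

A 12 GB card returns an empty list here, which matches the point above: it only gets in the game with quantization or offloading, neither of which this sketch models.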

Speed: Blink and It’s There

FLUX.2 is built on a Rectified Flow Transformer architecture. Without getting into the differential equations, rectified flow essentially finds the “straightest path” between noise and image.

Straight paths mean fewer steps.

Fewer steps mean speed.
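Why straight paths need fewer steps can be shown with a toy example. This is not BFL's sampler, just a one-dimensional sketch: a sampler integrates a velocity field from noise (t = 0) to image (t = 1), and fixed-step Euler integration is exact when the velocity never changes along the way. The names `euler_integrate`, `straight_velocity`, and `curved_velocity` are made up for this illustration, and the "curved" field is simply one whose velocity varies over time while covering the same total distance.

```python
def euler_integrate(velocity, x0, steps):
    """Integrate dx/dt = velocity(x, t) from t=0 to t=1 with fixed-step Euler."""
    x = x0
    dt = 1.0 / steps
    for k in range(steps):
        t = k * dt
        x = x + dt * velocity(x, t)
    return x


# One-dimensional stand-ins for a noise sample and its target image.
NOISE, IMAGE = 0.0, 1.0


def straight_velocity(x, t):
    # Constant velocity: the idealized rectified-flow case, a straight line
    # from NOISE to IMAGE.
    return IMAGE - NOISE


def curved_velocity(x, t):
    # Same total displacement over [0, 1], but the velocity changes with t,
    # so coarse Euler steps miss the target.
    return 2.0 * t * (IMAGE - NOISE)


if __name__ == "__main__":
    for steps in (1, 4, 50):
        straight = euler_integrate(straight_velocity, NOISE, steps)
        curved = euler_integrate(curved_velocity, NOISE, steps)
        print(f"steps={steps:>2}  straight={straight:.3f}  curved={curved:.3f}")
```

With the straight field, a single step lands exactly on the target. With the curved field, one step goes nowhere and four steps only reach 0.75; it takes many more to get close. A real model never learns perfectly straight paths, so treat this as the intuition rather than the mechanism.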

Benchmarks (RTX 4090):

- FLUX.1 [dev]: ~20-30 seconds (50 steps)

- FLUX.2 Klein 4B (Distilled): ~1 second

Yes, you read that right. The distilled variants can produce high-quality output in roughly one second. We are approaching “real-time” generation. This changes the workflow from “Prompt -> Wait -> Coffee -> Review” to “Prompt -> See -> Tweak -> See.”

It feels closer to painting than programming.

Local vs Cloud: Why Download?

“But text-to-image is cheap API-wise!”

Sure. But latency is the killer of flow.

When you are editing—fixing a hand, shifting a light source, changing a shirt color—a 5-second round trip to the cloud breaks your concentration. A 1-second local inference keeps you in the flow state.
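A back-of-the-envelope sketch of that difference, using the 5-second and 1-second figures above. The function name, the ten-minute session length, and the optional `think_s` parameter (time spent looking at each result) are assumptions for illustration, not measurements.

```python
def iterations_per_session(latency_s, session_s=600.0, think_s=0.0):
    """Count the whole prompt-to-result cycles that fit into one session.

    latency_s: seconds from submitting an edit to seeing the result.
    think_s:   assumed seconds spent reviewing each result before the next edit.
    """
    if latency_s <= 0:
        raise ValueError("latency_s must be positive")
    cycle = latency_s + think_s
    return int(session_s // cycle)


if __name__ == "__main__":
    # Ten minutes of editing, ignoring review time.
    print("cloud:", iterations_per_session(5.0))
    print("local:", iterations_per_session(1.0))
    # Same session, assuming 3 seconds of review per result.
    print("cloud:", iterations_per_session(5.0, think_s=3.0))
    print("local:", iterations_per_session(1.0, think_s=3.0))
```

Even with review time included, the local loop fits about twice as many edits into the same session, and the gap widens as review time shrinks.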

Then there is privacy. FLUX.2 Klein allows you to generate whatever you want (within its safety training/filters) on your own metal. You aren’t submitting your creative prompts to a corporate log file.

Conclusion: The “Tiny” Giant

FLUX.2 Klein 4B is the most important release of early 2026 for the local AI community.

It proves that we don’t need 100GB of VRAM to get world-class results. We needed better architecture. We needed unified editing. We needed rectified flow.

Black Forest Labs has handed us a 13GB key to the kingdom. Go download it.