One week. Two model drops. And the gap between “affordable” and “frontier” just got very, very blurry.

On February 11, Zhipu AI dropped GLM-5 — a 744-billion-parameter open-source monster trained entirely on Huawei Ascend chips, priced at $1 per million input tokens. Six days later, Anthropic shipped Claude Sonnet 4.6 — a mid-tier model that somehow beats Opus 4.6 on knowledge work benchmarks, with a 1-million-token context window and the same $3/1M price tag as its predecessor.

Both are gunning for the same developers. Both claim agentic supremacy. And both are genuinely excellent.

So which one do you actually pick? I’ve been running both through their paces, and the answer is more nuanced than any benchmark table will tell you.

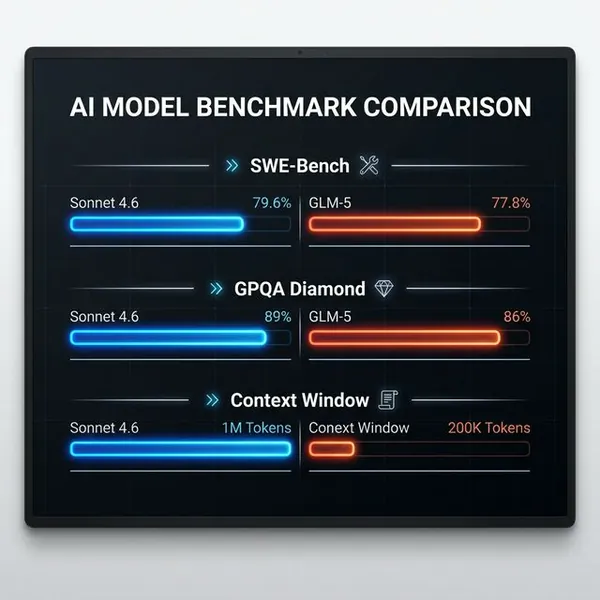

The Numbers: Where They Actually Stand

Let’s start with the raw data, because the benchmarks here are genuinely close enough to matter.

| Benchmark | Claude Sonnet 4.6 | GLM-5 | Winner |

|---|---|---|---|

| SWE-Bench Verified | 79.6% | 77.8% | Sonnet 4.6 (+1.8%) |

| Terminal-Bench 2.0 | ~59% (agentic terminal) | 56.2% | Sonnet 4.6 (+~3%) |

| GPQA Diamond | 89% | 86% | Sonnet 4.6 (+3%) |

| OS World (Computer Use) | 72.5% | N/A | Sonnet 4.6 |

| General Tool Use | 61.3% | Competitive (MCP-Atlas SOTA) | Sonnet 4.6 |

| Vending Bench | ~$5,500 profit | $4,432 profit | Sonnet 4.6 |

| Context Window | 1,000,000 tokens | 200,000 tokens | Sonnet 4.6 |

| Price (Input / Output) | $3 / $15 per 1M | $1 / $3.20 per 1M | GLM-5 (3x cheaper) |

| Open Source | No | MIT License | GLM-5 |

| Local Deployment | No | Yes (256GB+ RAM) | GLM-5 |

Here’s what that table doesn’t tell you: the 1.8% SWE-bench gap is almost meaningless in production. What matters is why each model wins where it does — and the architectural reasons behind those wins.

The Context Window Chasm

This is the biggest differentiator, and it’s not close.

Sonnet 4.6 ships with a 1-million-token context window in beta. GLM-5 tops out at 200,000 tokens — which is respectable, but it’s not in the same league for certain workloads.

Think about what a million tokens actually means. That’s roughly 750,000 words — the entire Harry Potter series, loaded into a single prompt. For enterprise developers analyzing massive codebases, legal teams processing patent portfolios, or financial analysts ingesting quarterly reports across multiple years, this isn’t a nice-to-have. It’s the whole game.

GLM-5 uses DeepSeek Sparse Attention (DSA) to make its 200K window efficient — the same technique that made DeepSeek R1 viable for long-context reasoning. It cuts inference costs by roughly 60% while maintaining over 95% recall accuracy. Smart engineering. But 200K is still 200K.

For most developers? 200K is fine. That’s 50,000 lines of code. Unless you’re debugging the Linux kernel (which, as I’ve covered before, Anthropic researchers did for $20,000 in API costs), you won’t hit that ceiling. But for knowledge work at enterprise scale, Sonnet 4.6’s million-token window is a genuine moat.

The Agentic Engineering Angle: GLM-5’s Real Play

Here’s what everyone’s getting wrong about GLM-5: they’re comparing it to Claude on coding benchmarks and calling it a day. That misses the point entirely.

Zhipu AI explicitly positioned GLM-5 for Agentic Engineering — not just code generation, but autonomous development of complex, multi-file software systems with long-horizon planning and repository exploration. The distinction matters.

On BrowseComp — a benchmark measuring autonomous web research and information synthesis — GLM-5 scores 62.0 (75.9 with context management). That’s best-in-class for open-weight models. On τ²-Bench, which tests multi-turn agentic task completion, it’s the open-source leader.

And then there’s the Vending Bench result. GLM-5 hit $4,432 in profit on the autonomous vending machine simulation — first place among open-source models. Sonnet 4.6 hit $5,500. That’s a meaningful gap, but GLM-5 is running at a fraction of the cost per inference loop.

The math changes when you’re running thousands of agent iterations. At $1/1M input tokens vs $3/1M, you can run three times as many agent loops for the same budget. For agentic workflows where you’re iterating rapidly — debugging, refactoring, exploring — that cost multiplier compounds fast.

The Tool Use Revolution: Sonnet 4.6’s Secret Weapon

I’ve been watching Anthropic’s tool use numbers for months. The jump in Sonnet 4.6 is the most important upgrade in this release, and it’s not getting enough attention.

General Tool Use: 43.8% (Sonnet 4.5) → 61.3% (Sonnet 4.6).

That’s a 17-point jump in a single generation. When a model can reliably call APIs, query databases, and interact with MCP servers, it stops being a chatbot and becomes an engine. This is the difference between a model that tries to use tools and one that actually uses them correctly on the first attempt.

The downstream effects are real. On the OS World benchmark — where the model gets its own computer environment and completes practical tasks by clicking and typing like a human — Sonnet 4.6 hit 72.5%, up from 61.4% in Sonnet 4.5. That’s not incremental. That’s a paradigm shift.

GLM-5 doesn’t have a direct OS World score to compare. Its computer use capabilities are competitive on MCP-Atlas (the open-source SOTA), but Anthropic’s computer use infrastructure — built into Claude Cowork and the broader Claude ecosystem — gives Sonnet 4.6 a practical advantage that benchmarks don’t fully capture.

The Huawei Angle: Why GLM-5 is More Than a Model

I’d be doing you a disservice if I didn’t mention this: GLM-5 was trained entirely on Huawei Ascend 910C chips. Not a single NVIDIA GPU.

This is Beijing’s answer to U.S. semiconductor export restrictions. And it’s working. The fact that Zhipu AI can train a 744-billion-parameter model — competitive with frontier closed-source models — without American silicon is a geopolitical signal as much as a technical one.

The open-source MIT license compounds this. While Anthropic locks Sonnet 4.6 behind API pricing and cloud platforms, China is flooding the market with production-ready alternatives that developers can download, fine-tune, and run locally (if they have 256GB+ RAM). The message from Beijing is clear: “You don’t need Silicon Valley’s permission to build intelligent agents.”

For developers in markets with data sovereignty requirements, or teams that need to fine-tune on proprietary data without sending it to Anthropic’s servers, GLM-5’s open-source nature is a genuine differentiator. This is the same playbook that made MiniMax M2.1 a serious contender — undercut on price, open-source the weights, let the community do your QA.

Adaptive Reasoning: The Hidden Lever

Both models have thinking capabilities, but they implement them differently.

Sonnet 4.6 ships with Adaptive Reasoning — the ability to scale “thinking tokens” up or down based on task complexity. Hard problems get more compute; simple tasks get less. This is a real lever for cost optimization in production systems. On Humanity’s Last Exam (without tools), Sonnet 4.6 nearly doubled its score from 17.7% to 33% — a jump that’s directly attributable to improved reasoning depth.

GLM-5 has thinking modes too, but the implementation is less granular. You toggle between standard and reasoning mode; you don’t get the fine-grained control that Anthropic’s Adaptive Reasoning provides.

For developers building production agents where cost predictability matters, Sonnet 4.6’s approach is more sophisticated. You can tune the compute budget per query — which, at scale, is the difference between a profitable product and a money pit.

What This Means For You

The choice between these two models comes down to three questions.

Are you cost-constrained? If you’re running thousands of agent loops and budget is the primary constraint, GLM-5 at $1/1M input is the obvious choice. The 1.8% SWE-bench gap doesn’t justify a 3x price premium for high-volume workloads.

Do you need a massive context window? If you’re doing knowledge work — analyzing large codebases, processing enterprise documents, running financial analysis across years of data — Sonnet 4.6’s 1M context window is non-negotiable. GLM-5’s 200K ceiling will become a bottleneck.

Do you need to run locally or fine-tune? GLM-5’s MIT license and open weights make it the only option here. Sonnet 4.6 doesn’t run locally, period. If data sovereignty or custom fine-tuning is a requirement, the decision is made for you.

Practical Recommendations

- Production coding agents at scale: GLM-5. The price-to-performance ratio is unbeatable for high-volume iteration loops.

- Enterprise knowledge work: Sonnet 4.6. The 1M context window and 61.3% general tool use make it the right tool for complex, document-heavy workflows.

- Computer use and automation: Sonnet 4.6. The 72.5% OS World score and Claude Cowork integration give it a practical edge that GLM-5 can’t match yet.

- Self-hosted or fine-tuned deployments: GLM-5. No contest.

- Budget experimentation: GLM-5. Lower risk, lower cost, still production-grade performance.

The Bottom Line

Sonnet 4.6 is the better model. GLM-5 is the better deal.

That’s not a cop-out — it’s the honest read. Anthropic built something genuinely impressive with Sonnet 4.6. The tool use jump alone justifies the upgrade from 4.5. The 1M context window is real and useful. The OS World performance is a paradigm shift for computer use.

But GLM-5 is 3x cheaper, open-source, and within 2 points on the benchmark that matters most (SWE-bench). For a model trained on Huawei chips by a Chinese lab that most Western developers hadn’t heard of six months ago, that’s extraordinary.

The real story here isn’t “which model wins.” It’s that the gap between frontier proprietary models and open-source alternatives has collapsed to the point where the choice is now genuinely about your use case — not about capability. That’s a fundamentally different world than we were living in twelve months ago.

If you’re building with either of these, I want to hear what you find. The agentic coding space is moving faster than any benchmark can capture.

FAQ

Is GLM-5 actually open-source?

Yes, under an MIT License — meaning unrestricted commercial use, fine-tuning, and redistribution. The weights are available on Hugging Face. The catch: 744B parameters requires 256GB+ RAM for full local deployment. Most developers will use the API at $1/1M input tokens, which is still 3x cheaper than Sonnet 4.6.

How does Sonnet 4.6’s 1M context window compare to GLM-5’s 200K?

Sonnet 4.6’s 1M token window (in beta) is roughly 5x larger. For most coding tasks, GLM-5’s 200K is sufficient — that’s about 50,000 lines of code. But for enterprise knowledge work (large codebases, legal documents, financial analysis), Sonnet 4.6’s window is a genuine differentiator. Anthropic’s MRCR v2 score confirms the context is actually usable, not just theoretical.

Which model is better for agentic coding agents?

It depends on your volume. For high-volume iteration loops where cost matters, GLM-5’s $1/1M pricing makes it the practical choice — the 1.8% SWE-bench gap doesn’t justify 3x the cost. For mission-critical, low-volume tasks where reliability and tool use accuracy matter more than price, Sonnet 4.6’s 61.3% general tool use score and Adaptive Reasoning give it the edge.

Can I run Sonnet 4.6 locally?

No. Sonnet 4.6 is a closed-source model available only through Anthropic’s API, claude.ai, AWS Bedrock, Google Vertex AI, and Microsoft Azure. If local deployment is a requirement, GLM-5 is your only option in this comparison.

What is Adaptive Reasoning in Sonnet 4.6?

Adaptive Reasoning lets Sonnet 4.6 scale its “thinking tokens” up or down based on task complexity. Simple queries get fast, cheap responses. Hard problems get deeper reasoning chains. This is a key lever for cost optimization in production — you’re not paying for heavy reasoning on every query, only when the task demands it.

Why was GLM-5 trained on Huawei chips?

Zhipu AI trained GLM-5 entirely on Huawei Ascend 910C chips using the MindSpore framework — no NVIDIA GPUs. This is a deliberate response to U.S. semiconductor export restrictions. The fact that a 744B-parameter frontier-competitive model can be trained on domestic Chinese hardware is a significant geopolitical signal, proving that export controls haven’t stopped China’s AI progress.