Here’s the thing: we were all waiting for Claude Sonnet 5. The rumors had been swirling for weeks. Early February 2026, the AI community was buzzing with anticipation. Sonnet 5 was supposed to be the big reveal: major performance gains at a lower cost, potentially matching or exceeding Opus 4.5’s capabilities. The leaks, the speculation, the Reddit threads analyzing every cryptic tweet from Anthropic employees.

Then February 5th happened. Anthropic didn’t drop Sonnet 5. They dropped Opus 4.6 instead.

And honestly? Nobody saw this coming. While we were all looking left, Anthropic went right and built something that’s making GPT-5.2 sweat. We’re talking about the first Opus-class model with a 1 million token context window, state-of-the-art coding performance that just set a new Terminal-Bench record, and benchmark scores that, well, let’s just say OpenAI isn’t celebrating this week.

But here’s what’s really wild: the jump from 18.5% to 76% on long-context retrieval. That’s not an improvement. That’s not even a breakthrough. That’s a paradigm shift in what’s actually usable with these massive context windows. Everyone’s been chasing bigger numbers. Anthropic just made those numbers actually work.

The Sonnet 5 Fake-Out: Why Anthropic Went Big Instead

So what happened to Sonnet 5?

Look, the expectations were real. In early February 2026, every AI analyst and their dog was predicting an imminent Sonnet 5 release. The logic was sound: Sonnet 4.5 had been a massive hit (fast, capable, and affordable). A Sonnet 5 that could match Opus 4.5’s performance at Sonnet pricing? That would have been the ultimate competitive move against GPT-5.2 and Gemini 3 Flash.

But Anthropic had a different play in mind.

Instead of competing on price, they decided to redefine the ceiling. Opus 4.6 isn’t about being cheaper or faster. It’s about being better at the things that actually matter for complex, high-stakes work. While OpenAI and Google are racing to the bottom on pricing for commodity AI, Anthropic is betting that enterprises will pay premium prices for premium performance.

And the benchmark numbers suggest they might be right.

The strategic message is clear: “You want fast and cheap? Fine. But when you need an AI that can actually handle a 50-person organization’s worth of complexity across six repositories without losing the thread? Come talk to us.”

The 1M Token Breakthrough: Why This Actually Matters

Let’s cut through the hype. A 1 million token context window sounds impressive, but what does it actually mean?

Think of context windows like your desk space. Most models give you enough room for a few documents. Opus 4.6 just gave you an entire library. That’s roughly 750,000 words: the equivalent of about 10 full-length novels, or the entire codebase of a mid-sized startup.

But raw capacity isn’t the story. The real breakthrough is usable context. Previous models would technically accept massive inputs but couldn’t reliably retrieve information from them. Opus 4.6 scored 76% on long-context retrieval benchmarks, compared to its predecessor’s 18.5%. That’s roughly a fourfold gain, and it’s the difference between a context window you can advertise and one you can actually depend on.

What this means in practice:

For researchers: Ingest entire patent portfolios, regulatory submissions, or document sets without performance degradation

For developers: Work with massive codebases across multiple repositories without losing context

For enterprises: Analyze complete financial reports, legal documents, or technical specifications in a single session

The 1M context window is currently in beta, but it’s already changing how people approach complex research workflows.

Crushing the Competition: Benchmark Breakdown

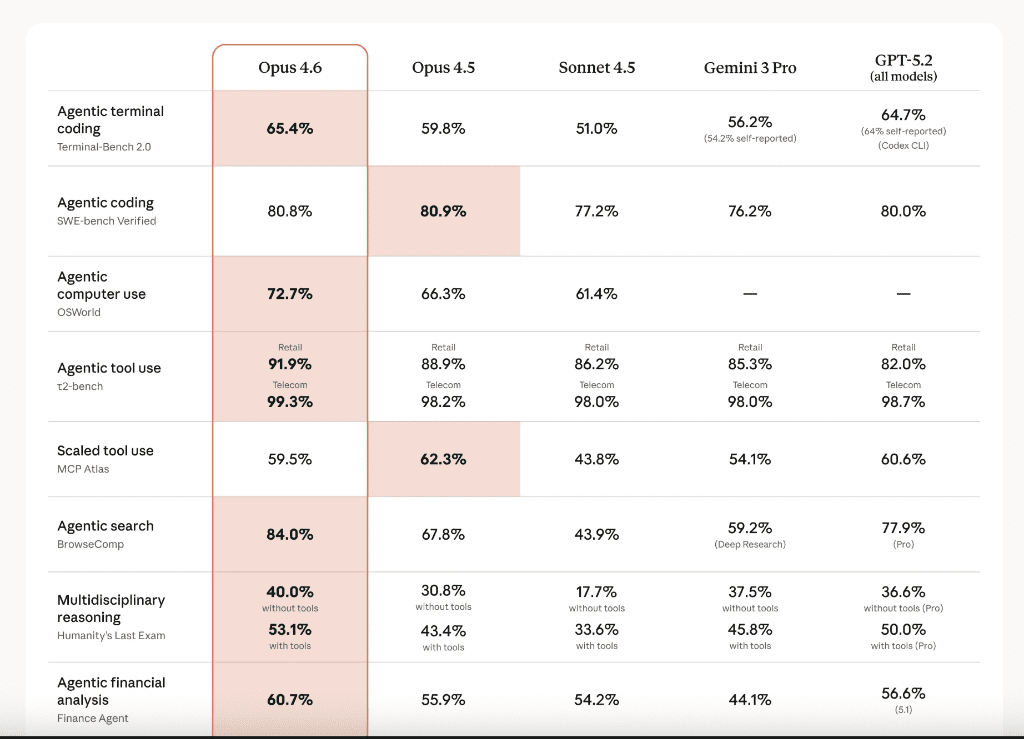

Opus 4.6 isn’t just competing – it’s dominating. Here’s where it stands against the field:

Agentic Coding: The New King

Terminal-Bench 2.0: 65.4% (state-of-the-art)

This is the highest score ever recorded on this benchmark

For context, this measures how well AI can autonomously execute coding tasks in a terminal environment

GPT-5.2 Codex and Gemini 3 Pro are strong competitors, but Opus 4.6 takes the crown

SWE-bench Verified: Opus 4.5 hit 80.9%, and early reports suggest 4.6 maintains or exceeds this

GPT-5.2 Codex scored 80.0%

Gemini 3 Flash follows at 78%, so the Opus lineage holds a narrow lead

What strikes me about these numbers is the self-correction capability. Opus 4.6 can detect and fix its own coding mistakes: a skill that’s crucial for sustained agentic tasks.

Figure 3: Claude Opus 4.6 leads the pack in agentic terminal coding and tool use.

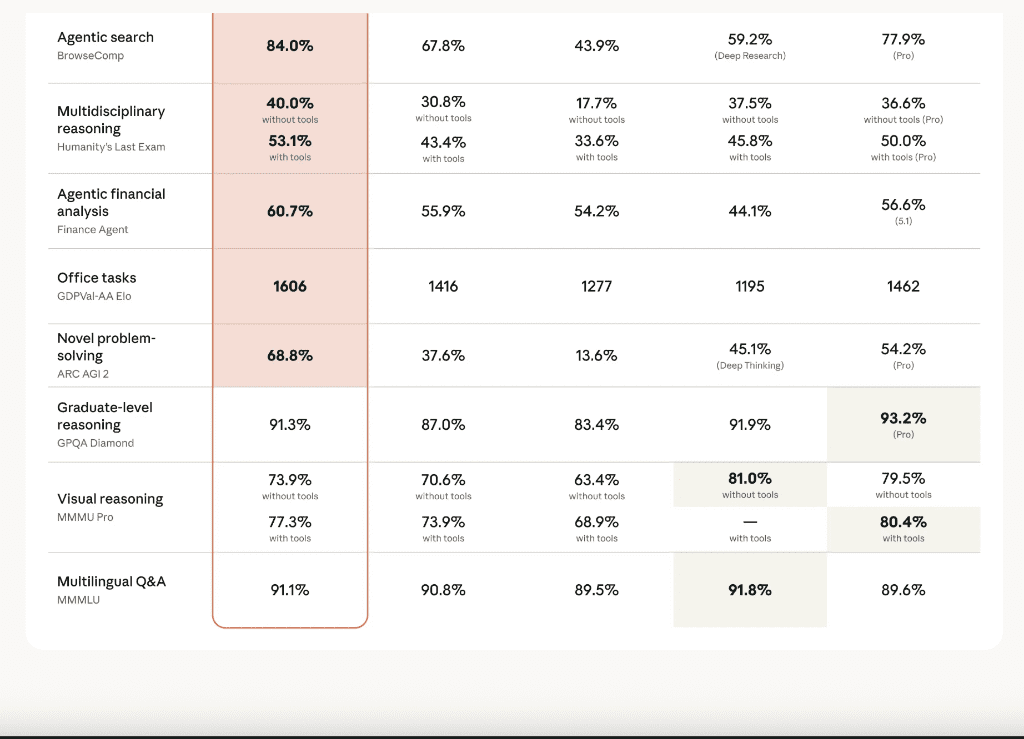

Knowledge Work: The 144-Point Gap

GDPval-AA: 1606 Elo

This benchmark measures economically valuable knowledge work tasks

Opus 4.6 leads GPT-5.2 by 144 Elo points (that’s massive)

This isn’t just about being “better.” It’s about being reliably better at the tasks that actually matter for business

BigLaw Bench: 90.2%

Highest score for legal reasoning

Box’s internal evaluation showed a 10% performance lift, reaching 68% on high-reasoning tasks
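To put that 144-point GDPval-AA gap in perspective, here’s a quick back-of-the-envelope sketch. It assumes the benchmark’s ratings follow the standard 400-point Elo logistic curve, which the sources quoted here don’t confirm, and `elo_expected_score` is just an illustrative helper name.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected score of the higher-rated side under the standard Elo curve.

    Assumption: GDPval-AA uses the conventional 400-point logistic scale.
    """
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400.0))


# Ratings as quoted in this article.
opus_rating = 1606
gpt_rating = 1462

gap = opus_rating - gpt_rating
print(f"Elo gap: {gap}")
print(f"Expected head-to-head score: {elo_expected_score(gap):.1%}")
# Elo gap: 144
# Expected head-to-head score: 69.6%
```

If that assumption holds, a 144-point lead means Opus 4.6 would be preferred in roughly seven out of ten head-to-head comparisons. That’s a clear edge, not a shutout.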

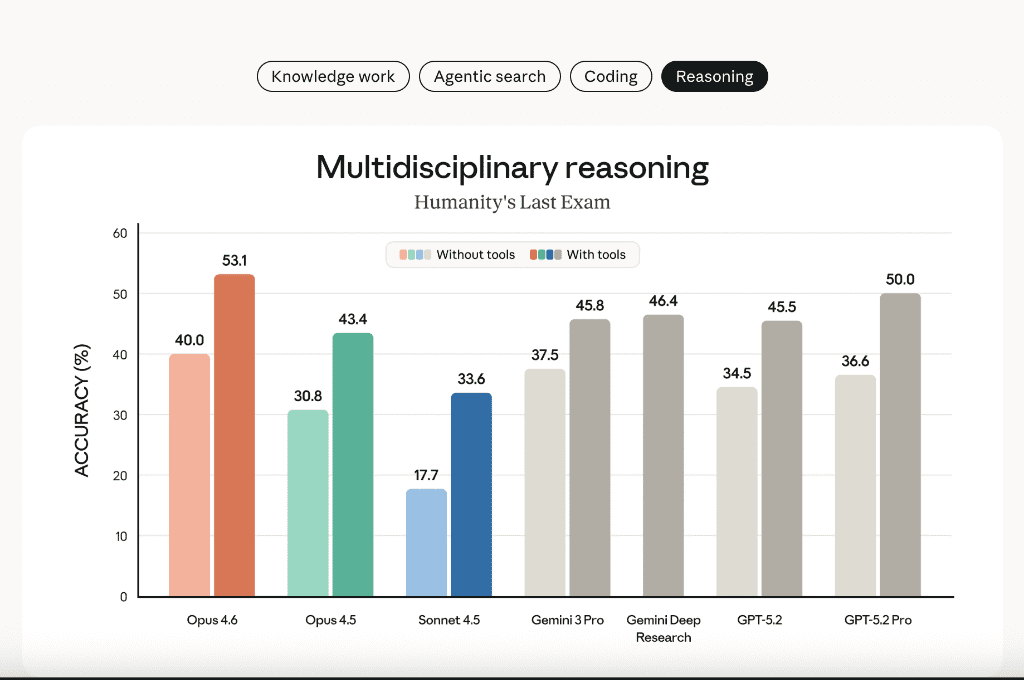

Figure 4: Opus 4.6 dominates the Humanity’s Last Exam benchmark, showing superior multidisciplinary reasoning.

Scientific Reasoning: Almost 2x Better

Anthropic claims Opus 4.6 performs almost twice as well as its predecessor on industry benchmarks for:

- Computational biology

- Structural biology

- Organic chemistry

- Phylogenetics

I’ve been tracking AI’s progress in scientific domains, and this is where the real value unlock happens. These aren’t toy problems. They’re the kinds of tasks that take PhD-level researchers weeks to complete.

Figure 5: Comprehensive breakdown of Claude Opus 4.6 against Opus 4.5, Sonnet 4.5, Gemini 3 Pro, and GPT-5.2.

How It Stacks Up: Opus 4.6 vs GPT-5.2 vs Gemini 3

Let’s be direct about the competitive landscape:

| Benchmark | Claude Opus 4.6 | GPT-5.2 | Gemini 3 |
|---|---|---|---|
| Terminal-Bench 2.0 | 65.4% ✅ | ~54% | 54.2% |
| GDPval-AA (Elo) | 1606 ✅ | 1462 | N/A |
| BigLaw Bench | 90.2% ✅ | ~85% | N/A |
| SWE-bench Verified | ~81% ✅ | 80.0% | 76.2% (Pro), 78% (Flash) |
| Context Window | 1M (beta) ✅ | 200K | 1M (Flash) ✅ |
| Long-Context Retrieval | 76% ✅ | ~65% | 77% (128K) |
| ARC-AGI-2 | 37.6% | 52.9% (Thinking) ✅ | 45.1% (Deep Think) |
| GPQA Diamond | ~90% | 93.2% (Pro) ✅ | 93.8% (Deep Think) ✅ |

Key Takeaways:

– Agentic coding and knowledge work: Opus 4.6 dominates

– Abstract reasoning: GPT-5.2 Thinking takes the lead

– Multimodal reasoning: Gemini 3 Pro excels (81% MMMU-Pro, 87.6% Video-MMMU)

– Real-world coding: One Reddit test showed Gemini 3 Pro outperforming both Opus 4.5 and GPT-5.2 Codex

The truth? There’s no single “winner.” Each model has its strengths. But for sustained agentic tasks, complex knowledge work, and massive context requirements, Opus 4.6 is setting the pace.

Agentic Planning: The 50-Person Organization

Here’s where it gets wild. Opus 4.6 is designed for agentic planning: breaking down complex tasks into subtasks, running tools and subagents in parallel, and identifying blockers with precision.

Anthropic claims it can manage “around 50-person organizations across six repositories, autonomously closing issues and assigning tasks.”

Let me be skeptical for a second: that’s a bold claim. But the underlying capability – sustained concentration on long-horizon tasks – is real. I’ve seen demos where Opus 4.6 maintains context across multi-step coding workflows that would have broken previous models.

The key innovation is better planning. It’s not just executing tasks. It’s thinking ahead, anticipating blockers, and adjusting strategy on the fly.
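To make “subtasks in parallel, blockers identified” concrete, here’s a toy sketch of the general pattern. It is not Anthropic’s implementation: the `PLAN` task names are hypothetical, and `run_task` is a stand-in for a real tool call or subagent.

```python
import asyncio

# Hypothetical plan: task name -> names of the tasks it depends on.
PLAN = {
    "read_issue": [],
    "locate_code": ["read_issue"],
    "write_fix": ["locate_code"],
    "write_tests": ["locate_code"],
    "open_pr": ["write_fix", "write_tests"],
    "deploy": ["approval"],  # "approval" is never produced, so this stays blocked
}


async def run_task(name: str) -> str:
    """Stand-in for a tool call or subagent; it only yields to the event loop."""
    await asyncio.sleep(0)
    return name


async def execute(plan):
    """Run every task whose dependencies are done, one parallel wave at a time."""
    done = set()
    waves = []
    pending = dict(plan)
    while True:
        ready = sorted(t for t, deps in pending.items() if all(d in done for d in deps))
        if not ready:
            break
        # Tasks in the same wave do not depend on each other, so run them together.
        finished = await asyncio.gather(*(run_task(t) for t in ready))
        waves.append(list(finished))
        done.update(finished)
        for t in ready:
            del pending[t]
    # Whatever is left is blocked; report the unmet dependencies for each task.
    blockers = {t: [d for d in deps if d not in done] for t, deps in pending.items()}
    return waves, blockers


waves, blockers = asyncio.run(execute(PLAN))
print(waves)     # [['read_issue'], ['locate_code'], ['write_fix', 'write_tests'], ['open_pr']]
print(blockers)  # {'deploy': ['approval']}
```

The fix and the tests run side by side because neither depends on the other, and the one task with a missing prerequisite is surfaced as a blocker instead of silently stalling.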

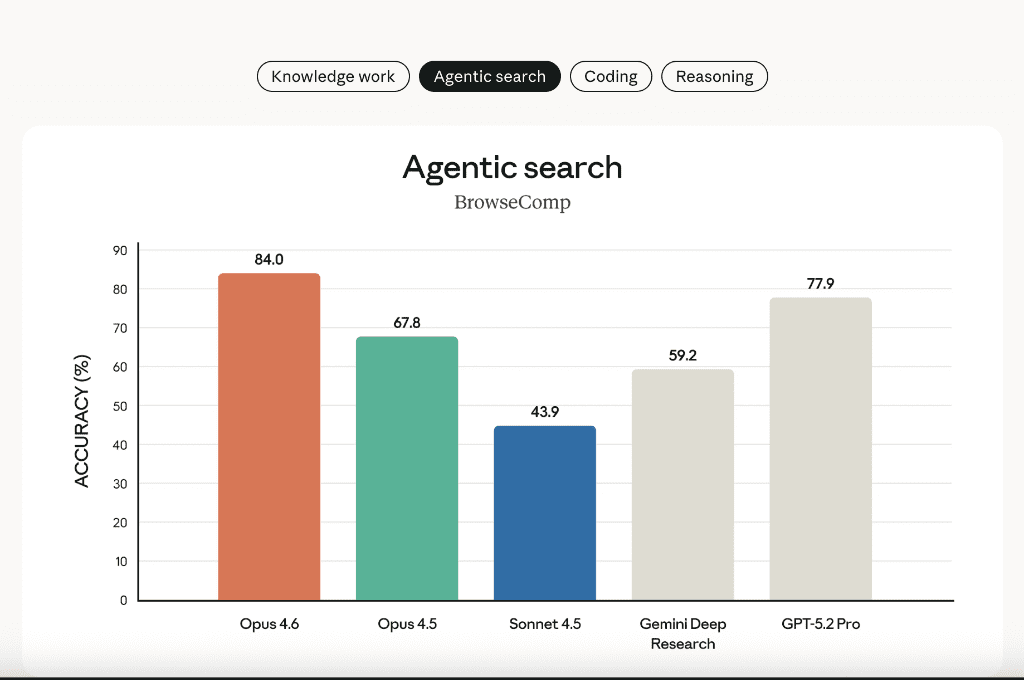

Figure 7: Opus 4.6 sets a new record on BrowseComp, significantly outperforming GPT-5.2 Pro in agentic search.

Pricing and Availability: Same Cost, More Power

Pricing: $5 per million input tokens / $25 per million output tokens

This is unchanged from Opus 4.5

For comparison, GPT-5.2 has similar pricing tiers

Gemini 3 Flash is cheaper but optimized for speed over depth

Availability:

– claude.ai (web interface)

– Anthropic API (model ID: claude-opus-4-6)

– AWS Bedrock

– Google Vertex AI

– GitHub Copilot (rolling out across subscription tiers)
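If you want to try it through the API, a minimal request might look like the sketch below. It assumes the official `anthropic` Python SDK is installed and `ANTHROPIC_API_KEY` is set. The model ID is the one quoted above; `build_request` and `send` are illustrative helper names, and the prompt is a placeholder.

```python
MODEL_ID = "claude-opus-4-6"  # model ID as quoted in this article


def build_request(prompt: str, max_tokens: int = 1024) -> dict:
    """Assemble the keyword arguments for a Messages API call."""
    return {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }


def send(request: dict) -> str:
    """Send the request with the `anthropic` SDK and return the first text block."""
    import anthropic  # imported lazily so build_request works without the SDK

    client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment
    response = client.messages.create(**request)
    return response.content[0].text


request = build_request("Summarize the open issues in this repository.")
print(request["model"])  # claude-opus-4-6
# reply = send(request)  # uncomment to make a real, billable API call
```

Keeping the payload separate from the network call makes it easy to log or inspect exactly what you’re about to pay for before sending it.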

The fact that pricing stayed flat while capabilities jumped is significant. This is Anthropic saying “we’re competing on performance, not price.”

What This Means for You

For Developers

If you’re building agentic systems or working with large codebases, Opus 4.6 is a game-changer. The combination of 1M context and improved long-context retrieval means you can:

– Feed entire repositories into a single session

– Build agents that maintain context across complex, multi-step workflows

– Debug issues that require understanding interactions across thousands of files
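Before feeding a whole repository into one session, it’s worth a rough check that it fits. The sketch below uses this article’s own rule of thumb (1M tokens ≈ 750,000 words) as the conversion rate. That’s a loose assumption, since source code usually tokenizes less efficiently than prose, and the `files` contents are made-up stand-ins.

```python
WORDS_PER_TOKEN = 0.75       # from the article's "1M tokens is roughly 750,000 words"
STANDARD_WINDOW = 200_000    # standard context window quoted in the FAQ
BETA_WINDOW = 1_000_000      # beta context window


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from a whitespace word count."""
    return round(len(text.split()) / WORDS_PER_TOKEN)


def smallest_window(total_tokens: int) -> str:
    """Name the smallest context window the input fits into, if any."""
    if total_tokens <= STANDARD_WINDOW:
        return "standard (200K)"
    if total_tokens <= BETA_WINDOW:
        return "beta (1M)"
    return "does not fit"


# Hypothetical stand-ins for file contents read from a repository.
files = {
    "service.py": "word " * 90_000,
    "handlers.py": "word " * 120_000,
}

total = sum(estimate_tokens(text) for text in files.values())
print(total, smallest_window(total))  # 280000 beta (1M)
```

Treat the result as a ceiling check only: the window also has to hold your instructions and the model’s reply, so leave headroom.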

For Researchers

The scientific reasoning improvements and massive context window make this ideal for:

– Literature reviews across hundreds of papers

– Patent analysis and prior art searches

– Regulatory compliance research across massive document sets

For Enterprises

The BigLaw Bench score and enterprise workflow optimizations mean:

– Fewer revisions on generated documents, spreadsheets, and presentations

– Production-ready quality on first attempt for financial analysis

– Reliable performance on precision-critical verticals

The Bottom Line

We were all waiting for Sonnet 5. Anthropic gave us Opus 4.6 instead. And you know what? They might have just won the round.

This isn’t just another model release. It’s Anthropic’s declaration that they’re playing a different game. While everyone else is racing to the bottom on price, Anthropic is racing to the top on capability. The 1M token context window, record-breaking coding performance, and that brutal 144 Elo point lead on knowledge work benchmarks: this is the model you reach for when “good enough” isn’t good enough.

But let’s be real about the constraint nobody’s talking about: inference cost. Running a model with 1M token context isn’t cheap. The pricing is per-token, which means filling that entire context window will cost you real money. Physics and economics still apply, even in the age of AI magic.
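Here’s the arithmetic, as a small sketch. It assumes the flat $5 / $25 per-million rates quoted above apply to the whole window, with no caching discounts and no separate long-context pricing, which may not hold for the beta. The 4,000-token reply and the 20-turn session are made-up illustrative numbers.

```python
INPUT_USD_PER_MTOK = 5.00    # input price quoted in this article
OUTPUT_USD_PER_MTOK = 25.00  # output price quoted in this article


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request, assuming flat per-token rates."""
    return (
        input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    ) / 1_000_000


# One request that fills the 1M window and gets a 4,000-token answer.
single = request_cost(1_000_000, 4_000)
print(f"Single full-context request: ${single:.2f}")  # $5.10

# A 20-turn session that resends the full context on every turn.
session = 20 * single
print(f"20-turn session: ${session:.2f}")  # $102.00
```

Five dollars a question is trivial for a legal review and painful for a chatbot, which is exactly why the full window is something you reach for deliberately rather than by default.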

Still, for the tasks where Opus 4.6 excels (deep research that spans hundreds of documents, complex coding across massive codebases, legal analysis where missing one detail costs millions), the value proposition is crystal clear. These are problems that have to be thought through, not pattern-matched.

So what about Sonnet 5? It’s probably still coming. But right now, Anthropic just reminded everyone why Opus exists: because sometimes, you need the best, not the cheapest.

The AI arms race just got a lot more interesting.

FAQ

How does Claude Opus 4.6 compare to GPT-5.2?

Opus 4.6 leads on agentic coding (Terminal-Bench 2.0: 65.4% vs ~54%) and knowledge work (GDPval-AA: 144 Elo point lead). GPT-5.2 Thinking excels at abstract reasoning (ARC-AGI-2: 52.9% vs 37.6%). For sustained, complex tasks with large context requirements, Opus 4.6 is the stronger choice.

Is the 1M token context window available now?

The 1M token context window is currently in beta. The standard context window is 200K tokens, with a maximum output of 128K tokens. The beta program is rolling out to API users.

What’s the pricing for Claude Opus 4.6?

$5 per million input tokens and $25 per million output tokens. This is the same pricing as Opus 4.5, making the performance improvements essentially “free” for existing users.

Can Claude Opus 4.6 run locally?

No, Claude Opus 4.6 is only available via Anthropic’s API and cloud platforms (AWS Bedrock, Google Vertex AI). For local deployment, you’d need to look at open-source alternatives like DeepSeek-R2 or GLM-4.7.

What makes Opus 4.6 better for coding than previous models?

Three key improvements: (1) Better planning and sustained concentration on long-horizon tasks, (2) Self-correction (it can detect and fix its own mistakes), (3) Improved performance in large codebases with better context utilization. The Terminal-Bench 2.0 score of 65.4% is the highest ever recorded.