I Ran a Coding Test. GLM 4.7 Won.

Let me tell you about the benchmark result that made me rewrite my entire mental model of Chinese AI.

GLM 4.7: 73.8% on SWE-bench Verified. That was the best open-source score on release day (MiniMax M2.1 edged past it a day later with 74.0%). It beat DeepSeek V3.2-Speciale (73.1%) and Kimi K2 Thinking (71.3%), and it is breathing down the necks of Claude Sonnet 4.5 (77.2%) and GPT-5.2 (80.0%).

When I first saw these numbers on December 22, 2025 – the day GLM 4.7 dropped – I didn’t believe them. So I started testing.

Thirty hours later, here’s what I know: Chinese AI labs aren’t playing catch-up anymore. In coding specifically, GLM, MiniMax, DeepSeek, Kimi, and Qwen are trading blows with the best Western models – and doing it at a fraction of the cost.

This article is what I learned.

The Benchmark Truth: Who’s Actually Best at Coding

Let me give you the numbers that matter. These are December 2025 verified benchmarks, not marketing claims.

SWE-Bench Verified (Real GitHub Issue Resolution)

| Model | Score | Release | Type |
|---|---|---|---|
| GPT-5.2 | 80.0% | Dec 2025 | Proprietary |
| Claude Sonnet 4.5 | 77.2% | Sep 2025 | Proprietary |
| MiniMax M2.1 | 74.0% | Dec 23, 2025 | Open-Source |
| GLM 4.7 | 73.8% | Dec 22, 2025 | Open-Source |
| DeepSeek V3.2-Speciale | 73.1% | Dec 1, 2025 | Open-Source |
| Kimi K2 Thinking | 71.3% | Nov 6, 2025 | Open-Source |

The takeaway? MiniMax M2.1 and GLM 4.7 are basically tied for best-in-class open-source. They’re about 3 points behind Claude Sonnet 4.5 and about 6 points behind GPT-5.2 – a gap that’s closing fast.
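If you want to check those gaps yourself, here is a quick sketch that recomputes them from the table. The dictionary and the `gap` helper are my own illustration, not part of any benchmark tooling.

```python
# SWE-bench Verified scores copied from the table above (percent).
scores = {
    "GPT-5.2": 80.0,
    "Claude Sonnet 4.5": 77.2,
    "MiniMax M2.1": 74.0,
    "GLM 4.7": 73.8,
    "DeepSeek V3.2-Speciale": 73.1,
    "Kimi K2 Thinking": 71.3,
}


def gap(model: str, reference: str) -> float:
    """Percentage-point gap between a reference model and another model."""
    return round(scores[reference] - scores[model], 1)


for open_model in ("MiniMax M2.1", "GLM 4.7"):
    for ref in ("Claude Sonnet 4.5", "GPT-5.2"):
        print(f"{open_model} trails {ref} by {gap(open_model, ref)} points")
```

Note these are percentage points, not relative percentages, so a 3-point gap at this level is still a meaningful number of unresolved issues.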

LiveCodeBench V6 (Competitive Coding)

| Model | Score |
|---|---|
| GLM 4.7 | 84.9% |
| Kimi K2 Thinking | 83.1% |
| Claude Sonnet 4.5 | ~80% |

GLM 4.7 is the top open-source model for competitive coding. It’s beating Claude on this benchmark.

SWE-Bench Multilingual (Non-Python Languages)

| Model | Score |
|---|---|
| MiniMax M2.1 | 72.5% |
| Claude Sonnet 4.5 | 68% |
| GLM 4.7 | 66.7% |

MiniMax M2.1 leads here – it’s specifically optimized for Rust, Go, Java, C++, TypeScript, Kotlin, and Objective-C.

GLM 4.7: The New Coding King (With Caveats)

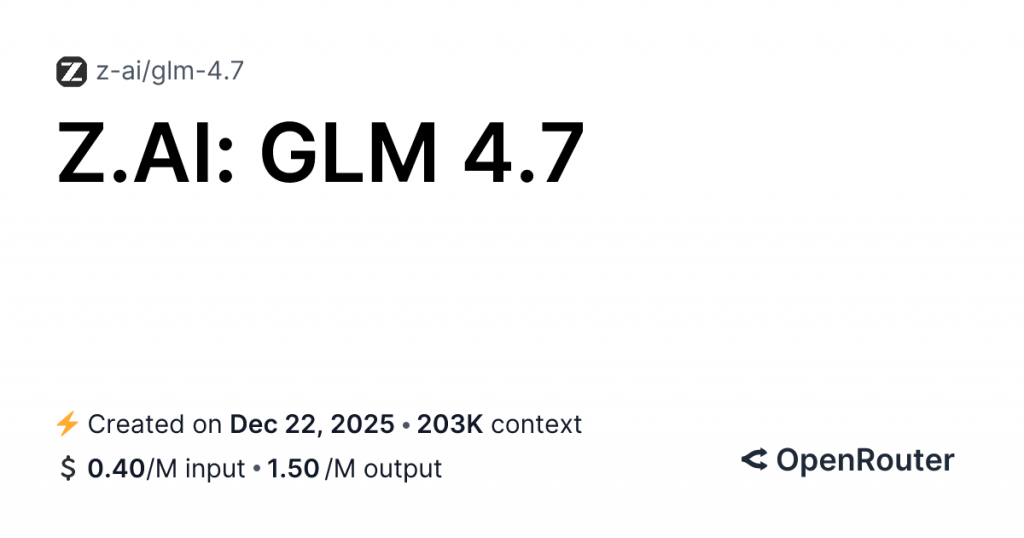

Zhipu AI’s GLM 4.7, released December 22, 2025, is the open-source model that’s making waves.

What the benchmarks say:

- 73.8% SWE-bench Verified (a 5.8-point improvement over GLM-4.6)

- 84.9% LiveCodeBench V6 (best open-source)

- 95.7% on AIME 2025 math competition

- 87.4% on τ²-Bench (interactive tool use) – highest among all open models

- 200K+ context window

What Reddit users say:

- “GLM 4.7 rivals Claude Sonnet 4.5 on LMArena Code Arena”

- “Stronger for clean code generation than DeepSeek”

- “The BEST CODING LLM for daily coding tasks” – multiple users

- “$3/month subscription is absurdly affordable”

But here’s the nuance. Real-world experiences are mixed:

- Some developers found it “going off the rails” on complex, multi-step projects

- “Slower and less effective than Claude Opus for complex coding”

- “Requires more user guidance” on architectural decisions

- Inconsistent on complex tasks compared to proprietary models

My take: GLM 4.7 is genuinely impressive for code generation benchmarks. For clean, straightforward coding tasks, it’s excellent. For complex architectural work over many iterations? The inconsistency is real. It’s a fantastic value at $0.40-0.60/M input tokens – but know what you’re getting.

The unique features:

- “Interleaved Thinking” mode for multi-turn reasoning

- “Preserved Thinking” for maintaining context across sessions

- “Vibe Coding” for cleaner, more modern UI outputs

- 10 million token memory context window

- Self-correction feature that reviews its own output

MiniMax M2.1: The Multilingual Powerhouse

MiniMax M2.1 dropped December 23, 2025, and went fully open-source on Christmas Day. It’s specifically built for professional developers who work across multiple languages.

The Numbers:

- 74.0% SWE-bench Verified (higher than GLM 4.7)

- 72.5% SWE-bench Multilingual (beats Claude Sonnet 4.5’s 68%)

- 49.4% Multi-SWE-Bench (beats Claude 3.5 Sonnet and Gemini 1.5 Pro)

- 88.6% VIBE benchmark (full-stack app development)

- 91%+ on web development tasks

Architecture:

- 230 billion parameters total

- Only 10 billion activated per task (MoE)

- Optimized for Rust, Go, Java, C++, TypeScript, Kotlin, Objective-C, JavaScript

What users report:

- “Outperforms Claude 4.5 and Gemini 3 Pro” in coding benchmarks

- “Faster than GLM 4.7” for generating working tests

- Perfect for “React frontends with Go backends”

- Excellent for multi-file edits and refactoring

Interesting observation: One user tested both models on the same task. M2.1 quickly produced working tests. GLM 4.7, while slower, identified an implementation flaw that M2.1 missed. Different models have different strengths for debugging vs. raw code generation.

Open-source matters. When Claude costs hundreds per month for heavy API usage, hosting M2.1 locally eliminates ongoing costs. The Apache 2.0 license means full commercial freedom.

DeepSeek V3.2: The Price-Performance Monster

DeepSeek V3.2, released December 1, 2025, isn’t the highest-scoring model on SWE-bench. But it might be the best value in AI.

The Benchmarks:

- 72-74% on SWE-bench Verified (varies by framework)

- 73.1% for V3.2-Speciale specifically

- 96% on AIME math competition

- 97.5% on HMMT 2025

- Gold medals at IMO, CMO, and IOI in 2025

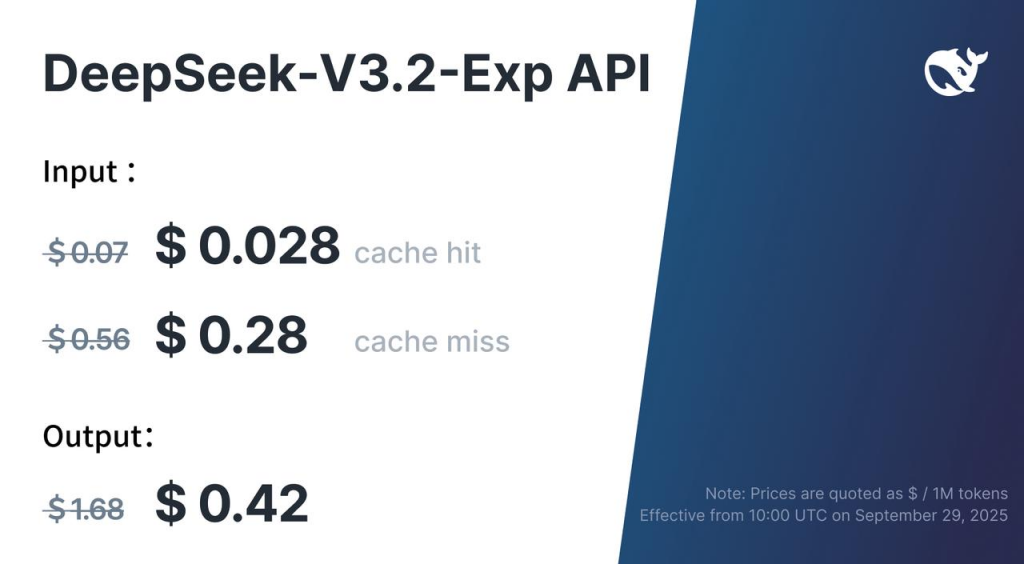

The Pricing That Changes Everything:

- $0.28/M input tokens (cache miss)

- $0.028/M input tokens (cache hit)

- $0.42/M output tokens

For comparison, Claude Opus charges $15/M input tokens. That’s more than 53x DeepSeek’s cache-miss input price.

What the LocalLLaMA community says:

- “Absolutely rock solid for coding work”

- “The best AI coding assistant I’ve ever used” after 30 hours of testing

- “Unlike GPT models that default to React, DeepSeek actually writes proper Vue.js code”

- “60 tokens per second – 3x faster than V2.5”

- “Diagnoses issues deeply instead of suggesting surface-level fixes”

The GLM vs. DeepSeek debate:

Reddit discussions show a mixed picture:

- GLM 4.6/4.7 is “stronger for clean code generation”

- DeepSeek V3.2 is “better for critical thinking and debugging”

- DeepSeek is “absurdly cheap” for heavy use

- GLM can face timeout issues in some environments; DeepSeek handles agentic workflows more reliably

My recommendation: If cost is your constraint, DeepSeek V3.2 is the obvious choice. If benchmark scores matter most and you can tolerate some inconsistency, GLM 4.7. For multi-language work, MiniMax M2.1.

Kimi K2 Thinking: The Agentic Specialist

Moonshot AI’s Kimi K2 Thinking (November 6, 2025) is built for a specific use case: autonomous, multi-step coding agents.

The Architecture:

- 1 trillion total parameters

- 32 billion active per inference (MoE)

- 256K token context window

- Native INT4 quantization – 2x inference speedup

The Benchmarks:

- 71.3% SWE-Bench Verified

- 83.1% LiveCodeBench V6

- 44.9% on “Humanity’s Last Exam” (beats GPT-5’s 41.7%)

- Led official SWE-bench leaderboard in early December

The Cost Advantage:

One documented comparison: Same task, same quality.

- Claude Sonnet 4.5: $5

- Kimi K2 Thinking: $0.53

That’s a 10x cost reduction for equivalent output.

What makes it special:

- Handles 300+ sequential tool calls coherently (most models fall apart after 50)

- “On par with GPT-5 in agentic capabilities” – r/LocalLLaMA

- Excellent for complex, multi-step planning

- Muon optimizer (featured in “Best Papers of 2025”)

The trade-off: Slower output (34.1 tokens/sec vs Claude’s 91.3 tokens/sec). But when you’re saving 90% on each task, waiting a few extra seconds is easy.
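To put the speed-versus-cost trade-off in concrete terms, here is a rough sketch using the throughput and per-task figures quoted above. The 2,000-token response length is a hypothetical number I picked for illustration, and the helper names are mine.

```python
# Throughput and per-task cost figures are the ones quoted above.
# The 2,000-token response length is a hypothetical example.
KIMI_TOKENS_PER_SEC = 34.1
CLAUDE_TOKENS_PER_SEC = 91.3
KIMI_TASK_COST_USD = 0.53
CLAUDE_TASK_COST_USD = 5.00


def generation_seconds(tokens: int, tokens_per_sec: float) -> float:
    """Time to stream `tokens` output tokens at a steady rate."""
    return tokens / tokens_per_sec


def savings_fraction(cheap: float, expensive: float) -> float:
    """Fraction of the expensive price that is saved."""
    return 1 - cheap / expensive


response_tokens = 2_000
extra_wait = (generation_seconds(response_tokens, KIMI_TOKENS_PER_SEC)
              - generation_seconds(response_tokens, CLAUDE_TOKENS_PER_SEC))
saved = savings_fraction(KIMI_TASK_COST_USD, CLAUDE_TASK_COST_USD)

print(f"Extra wait for {response_tokens} tokens: {extra_wait:.1f} s")
print(f"Cost saved per task: {saved:.1%}")
```

The extra wait scales linearly with output length, so the trade looks best on short responses and long-running background agents.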

Qwen: The Local Development Champion

Alibaba’s Qwen series isn’t flashy, but it’s become the default for many local AI developers.

What users actually use it for:

- Qwen 2.5 Coder 32B for serious local coding work

- Qwen3-Coder 30B as a free alternative to paid APIs

- Qwen3 235B for high-end local setups (128GB+ RAM)

The benchmark reality:

- Competitive with GPT-4o on EvalPlus, LiveCodeBench, BigCodeBench

- 73.7% on Aider (code repair) – comparable to GPT-4o

- 75.2% on MdEval (multi-language code repair) – first among open-source models

- Qwen 2.5 Max outperforms GPT-4 on Python in LiveCodeBench

What users say:

- “Far superior for writing code – I’ve developed complete applications”

- “Much better than GPT-4o for storytelling”

- “Casually outperforms GPT-4o and o1-preview on Livebench coding”

- “My most frequently used LLM – consistent results, minimal rework”

- “Runs on my M3 Max MacBook Pro without issues”

The open-source advantage: One entrepreneur switched from paid ChatGPT and Claude subscriptions to Qwen 3 Next. Zero costs. Better performance for their use cases.

The Pricing Reality Check

Here’s what these models actually cost as of December 2025:

| Model | Input (per 1M tokens) | Output (per 1M tokens) | Notes |
|---|---|---|---|
| DeepSeek V3.2 | $0.28 | $0.42 | Cache hits: $0.028 input |
| GLM 4.7 | $0.40-0.60 | $1.50-2.20 | $3/month subscription |
| MiniMax M2.1 | Open-source | Open-source | Free to self-host |
| Kimi K2 Thinking | – | – | ~$0.50 per task; 10x cheaper than Claude |
| Qwen | Free | Free | Apache 2.0 license |
| Claude Opus | $15 | $75 | – |
| GPT-5 | $1.25 | $10 | – |

The math: For heavy coding work at 1M tokens/day output:

- Claude Opus: $75/day = $2,250/month

- DeepSeek V3.2: $0.42/day = $12.60/month

That’s a 178x cost reduction.
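Here is that arithmetic as a small sketch, so you can plug in your own volumes. Prices come from the table above; the `monthly_cost` helper, the 30-day month, and the 3M-input-tokens-per-day scenario are my assumptions.

```python
# (input $/1M tokens, output $/1M tokens), from the pricing table above.
PRICES = {
    "DeepSeek V3.2": (0.28, 0.42),
    "GPT-5": (1.25, 10.00),
    "Claude Opus": (15.00, 75.00),
}


def monthly_cost(model: str, input_m_per_day: float,
                 output_m_per_day: float, days: int = 30) -> float:
    """Monthly API spend in USD for a daily volume given in millions of tokens."""
    in_price, out_price = PRICES[model]
    return days * (input_m_per_day * in_price + output_m_per_day * out_price)


# Output-only scenario from the text: 1M output tokens per day.
opus = monthly_cost("Claude Opus", 0, 1)
deepseek = monthly_cost("DeepSeek V3.2", 0, 1)
print(f"Claude Opus: ${opus:,.2f}  DeepSeek V3.2: ${deepseek:,.2f}  "
      f"ratio: {opus / deepseek:.1f}x")

# Hypothetical mix: 3M input tokens per day alongside the 1M output tokens.
opus_mixed = monthly_cost("Claude Opus", 3, 1)
deepseek_mixed = monthly_cost("DeepSeek V3.2", 3, 1)
print(f"With input included, ratio: {opus_mixed / deepseek_mixed:.1f}x")
```

The ratio depends on your input/output mix, since the two price gaps differ, so it is worth running with numbers from your own usage logs.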

Why They’re Winning: The Technical Edge

1. Mixture of Experts (MoE) Done Right

DeepSeek V3: 671B parameters, 37B active per forward pass.

MiniMax M2.1: 230B parameters, 10B active per task.

Kimi K2: 1T parameters, 32B active per inference.

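The result: only a small slice of each model (roughly 3-6% of its parameters) runs for any given token. That is where the claimed 5x reduction in training costs and the large inference savings come from.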
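To make the MoE numbers concrete, this sketch computes the active-parameter share for each model and shows a toy top-k router. The routing function is a generic illustration with made-up gate scores, not any of these labs' actual routing code.

```python
# (total parameters, active parameters) in billions, as listed above.
MODELS = {
    "DeepSeek V3": (671, 37),
    "MiniMax M2.1": (230, 10),
    "Kimi K2": (1000, 32),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of parameters that actually run for a single token."""
    return active_b / total_b


def top_k_experts(gate_scores: list[float], k: int) -> list[int]:
    """Toy router: indices of the k experts with the highest gate scores."""
    ranked = sorted(range(len(gate_scores)),
                    key=lambda i: gate_scores[i], reverse=True)
    return ranked[:k]


for name, (total, active) in MODELS.items():
    print(f"{name}: {active_fraction(total, active):.1%} of parameters active")

# Hypothetical gate scores for 4 experts; only the top 2 would run.
print(top_k_experts([0.10, 0.70, 0.05, 0.15], k=2))
```

Per-token compute tracks the active count rather than the total, which is why a 1T-parameter model can be served at prices closer to a ~30B dense model.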

2. Multi-Head Latent Attention (MLA)

DeepSeek’s MLA compresses the KV cache by 93.3%. That is part of why they can run on H800 GPUs (the reduced-bandwidth H100 variant built to comply with export rules) instead of needing H100s.
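For a feel of what a 93.3% KV cache reduction buys, here is a back-of-the-envelope sketch. The formula is the standard one for plain multi-head attention; the model shape (60 layers, 128 heads, head dimension 128, 32K context, 2-byte values) is a hypothetical example and not DeepSeek’s actual configuration.

```python
GIB = 1024 ** 3


def kv_cache_bytes(layers: int, heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """KV cache for one sequence under standard multi-head attention.

    The leading factor of 2 covers keys plus values in every layer.
    """
    return 2 * layers * heads * head_dim * seq_len * bytes_per_value


# Hypothetical model shape, NOT DeepSeek's real configuration.
baseline = kv_cache_bytes(layers=60, heads=128, head_dim=128, seq_len=32_768)

# Apply the 93.3% reduction quoted above to that baseline.
compressed = baseline * (1 - 0.933)

print(f"Baseline KV cache:   {baseline / GIB:.1f} GiB per sequence")
print(f"After 93.3% cut:     {compressed / GIB:.1f} GiB per sequence")
```

Under these assumed dimensions, a single long-context sequence drops from more memory than one GPU holds to a size where many sequences can share a card, which matters most when interconnect bandwidth is limited.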

3. Context Caching

DeepSeek’s caching drops input costs to $0.028/M tokens. For agentic workflows with repeated system prompts, that’s 90% savings on top of already-low prices.
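Here is a rough sketch of how that plays out across an agent run. The prices are the ones quoted above; the workload (an 8,000-token system prompt, 500 new tokens per step, 50 steps) is hypothetical, and the model is deliberately simplified: only the system prompt is treated as cacheable, and the first step is always a cache miss.

```python
CACHE_MISS_PER_M = 0.28   # $ per 1M input tokens, cache miss
CACHE_HIT_PER_M = 0.028   # $ per 1M input tokens, cache hit


def input_cost(miss_tokens: int, hit_tokens: int = 0) -> float:
    """Input cost in USD for one request."""
    return (miss_tokens * CACHE_MISS_PER_M
            + hit_tokens * CACHE_HIT_PER_M) / 1_000_000


def agent_run_cost(system_tokens: int, new_tokens_per_step: int,
                   steps: int, cached: bool) -> float:
    """Total input cost when every step resends the same system prompt."""
    total = 0.0
    for step in range(steps):
        if cached and step > 0:
            # System prompt is served from cache; only new tokens miss.
            total += input_cost(miss_tokens=new_tokens_per_step,
                                hit_tokens=system_tokens)
        else:
            total += input_cost(miss_tokens=system_tokens + new_tokens_per_step)
    return total


uncached = agent_run_cost(8_000, 500, 50, cached=False)
cached = agent_run_cost(8_000, 500, 50, cached=True)
print(f"Without caching: ${uncached:.4f}")
print(f"With caching:    ${cached:.4f}")
print(f"Savings:         {1 - cached / uncached:.1%}")
```

The 90% figure is the ceiling, reached only when every input token is a cache hit; real savings depend on how much of each request is a repeated prefix.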

4. Interleaved/Preserved Thinking (GLM 4.7)

GLM maintains reasoning across multi-turn conversations better than most models. The “self-correction” feature reduces hallucinations by reviewing its own output.

The Real Story: Constraints Bred Innovation

Here’s what nobody wants to say out loud: US chip export controls may have accidentally accelerated Chinese AI efficiency.

When you can’t get H100s, you have to:

- Build smarter MoE architectures

- Optimize KV cache aggressively

- Use FP8 quantization cleverly

- Innovate on training efficiency

The result? Models that compete with GPT-5 at 10-50x lower cost.

China’s open-weight models are winning not despite the restrictions, but partly because of them.

Which Model Should You Actually Use?

Based on my testing and the Reddit consensus:

For clean code generation: GLM 4.7 (best benchmarks, but watch for complexity issues)

For multi-language projects: MiniMax M2.1 (specifically optimized for Rust, Go, Java, C++)

For cost-efficiency: DeepSeek V3.2 ($0.28-0.42/M tokens, can’t beat this)

For agentic workflows: Kimi K2 Thinking (handles 300+ tool calls, 10x cheaper than Claude)

For local development: Qwen 2.5 Coder 32B (free, runs on consumer hardware)

For critical debugging: DeepSeek V3.2-Speciale (stronger critical thinking than GLM)

For complex architectural decisions: Still Claude Opus or GPT-5.2 (Chinese models can be inconsistent here)

The Bottom Line

Three months ago, I would have told you Chinese AI was “catching up.” That’s no longer accurate.

GLM 4.7 is the leading open-source model on multiple coding benchmarks. MiniMax M2.1 beats Claude Sonnet 4.5 on multilingual coding. DeepSeek V3.2 costs 53x less than Claude Opus for comparable performance.

These aren’t theoretical improvements. They’re shipping in production APIs right now.

The assumption that you need a $15/M token API to get frontier coding assistance? Dead. The assumption that open-source can’t compete with proprietary models? Dying fast.

Test these yourself. DeepSeek costs pennies. GLM has a $3/month plan. MiniMax is fully open-source. You have nothing to lose except your current API bills.

FAQ

Which Chinese AI model is best for coding in December 2025?

Based on benchmarks: GLM 4.7 leads LiveCodeBench (84.9%) and is near the top on SWE-bench (73.8%). MiniMax M2.1 leads for multilingual coding (72.5% SWE-bench Multilingual). For cost efficiency, DeepSeek V3.2 at $0.28-0.42/M tokens can’t be beat. For complex agentic tasks, Kimi K2 Thinking handles 300+ tool calls.

Is GLM 4.7 better than DeepSeek for coding?

It depends. GLM 4.7 scores higher on benchmarks and is “stronger for clean code generation.” DeepSeek V3.2 is “better for critical thinking and debugging” according to Reddit users, more reliable for agentic workflows, and much cheaper. Test both on your specific use case.

How much cheaper are Chinese AI models compared to Claude and GPT?

DeepSeek V3.2 costs $0.28/M input tokens vs Claude Opus at $15/M – that’s 53x cheaper. For typical coding tasks, Kimi K2 Thinking completed equivalent work for $0.53 vs Claude’s $5 (10x cheaper). MiniMax M2.1 and Qwen are fully open-source – free to self-host.

Can I run these models locally?

Yes. MiniMax M2.1 and Qwen are fully open-source (Apache 2.0). GLM 4.7 weights are available on Hugging Face and ModelScope. Qwen 2.5 Coder 32B runs on machines with 32GB+ RAM. You’ll need 128GB+ RAM for larger models such as Qwen3 235B.
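A quick way to sanity-check those RAM numbers is to estimate the weight footprint at different quantization levels. This sketch counts weights only; it ignores KV cache, activations, and runtime overhead, so treat the results as a floor. The helper name and the choice of bit widths are mine.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes), weights only."""
    return params_billion * bits_per_weight / 8


for name, params in (("Qwen 2.5 Coder 32B", 32), ("Qwen3 235B", 235)):
    for bits in (4, 8, 16):
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

At 4-bit, a 32B model needs about 16 GB for weights, which is why it fits on a 32GB machine, and a 235B model needs about 117.5 GB, which lines up with the 128GB+ guidance.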

What’s the catch?

Context windows are sometimes smaller. World knowledge on obscure topics lags behind GPT-5/Claude. Some users report inconsistency on complex architectural decisions. Documentation is often primarily in Chinese. For mission-critical production work, proprietary models still have an edge on reliability.