Chinese AI labs have seized the open-weight throne. In just 18 months, they’ve gone from playing catch-up to setting the pace, and the numbers tell an uncomfortable story for Silicon Valley: DeepSeek alone processed 14.37 trillion tokens on OpenRouter in 2025, while open-source Chinese models collectively jumped from 1.2% to 30% of the platform’s usage. Seven Chinese models now rank among its 20 most-used models.

The shift isn’t just about benchmarks; it’s about economics. DeepSeek V3.2 matches GPT-5 on mathematical reasoning at a fraction of the price: about 4.5x less per input token and about 24x less per output token at list prices. Kimi K2, Moonshot AI’s 1-trillion-parameter monster, can execute 200-300 sequential tool calls without human intervention in its Thinking variant. And Alibaba’s Qwen3 was trained on 36 trillion tokens across 119 languages, with the models released under Apache 2.0.


The open-weight AI race has fundamentally changed who’s winning and why.

DeepSeek V3.2 achieves gold-medal performance at a fraction of the cost

DeepSeek’s latest release isn’t just competitive—it’s embarrassing the competition. The 671B-parameter MoE model earned a gold medal at the 2025 International Mathematical Olympiad (35/42 points) and ranked 10th at the International Olympiad in Informatics. On AIME 2025, DeepSeek V3.2 scores 96.0%, edging past GPT-5’s 94.6%.

What makes this remarkable is the economics. DeepSeek reportedly trained the original V3 for roughly $5.6 million, a figure that covers GPU time for the final training run only, while OpenAI, Google, and Anthropic spend hundreds of millions on comparable models. Their secret weapon: FP8 mixed-precision training, which stores values in one byte instead of BF16’s two (or FP32’s four), plus a sparse MoE architecture that activates only 37B of its 671B total parameters per token.
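To make the two efficiency claims concrete, here is a back-of-the-envelope sketch. It assumes only the article’s figures (671B total parameters, 37B active per token) and the standard byte width of each number format, and it counts weight storage alone, ignoring optimizer state, activations, and the higher-precision master weights that mixed-precision training still keeps.

```python
# Back-of-the-envelope arithmetic for DeepSeek V3's sparsity and number formats.
# Counts weights only; optimizer state, activations and FP32 master copies are ignored.

TOTAL_PARAMS = 671e9   # total parameters (figure quoted in the article)
ACTIVE_PARAMS = 37e9   # parameters activated per token

BYTES_PER_VALUE = {"fp32": 4, "bf16": 2, "fp8": 1}


def active_fraction(active: float, total: float) -> float:
    """Share of the network that does work for a single token."""
    return active / total


def weight_gb(n_params: float, fmt: str) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes) for a given number format."""
    return n_params * BYTES_PER_VALUE[fmt] / 1e9


if __name__ == "__main__":
    print(f"active per token: {active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS):.1%}")
    for fmt in ("fp32", "bf16", "fp8"):
        print(f"{fmt}: {weight_gb(TOTAL_PARAMS, fmt):,.0f} GB")
```

FP8 weights take a quarter of the space of FP32 and half that of BF16, so any quoted memory saving depends on which baseline it is measured against. The 5.5% active share is why per-token compute is roughly that of a 37B dense model, even though all 671B parameters still have to be stored.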

| Model | AIME 2025 | MATH-500 | SWE-bench Verified | Input ($/M tokens) | Output ($/M tokens) |
|---|---|---|---|---|---|
| DeepSeek V3.2 | 96.0% | 98%+ | 64.2% | $0.28 | $0.42 |
| GPT-5 | 94.6% | ~95% | 74.9% | $1.25 | $10.00 |
| Claude Sonnet 4 | 70.5% | ~90% | 72.7% | $3.00 | $15.00 |
| Gemini 2.5 Pro | 86.7% | ~90% | 67.2% | $1.25 | $10.00 |

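The cost gap is staggering. At $0.28 per million input tokens ($0.028 on cached inputs, a 90% discount), DeepSeek undercuts Claude Sonnet 4 by roughly 10x and GPT-5 by roughly 4.5x on input alone. Output tokens tell an even starker story: $0.42 per million versus $10 for GPT-5 (about 24x) and $15 for Claude Sonnet 4 (about 36x).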
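Per-token ratios only tell part of the story; what you actually pay depends on your input/output mix. A minimal sketch for estimating spend, assuming the list prices in the table above and a hypothetical workload of 100M input and 20M output tokens. Only DeepSeek’s 90% cached-input discount is modeled; other vendors’ caching and batch discounts are ignored.

```python
# Estimate API spend for a workload from list prices (USD per million tokens).
# Simplification: only DeepSeek's 90% cached-input discount is modeled.
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_m: float
    output_per_m: float
    cached_input_discount: float = 0.0  # fraction knocked off cached input tokens


PRICES = {
    "DeepSeek V3.2": Pricing(0.28, 0.42, cached_input_discount=0.90),
    "GPT-5": Pricing(1.25, 10.00),
    "Claude Sonnet 4": Pricing(3.00, 15.00),
    "Gemini 2.5 Pro": Pricing(1.25, 10.00),
}


def workload_cost(p: Pricing, input_m: float, output_m: float, cached_share: float = 0.0) -> float:
    """Cost in USD for input_m/output_m million tokens, with cached_share of input served from cache."""
    fresh = input_m * (1 - cached_share) * p.input_per_m
    cached = input_m * cached_share * p.input_per_m * (1 - p.cached_input_discount)
    return fresh + cached + output_m * p.output_per_m


if __name__ == "__main__":
    # Hypothetical monthly workload: 100M input tokens, 20M output tokens, no cache hits.
    for name, pricing in PRICES.items():
        print(f"{name:16s} ${workload_cost(pricing, 100, 20):8.2f}")
```

For this mix the sketch gives about $36 for DeepSeek V3.2, $325 for GPT-5 and $600 for Claude Sonnet 4, a 9-16x gap that widens as output tokens make up more of the workload.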

Kimi K2 vs Claude Sonnet: the agentic AI showdown

When comparing Kimi K2 vs Claude Sonnet 4 for agentic workflows, the Chinese model makes a compelling case. Moonshot AI’s 1-trillion-parameter model, one of the largest open-weight releases to date, was explicitly designed for autonomous operation.

On SWE-bench Verified, Kimi K2 scores 65.8% at pass@1, climbing to 71.6% with best-of-N sampling. That trails Claude Sonnet 4’s 72.7%, but Kimi K2’s tool-calling capabilities are where things get interesting. The model supports the Model Context Protocol (MCP) natively and can execute hundreds of sequential tool calls without derailing, a critical capability for production agents.
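Moonshot hasn’t published the harness behind its long tool-calling runs, but the general pattern is a loop in which the model proposes one tool call at a time, sees the result, and decides what to do next. The sketch below uses a hypothetical `model` callable and a scripted stand-in so it runs offline; the `max_steps` cap and the choice to feed tool errors back to the model rather than crash are design assumptions, not Kimi K2 specifics.

```python
# Generic agent loop: the model proposes one tool call at a time until it returns a final answer.
# `model` is a hypothetical stand-in for an LLM client; real tool-call formats (MCP, OpenAI-style) differ.
from typing import Any, Callable, Dict, List, Tuple

Step = Dict[str, Any]


def run_agent(model: Callable[[List[Step]], Step],
              tools: Dict[str, Callable[..., Any]],
              task: str,
              max_steps: int = 300) -> Tuple[str, int]:
    """Return (final_answer, number_of_tool_calls). Raises if the step budget runs out."""
    history: List[Step] = [{"role": "user", "content": task}]
    for calls_made in range(max_steps):
        action = model(history)
        if "final" in action:
            return action["final"], calls_made
        name, args = action["tool"], action.get("args", {})
        if name in tools:
            result: Any = tools[name](**args)
        else:
            result = f"error: unknown tool {name!r}"  # report the error to the model instead of crashing
        history.append({"role": "tool", "name": name, "content": result})
    raise RuntimeError(f"no final answer after {max_steps} tool calls")


def scripted_model(history: List[Step]) -> Step:
    """Hypothetical model: calls `increment` three times, then reports the last result."""
    done = sum(1 for step in history if step["role"] == "tool")
    if done < 3:
        return {"tool": "increment", "args": {"n": done}}
    return {"final": f"counter={history[-1]['content']}"}


answer, n_calls = run_agent(scripted_model, {"increment": lambda n: n + 1}, "count to 3")
print(answer, n_calls)  # counter=3 3
```

The step cap matters as much as the loop itself: a model that can chain hundreds of calls can also loop forever, so production agents bound the budget and surface the failure.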

Claude Sonnet 4 remains the benchmark for coding tasks, with an 80.5% TAU-bench retail score and SWE-bench Verified performance (72.7%) near the top of the field. But at $3/$15 per million input/output tokens versus Kimi K2’s $0.50/$2.40, the cost-performance calculation shifts dramatically for teams running millions of agentic requests.

For developers choosing between them:

- Claude Sonnet 4: Best-in-class for complex debugging, security-conscious coding, and tasks requiring deep reasoning

- Kimi K2: Superior for high-volume agentic workflows where cost-per-action matters, especially with native MCP support

- Kimi K2 Thinking: For extended reasoning tasks, scoring 44.9% on Humanity’s Last Exam with tool use (state-of-the-art at release)

Qwen vs Kimi K2: two philosophies of open AI

The Qwen vs Kimi K2 comparison reveals different approaches to the open-weight challenge. Alibaba’s Qwen3-235B-A22B activates just 22B parameters from 235B total, fewer per token than Kimi K2’s 32B active from 1 trillion, although Kimi K2 is the sparser model as a share of its total (about 3% active versus about 9%). But parameter counts tell only part of the story.
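Active-parameter counts come from mixture-of-experts routing: a small router scores every expert for each token, and only the top-k experts actually run. The toy sketch below shows top-k gating with renormalized softmax weights; the expert count, k, and the scalar "experts" are illustrative and are not Qwen3’s or Kimi K2’s real configuration.

```python
# Toy top-k mixture-of-experts routing: each token runs through only k of n experts.
# Expert count, k and the scalar "experts" are illustrative, not any real model's settings.
import math
import random
from typing import Callable, List, Tuple


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights to sum to 1."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    gates = softmax([router_logits[i] for i in top])
    return list(zip(top, gates))


def moe_forward(x: float, router_logits: List[float],
                experts: List[Callable[[float], float]], k: int) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts are never called."""
    return sum(g * experts[i](x) for i, g in route(router_logits, k))


if __name__ == "__main__":
    n_experts, k = 16, 2
    experts = [lambda x, s=s: s * x for s in range(n_experts)]  # expert i scales its input by i
    rng = random.Random(0)
    logits = [rng.gauss(0, 1) for _ in range(n_experts)]
    print(route(logits, k), moe_forward(1.0, logits, experts, k))
```

Compute per token scales with k rather than with the total expert count, which is why "active" parameters, not total parameters, drive inference cost.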

Qwen3 was specifically optimized for MCP (Model Context Protocol), with tool orchestration built into the model rather than bolted on afterward. It supports 119 languages and dialects, far beyond most competitors’ multilingual coverage. On AIME 2025, Qwen3 hits 94.1% in thinking mode, approaching GPT-5 territory.
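In practice, MCP support means the model reliably chooses a tool and fills in its arguments; an MCP client then turns that choice into a JSON-RPC 2.0 message for the tool server. A minimal sketch of the two messages involved (`tools/list` for discovery, `tools/call` for invocation), assuming a hypothetical `get_weather` tool and leaving out the transport layer:

```python
# Shape of MCP tool messages: JSON-RPC 2.0 requests using the "tools/list" and "tools/call" methods.
# The tool name "get_weather" and its arguments are hypothetical; transport (stdio/HTTP) is omitted.
import itertools
import json
from typing import Optional

_ids = itertools.count(1)  # JSON-RPC request ids must be unique per session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def call_tool(name: str, arguments: dict) -> str:
    """Build the request a client sends once the model has decided to use a tool."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    print(jsonrpc_request("tools/list"))
    print(call_tool("get_weather", {"city": "Hangzhou"}))
```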

Kimi K2’s advantage lies in scale and agentic specialization. Its MuonClip optimizer, which pairs the Muon optimizer with a QK-clip step that rescales query and key weights whenever attention logits grow too large, kept training stable at trillion-parameter scale, avoiding the loss spikes that plagued earlier massive models. And its 256K context window (in the Thinking variant) enables document analysis that models with smaller context windows simply can’t match.
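Moonshot describes MuonClip as the Muon optimizer plus a QK-clip step: after an update, if the largest attention logit seen for a head exceeds a threshold tau, the query and key projection weights are scaled down so that logit lands back at tau. The sketch below is a simplified single-head version in plain Python; the tau value, the even split of the scaling between W_q and W_k (`alpha=0.5`), and the omission of the 1/sqrt(d) factor are simplifying assumptions.

```python
# Simplified QK-clip (the "Clip" in MuonClip) for a single attention head, in plain Python.
# Assumptions: raw q.k logits without the 1/sqrt(d) scale, illustrative tau, scaling split evenly (alpha=0.5).
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def max_logit(x: Matrix, w_q: Matrix, w_k: Matrix) -> float:
    """Largest attention logit q_i . k_j over all token pairs in the batch x."""
    q, k = matmul(x, w_q), matmul(x, w_k)
    return max(sum(a * b for a, b in zip(qi, kj)) for qi in q for kj in k)


def qk_clip(x: Matrix, w_q: Matrix, w_k: Matrix,
            tau: float = 100.0, alpha: float = 0.5) -> Tuple[Matrix, Matrix]:
    """If the largest logit exceeds tau, rescale W_q and W_k so that it lands exactly on tau."""
    s_max = max_logit(x, w_q, w_k)
    if s_max <= tau:
        return w_q, w_k  # logits already in range: leave the weights alone
    eta = tau / s_max                                     # every logit gets multiplied by eta
    scale_q, scale_k = eta ** alpha, eta ** (1 - alpha)   # split the shrink between W_q and W_k
    return ([[v * scale_q for v in row] for row in w_q],
            [[v * scale_k for v in row] for row in w_k])


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0]]
    w = [[10.0, 0.0], [0.0, 10.0]]
    new_q, new_k = qk_clip(x, w, w, tau=50.0)
    print(max_logit(x, w, w), round(max_logit(x, new_q, new_k), 6))  # 100.0 50.0
```

Capping logits at the weight level, rather than clipping gradients or the loss, keeps attention from saturating without touching the optimizer update itself.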

Both models run under permissive licenses—Qwen3 on Apache 2.0, Kimi K2 on modified MIT—making them enterprise-ready without the licensing complexity of Meta’s Llama.

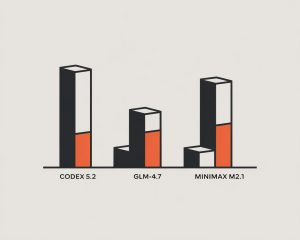

GLM-4.5 and MiniMax M2 round out China’s arsenal

Zhipu AI’s GLM-4.5 deserves attention for one metric alone: a 90.6% tool-calling success rate, beating Claude Sonnet 4 (89.5%) and Kimi K2 (86.2%). According to Zhipu, the 355B-parameter model runs on just 8 NVIDIA H20 chips, half the hardware DeepSeek V3 requires.

MiniMax M2 takes a different approach: extreme sparsity. With only 10B active parameters from 230B total, it achieved Artificial Analysis’s highest open-weight intelligence score at release (61 points) while fitting on 4x H100 GPUs at FP8 precision. At $0.30/$1.20 per million input/output tokens, it costs 8-10% of Claude Sonnet’s price while delivering 93.9% coding accuracy.
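The hardware claims in this section are mostly weight-size arithmetic. A rough fit check, assuming FP8 weights (one byte per parameter) for both models, 80 GB per H100 and 96 GB per H20, and ignoring KV cache, activations, and framework overhead:

```python
# Does a model's FP8 weight footprint fit in a node's GPU memory? Weights only.
# Assumed per-GPU memory: H100 80 GB, H20 96 GB. KV cache, activations and overhead are ignored.

GPU_MEMORY_GB = {"H100": 80, "H20": 96}


def fp8_weight_gb(total_params_b: float) -> float:
    """FP8 stores one byte per parameter, so billions of parameters map directly to GB."""
    return total_params_b * 1.0


def headroom_gb(total_params_b: float, gpu: str, n_gpus: int) -> float:
    """Memory left after loading FP8 weights (negative means the weights do not fit)."""
    return n_gpus * GPU_MEMORY_GB[gpu] - fp8_weight_gb(total_params_b)


if __name__ == "__main__":
    print("MiniMax M2 (230B) on 4x H100:", headroom_gb(230, "H100", 4), "GB spare")
    print("GLM-4.5 (355B) on 8x H20:", headroom_gb(355, "H20", 8), "GB spare")
```

The roughly 90 GB left over on the MiniMax M2 setup is what the KV cache has to fit into, which is why long-context serving can need more GPUs than the weights alone suggest.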

Why Chinese labs dominate open-weight while US labs don’t

The open-weight gap isn’t about capability—it’s about incentives. Chinese AI labs face three pressures that make open-source releases strategically rational:

Export restrictions created efficiency innovation. When the Biden administration blocked A100 and H100 exports to China in 2022, Chinese labs couldn’t simply buy more compute. DeepSeek’s response was architectural brilliance: MoE designs that activate 5.5% of total parameters per token, FP8 training that halves the bytes per value compared with BF16, and Multi-head Latent Attention that cut KV cache size by 93% relative to DeepSeek’s earlier 67B model. Necessity bred innovation.
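The KV-cache saving from Multi-head Latent Attention follows from what gets cached per token: standard attention stores full keys and values for every head, while MLA stores one compressed latent vector plus a small shared positional key. DeepSeek’s 93% figure compares DeepSeek-V2 with its earlier DeepSeek 67B model; the sketch below instead compares MLA with a hypothetical full multi-head cache at V3’s own size, using dimensions assumed from DeepSeek-V3’s public configuration, so its percentage differs.

```python
# KV-cache size per token: full multi-head attention vs. a compressed latent cache (MLA-style).
# Dimensions are assumed from DeepSeek-V3's public config; treat them as illustrative.

LAYERS = 61
HEADS = 128
K_HEAD_DIM = 192   # 128 non-positional + 64 RoPE dims per key head
V_HEAD_DIM = 128
LATENT_DIM = 512   # compressed KV latent cached per layer
ROPE_DIM = 64      # decoupled RoPE key, shared across heads
BYTES = 2          # BF16 cache entries


def mha_bytes_per_token() -> int:
    """Full per-head keys and values cached at every layer."""
    return LAYERS * HEADS * (K_HEAD_DIM + V_HEAD_DIM) * BYTES


def mla_bytes_per_token() -> int:
    """Only the shared latent vector plus the RoPE key are cached at every layer."""
    return LAYERS * (LATENT_DIM + ROPE_DIM) * BYTES


if __name__ == "__main__":
    ctx = 128 * 1024
    for name, fn in (("MHA", mha_bytes_per_token), ("MLA", mla_bytes_per_token)):
        print(f"{name}: {fn() * ctx / 1e9:,.1f} GB for {ctx:,} tokens")
    print(f"reduction: {1 - mla_bytes_per_token() / mha_bytes_per_token():.1%}")
```

By this estimate a 128K-token context needs about 9.2 GB of cache with MLA versus about 655 GB with full multi-head attention, which is the difference between fitting long contexts on one node and not.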

Trust barriers require transparency. Western enterprises worry about Chinese APIs sending data to servers under PRC jurisdiction. Open-weight releases solve this—download the model, run it locally, and the data never leaves your infrastructure. It’s a trust-building mechanism that closed models can’t replicate.

Market access demands visibility. Without the brand recognition of OpenAI or Google, Chinese labs need developers to actually try their models. Open releases under MIT and Apache 2.0 licenses create adoption without contracts, lawyers, or enterprise sales cycles.

Compare this to American frontier labs: OpenAI generates billions from API revenue it would cannibalize with competitive open releases. Anthropic’s safety commitments, including its Responsible Scaling Policy, favor controlled deployment. Both face genuine liability concerns about open weights enabling malware, disinformation, or weapons development.

OpenAI’s August 2025 release of gpt-oss-120b and gpt-oss-20b under Apache 2.0 signals the pressure is real. As OpenAI’s Casey Dvorak admitted: “The vast majority of our customers are already using a lot of open models. Because there is no competitive open model from OpenAI, we wanted to plug that gap.”

The infrastructure providers fueling Chinese AI adoption

You don’t need to call Chinese APIs to use Chinese models. A mature infrastructure ecosystem now hosts DeepSeek, Qwen, and others with latency comparable to US-hosted APIs:

| Provider | Speed (tok/s) | Key Models | Notes |
|---|---|---|---|
| Groq | 276 (70B) | Llama, open models | LPU architecture, free tier available |
| Together AI | 400+ (8B) | DeepSeek, Qwen, Llama | Sub-100ms latency on 200+ models |
| Fireworks | ~150 | DeepSeek V3, Llama | 9x faster RAG than Groq |
| Cerebras | 1,800 (8B) | Llama 3.1 | Fastest available, 8K context limit |
| OpenRouter | Varies | 300+ models | Unified API with automatic failover |

Cerebras delivers 1,800 tokens per second on Llama 3.1 8B—20x faster than GPU solutions—though limited to 8K context. Groq offers the best balance of speed (276 tok/s on 70B models) and cost ($0.64/M blended). Together AI and Fireworks provide the broadest model selection with enterprise-grade reliability.
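Because hosts such as OpenRouter and Together AI expose OpenAI-compatible chat-completions endpoints, switching to a hosted DeepSeek or Qwen model is mostly a matter of changing the base URL and model ID. The sketch below builds such a request with the standard library but doesn’t send it; the OpenRouter base URL is its OpenAI-compatible endpoint, while the model ID and the `OPENROUTER_API_KEY` variable name are assumptions to check against the provider’s docs.

```python
# Build (but do not send) an OpenAI-compatible chat-completions request to a third-party host.
# MODEL and the environment variable name are assumptions; check the provider's model list for exact IDs.
import json
import os
import urllib.request

BASE_URL = "https://openrouter.ai/api/v1"   # Together AI's equivalent is https://api.together.xyz/v1
MODEL = "deepseek/deepseek-chat"            # hypothetical model ID


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    body = {"model": MODEL, "messages": [{"role": "user", "content": prompt}], "max_tokens": 256}
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("Summarize MoE routing in one sentence.",
                        os.environ.get("OPENROUTER_API_KEY", "sk-test"))
    # To send it: json.load(urllib.request.urlopen(req))["choices"][0]["message"]["content"]
    print(req.get_method(), req.full_url)
```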

The distillation controversy that won’t die

Did DeepSeek train on ChatGPT outputs? The question hangs over Chinese AI’s legitimacy. Microsoft and OpenAI claim to have identified suspicious data extraction through OpenAI’s API by DeepSeek-linked accounts in late 2024. White House AI czar David Sacks stated there is “substantial evidence” of distillation.

DeepSeek hasn’t admitted to distilling its primary models. And critically, the company’s technical innovations—MoE architecture, MLA attention, FP8 training—represent genuine engineering breakthroughs that couldn’t be extracted from any API. The distillation debate may matter less than the capabilities those innovations enabled.

Legal recourse appears limited regardless. AI outputs may not be copyrightable, any breach of OpenAI’s terms of service would be a contractual matter rather than a criminal one, and as Stanford’s Mark Lemley noted: “Even assuming DeepSeek trained on OpenAI’s data, I don’t think OpenAI has much of a case.”

What this means for developers choosing models in 2025

The practical question isn’t geopolitical—it’s technical. Here’s what the benchmarks don’t tell you:

For tool calling and agents: GLM-4.5 leads on raw tool-calling success (90.6%), but GPT-5 and Claude Sonnet 4.5 remain more reliable for complex multi-step workflows. Local models struggle significantly—Docker’s evaluation found common failures including eager invocation (calling tools unnecessarily) and invalid arguments. Qwen 2.5 offers the best local tool-calling performance.
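Of the two failure modes Docker reported, invalid arguments are the one you can catch mechanically before anything executes; eager invocation needs prompt or policy changes instead. A minimal validation sketch, assuming a hypothetical `search_docs` tool and a simplified schema format (a real deployment would validate against the tool’s full JSON Schema):

```python
# Reject malformed tool calls before executing them: unknown tools, missing or mistyped arguments.
# The schema format is a simplified, hypothetical subset of JSON Schema (required fields + Python types).
from typing import Dict, List, Set

TOOLS: Dict[str, Dict[str, type]] = {
    "search_docs": {"query": str, "max_results": int},  # hypothetical tool
}
REQUIRED: Dict[str, Set[str]] = {"search_docs": {"query"}}


def validate_call(name: str, args: dict) -> List[str]:
    """Return a list of problems; an empty list means the call is safe to dispatch."""
    if name not in TOOLS:
        return [f"unknown tool {name!r}"]
    schema, problems = TOOLS[name], []
    for field in sorted(REQUIRED.get(name, set()) - set(args)):
        problems.append(f"missing required argument {field!r}")
    for field, value in args.items():
        expected = schema.get(field)
        if expected is None:
            problems.append(f"unexpected argument {field!r}")
        # bool is a subclass of int in Python, so reject it explicitly for int fields
        elif not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            problems.append(f"{field!r} should be {expected.__name__}, got {type(value).__name__}")
    return problems


if __name__ == "__main__":
    print(validate_call("search_docs", {"query": "MLA", "max_results": "5"}))
```

Returning the problem list, rather than raising, lets the agent loop feed the errors back to the model so it can correct the call on the next turn.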

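For coding: Claude Sonnet 4 (72.7% on SWE-bench Verified) and GPT-5.1 (76.3%) still lead, but DeepSeek V3.2 (64.2%, roughly 85% of the leaders’ scores) and Qwen2.5-Coder deliver most of that performance at a small fraction of the cost; DeepSeek’s list prices are under a tenth of Claude Sonnet 4’s. For high-volume code generation, the economics favor Chinese open-weight models.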

For reasoning and math: GPT-5 with tools achieves 100% on AIME 2025. But DeepSeek V3.2’s 96% without tools suggests the gap is closing—and at dramatically lower cost.

For production reliability: Enterprise teams should run fallback configurations across providers; OpenRouter offers automatic failover at roughly 5% overhead. Plan for failure, too: according to Anthropic’s 2024 developer survey, 73% of LLM applications fail before reaching users, so implement retry logic with exponential backoff regardless of which model you choose.
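Retry and fallback logic is short enough to write by hand. A sketch assuming hypothetical provider callables and a stand-in `ProviderError` for transient failures (timeouts, rate limits, 5xx responses); it uses exponential backoff with full jitter, and the retry count and delays are illustrative defaults rather than recommendations.

```python
# Retry each provider with exponential backoff and full jitter, then fall back to the next one.
# Providers are hypothetical callables; swap in real clients and catch their specific error types.
import random
import time
from typing import Callable, Sequence


class ProviderError(Exception):
    """Stand-in for a transient failure (timeout, 429, 5xx) raised by a client."""


def call_with_fallback(providers: Sequence[Callable[[str], str]], prompt: str,
                       retries: int = 3, base_delay: float = 0.5, max_delay: float = 8.0,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    errors = []
    for provider in providers:
        for attempt in range(retries):
            try:
                return provider(prompt)
            except ProviderError as exc:
                errors.append(exc)
                if attempt < retries - 1:
                    # Full jitter: wait a random time up to base_delay * 2^attempt, capped at max_delay.
                    sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    raise RuntimeError(f"all providers failed after {len(errors)} attempts") from (errors[-1] if errors else None)


def flaky(prompt: str) -> str:
    raise ProviderError("503 Service Unavailable")


def healthy(prompt: str) -> str:
    return f"ok: {prompt}"


if __name__ == "__main__":
    print(call_with_fallback([flaky, healthy], "ping", sleep=lambda s: None))  # ok: ping
```

Injecting `sleep` keeps the function testable without real waits; in production you would also stop retrying immediately on non-transient errors such as authentication failures.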

The uncomfortable conclusion

Chinese AI labs aren’t just competing—they’re defining the open-weight frontier. DeepSeek V3.2, Kimi K2, Qwen3, GLM-4.5, and MiniMax M2 collectively offer capabilities that match or exceed Western counterparts on most benchmarks, at costs that make American pricing look like luxury goods.

The Stanford AI Index 2025 quantifies the shift: benchmark gaps that were 17-31 percentage points in late 2023 narrowed to 0.3-8 percentage points by late 2024. On some measures, Chinese models now lead.

For developers and business leaders, the question isn’t whether to engage with Chinese open-weight models—it’s how. The combination of permissive licensing, local deployment options, and dramatically lower costs makes ignoring them a competitive handicap. Whether that advantage persists depends on whether American labs can match the efficiency innovations born from China’s chip restrictions, or whether OpenAI’s gpt-oss and Meta’s Llama can close the gap before Chinese models pull further ahead.

Right now, the momentum is clear. The open-weight AI race has a frontrunner, and it’s not who most expected.