Here’s something that most people glossed over when iOS 26 dropped: Apple didn’t just ship a prettier interface. It shipped a 3-billion parameter language model inside every device running Apple silicon — and then quietly handed developers a set of Swift APIs to build on top of it. No API keys. No monthly bills. No data leaving the device.

That’s not a minor footnote. That’s a fundamental shift in what “app intelligence” means.

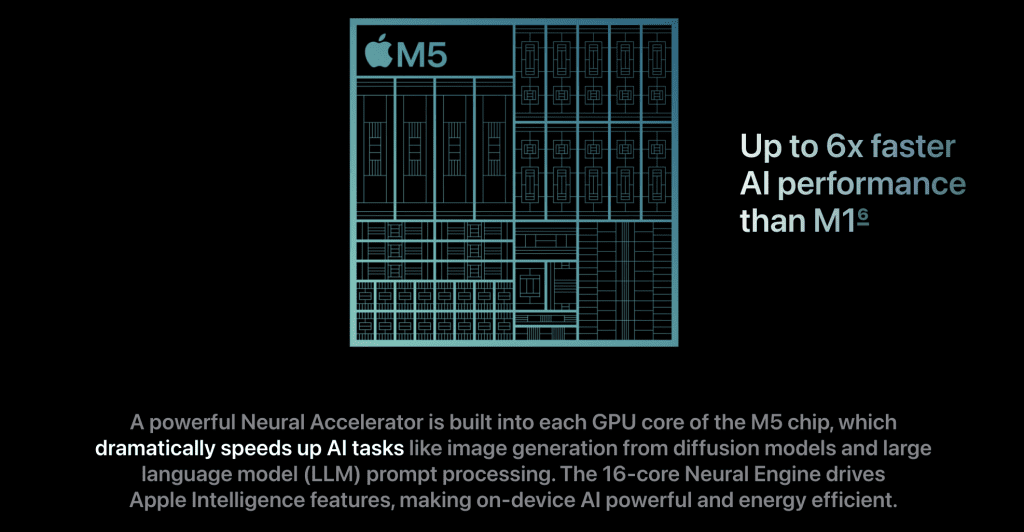

I’ve been watching Apple’s AI stack evolve for years, and the Foundation Models framework announcement felt like the moment the pieces finally clicked. This isn’t Apple catching up to OpenAI or Google. It’s Apple playing an entirely different game — one where the rules are privacy, performance, and permanent offline access. And with the M5 chip’s dedicated Neural Accelerators, the hardware now matches the ambition.

Let’s get into what’s actually here, how it works, and what it means if you’re building apps in 2026.

The 3B Model: Small Number, Massive Implication

Three billion parameters sounds small next to GPT-5’s trillions or even the 70B models people run locally on AirLLM. But those comparison are structurally misleading.

Apple’s on-device model isn’t competing on raw size. It’s engineered for a specific task: running inference at sub-100ms latency on a phone or laptop, entirely offline, with zero cloud dependency. To do that, every parameter is quantized to 2 bits — meaning the model’s effective memory footprint is a fraction of what a 3B model would normally require. Think of it like compressing a lossless audio file to a highly efficient codec. Quality drops slightly, but what you gain is the ability to play it on any device, anytime, without a streaming connection.

The results? Apple reports sub-100ms time-to-first-token on M-series devices. On M5 hardware, the new Neural Accelerators — one per GPU core — handle matrix multiplications at over 45 TOPS (Trillion Operations Per Second), roughly 3.5x the AI throughput of M4. That’s not incremental progress. That’s a generation jump.

The 153.6 GB/s unified memory bandwidth on M5 is the silent hero here. LLM inference is memory-bandwidth-bound, not compute-bound. It doesn’t matter how many TOPS you have if the data pipeline can’t feed the accelerator fast enough.

Apple’s unified memory architecture, where the CPU, GPU, and Neural Engine all share the same memory pool, sidesteps the bottleneck that plagues discrete GPU setups. And it does so in a way that sequential attention-style optimizations can fully exploit — no data copying between VRAM and system RAM.

What Developers Actually Get: Core ML, Metal 4, and the Foundation Models API

This is where it gets concrete. Apple’s developer AI stack in 2026 is a three-layer sandwich:

Layer 1: Foundation Models API (highest level)

The Foundation Models framework exposes the on-device 3B LLM directly via Swift. You create a LanguageModelSession, attach instructions and tools, and start generating text. Here’s the minimal working example:

import FoundationModels

// Initialize a session with system instructions

let session = LanguageModelSession(

instructions: "You are a helpful assistant for a recipe app. Be concise."

)

// Single-turn response

let response = try await session.respond(to: "What can I make with chicken and lemon?")

print(response.content)

That’s it. No API key, no URLSession call, no authentication dance. The model runs entirely on-device, and the result is in your hands in under 100ms on any M-series device.

It gets more interesting with guided generation. Using the @Generable macro, you can force the model to output structured Swift types — not strings that you then have to parse:

@Generable

struct RecipeSuggestion {

@Guide(description: "Name of the dish")

var name: String

@Guide(description: "Estimated cooking time in minutes", .range(5...120))

var cookingTimeMinutes: Int

@Guide(description: "Difficulty level")

var difficulty: DifficultyLevel

}

let suggestion = try await session.respond(

to: "Suggest a quick chicken dish",

generating: RecipeSuggestion.self

)

// suggestion.cookingTimeMinutes is guaranteed to be between 5-120

// No JSON parsing. No hallucinated keys.

This is exactly the kind of structured output that Anthropic’s tool calling overhaul was chasing with its programmatic approach — except Apple baked it into the type system itself. The @Guide macro acts as a constraint layer, so the model literally cannot produce outputs that violate your schema. That’s a reliability guarantee that cloud APIs still struggle to match consistently.

Layer 2: Core ML (mid-level)

For developers who want to bring their own models — custom fine-tunes, vision classifiers, segmentation networks — Core ML is the conversion-and-deployment layer. You convert from PyTorch or TensorFlow, and Core ML handles routing execution to the right hardware: ANE (Apple Neural Engine) for models it recognizes, GPU for everything else.

With M5, Core ML automatically routes workloads to the new per-core Neural Accelerators, not just the central 16-core Neural Engine. This is a significant change. Prior to M5, only the Neural Engine natively handled ML operations.

Now, every GPU core has a dedicated accelerator capable of running tensor operations. The practical effect: parallel ML workloads no longer compete with the Neural Engine for bandwidth. A vision model and a language model can run simultaneously without stepping on each other’s feet.

Layer 3: Metal Performance Shaders + Metal 4 (lowest level)

For the real power users — people building custom training loops or inference kernels — Metal 4 introduced first-class tensor support directly into the GPU shading language. This is a big deal. Previously, running neural network inference in a Metal shader required workarounds and performance compromises. Now, tensor types are native citizens in the Metal API.

Metal Performance Shaders (MPS) has similarly evolved. The MPS Graph framework lets developers build computational graphs that execute across the Neural Engine, GPU, and CPU in a coordinated schedule — the runtime decides the optimal routing.

And because Metal 4 can embed inference operations directly into graphics pipelines, a game rendering a scene can now also run AI post-processing (upscaling, NPC behavior, animation refinement) in the same command buffer pass, without context-switching overhead.

The Privacy Architecture: Why This Matters More Than the Benchmark Numbers

Let me be direct: the performance story is impressive, but the privacy architecture is the actual moat.

Every call to the Foundation Models API stays on-device by default. Apple’s Private Cloud Compute (PCC) handles more complex requests that need more power than the device can supply — but even then, the data is processed on Apple silicon servers, not stored, and not accessible to Apple employees. Independent security researchers can audit the PCC code running in production. That’s a verifiable privacy guarantee, not just a marketing claim.

Compare that to the alternatives. Calling GPT-5.3-Codex-Spark via API? Your data leaves the device, passes through OpenAI’s infrastructure, and is subject to their retention and training data policies. Calling Gemini? Same story. These aren’t bad choices for every use case — the cloud models are dramatically more capable for complex reasoning tasks — but they’re not appropriate for apps handling health data, legal documents, personal conversations, or financial information.

Apple updated its App Review Guidelines in late 2025 to require explicit user consent before apps share data with third-party AI systems. That’s a regulatory fence around the market for on-device use cases. Any iOS app that needs AI features for private data essentially has to use Foundation Models unless users actively opt in to external processing.

That’s not a coincidence. That’s a strategic moat.

What’s the hard constraint here? The 3B on-device model has real limits. It’s not going to write a legal brief, analyze 200-page financial filings, or achieve Claude-level reasoning on complex multi-step tasks.

The Canvas-of-Thought reasoning architectures that are making cloud models smarter require far more parameters and context than a 3B on-device model can support. Apple knows this — which is why PCC exists as a fallback. But for the 80% of app AI use cases that need classification, structured extraction, summarization, and conversational interfaces on local data, the Foundation Models API is more than sufficient.

What This Actually Changes for Developers in 2026

The practical implications flow in three directions.

For iOS/macOS developers: The cost calculus for building AI features just changed. Instead of paying per-token API costs that scale with user volume, you pay nothing for on-device inference after shipping the base model with iOS. An app with 10 million active users doing 100 AI queries per day isn’t facing a $300K monthly API bill. That’s the difference between a viable indie app and one that needs VC money to survive.

For enterprise app builders: Regulated industries that couldn’t touch cloud AI — healthcare, legal, government — can now build AI features that stay within their compliance boundaries. The Sarvam Kaze-style intelligence that on-device AI enables for smart glasses applies equally well to hospital-floor tablets and legal workstations.

For the broader ecosystem: Apple’s approach puts competitive pressure on every cloud-first AI service provider. When basic LLM capabilities are free, offline, and private as a platform feature, the pitch for “just use our API” gets harder. This connects directly to the broader agentic AI movement — as MCP (Model Context Protocol) support lands in Xcode 26.3 with Claude and Codex integration, Apple is quietly building the developer infrastructure for on-device agentic workflows that don’t require a data center.

I’ve been tracking the GLM-5 and local model benchmarks for a while. The reality is, on-device models have historically lagged cloud models by about two generations. Apple’s 3B model with Neural Accelerator routing on M5 is the closest the gap has ever been for everyday tasks. It won’t match Claude Opus 4.6’s reasoning depth or GLM-5’s coding performance — but for intent classification, entity extraction, smart search, and conversational UI? It’s now competitive.

The Bottom Line

Apple didn’t release Foundation Models because it wanted to compete with OpenAI. It released it because on-device AI is the only viable path to AI features that work without network access, respect user privacy without policy gymnastics, and scale to hundreds of millions of users without crushing your infrastructure budget.

The 3-billion parameter number is intentionally modest. The architecture under it — 2-bit quantization, M5 Neural Accelerators, Metal 4 tensor shaders, per-session context management — is the real engineering story. And the business model innovation — zero marginal cost for developers, zero data exposure for users — might be harder to copy than any benchmark number.

The constraint is real: this model has a ceiling. Complex reasoning, long-context analysis, and creative generation at the highest level still need cloud. But Apple’s bet is that the ceiling is high enough for the majority of what people actually need AI to do in an app. Looking at the developer adoption curve since iOS 26 shipped, that bet is looking increasingly correct.

FAQ

What devices support the Foundation Models framework?

The Foundation Models framework requires Apple silicon. This means iPhone models with A17 Pro or newer, all iPad Pro and iPad Air with M-series chips, all Mac models with M1 or later, and Apple Vision Pro. Devices must be running iOS 26, iPadOS 26, macOS 26, or visionOS 3 and later.

Can I use the Foundation Models API for free?

Yes. There are no API costs for the Foundation Models framework. Inference runs entirely on-device using the device’s Neural Engine and GPU Neural Accelerators. You pay no per-token, per-call, or subscription fee to Apple for using the framework. Standard Apple Developer Program membership ($99/year) is required for app development and distribution.

How does the Apple on-device 3B model compare to running Llama locally?

The Apple model is smaller (3B vs 70B for Llama 3.1) but is specifically tuned and quantized for Apple silicon efficiency. It runs faster (sub-100ms first token vs 3-10+ seconds for 70B) and requires no external infrastructure. For generalist tasks — summarization, classification, entity extraction — the Apple model performs comparably. For complex coding, math, or long-form generation, open-weight models like those covered in our local model guide will outperform it, but they require much more hardware headroom.

Does Private Cloud Compute mean Apple can see my data?

No. Apple’s Private Cloud Compute architecture is designed so that data processed in the cloud is not stored by Apple and is not accessible to Apple employees. The code running on PCC servers can be audited by independent security researchers. Only the minimal data needed for the specific request is sent, and it is never used to train Apple’s models.

What’s the difference between the Foundation Models API and Core ML?

The Foundation Models API gives you access to Apple’s pre-built 3B on-device language model with a high-level Swift interface for tasks like text generation, structured output, and tool calling. Core ML is for integrating your own custom-trained machine learning models (classifiers, object detectors, custom LLMs) into your app. They’re complementary: Foundation Models for language tasks using Apple’s model, Core ML for custom model deployment.