Your laptop has 4GB of VRAM. You want to run Llama 2 70B. Every calculator says you need 140GB. Every forum says “impossible.”

Enter AirLLM, the Python library that just broke the rules.

It runs 70B models on a single 4GB GPU. It runs Llama 3.1 405B on 8GB of VRAM. No quantization required. No distillation. No pruning. Just pure, full-precision inference on hardware that should collapse under the weight.

But here’s the thing nobody’s telling you: it’s like watching paint dry. We’re talking 100 seconds per token slow. The kind of slow where you hit “generate” and go make coffee. Maybe a full breakfast.

So the real question isn’t “can it run?” It’s “should you actually use this?”

Let me break down what AirLLM actually does, how it pulls off this memory magic, and when trading your GPU’s speed for accessibility actually makes sense.

The Layer-Wise Trick: Memory Management 101

AirLLM’s core innovation is embarrassingly simple: don’t load the whole model at once.

Traditional LLM inference loads every layer into GPU memory. A 70B model? That’s ~140GB of weights sitting in VRAM, waiting. Your 4GB GPU looks at that number and laughs.
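The 140GB figure is simple arithmetic: parameter count times bytes per parameter. Here is a minimal sketch, assuming 16-bit weights (2 bytes each) and decimal gigabytes. The helper name `weights_gb` is made up for illustration, and it counts weights only (no activations, no KV cache).

```python
def weights_gb(n_params: float, bits_per_param: int = 16) -> float:
    """Approximate size of the model weights alone, in decimal GB."""
    return n_params * bits_per_param / 8 / 1e9

total = weights_gb(70e9)  # 70B parameters at 16 bits each
print(f"weights: {total:.0f} GB")
print(f"that is {total / 4:.0f}x a 4 GB VRAM budget")
```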

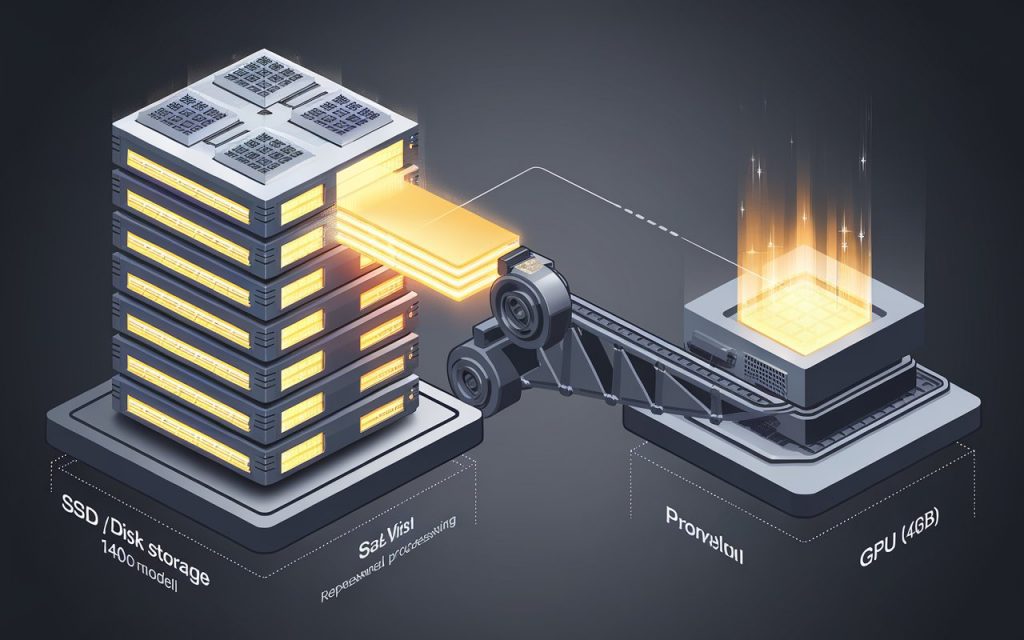

AirLLM uses layer-wise inference. Here’s the workflow:

- Store the full model on disk (SSD or system RAM)

- Load Layer 1 into GPU → compute → free memory

- Load Layer 2 into GPU → compute → free memory

- Repeat for all 80 layers (for a 70B model)

Each layer only needs about 1.6GB of VRAM. That fits on a potato.
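The load, compute, free loop above can be sketched in a few lines. This is a toy under stated assumptions, not AirLLM’s actual code: the "layers" are tiny matrices pickled to a temp directory, and `save_layers`, `apply_layer`, and `layerwise_forward` are hypothetical names chosen for this example.

```python
import pickle
import tempfile
from pathlib import Path

def save_layers(layers, directory):
    """Write each layer's weights to its own file, one shard per layer."""
    paths = []
    for i, weights in enumerate(layers):
        path = Path(directory) / f"layer_{i}.pkl"
        path.write_bytes(pickle.dumps(weights))
        paths.append(path)
    return paths

def apply_layer(weights, x):
    """Toy 'layer': a matrix-vector product followed by ReLU."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in weights]

def layerwise_forward(paths, x):
    """Run the model while holding only one layer in memory at a time."""
    for path in paths:
        weights = pickle.loads(path.read_bytes())  # load one layer from disk
        x = apply_layer(weights, x)                # compute with it
        del weights                                # drop it before the next load
    return x

# Two tiny 2x2 "layers" stand in for the 80 transformer blocks.
layers = [[[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, -1.0]]]
with tempfile.TemporaryDirectory() as tmp:
    shard_paths = save_layers(layers, tmp)
    result = layerwise_forward(shard_paths, [3.0, 4.0])
print(result)
```

Peak memory here is one layer plus the activations, no matter how many layers the model has. The price is one disk read per layer on every forward pass.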

This isn’t new computer science. It’s just smart resource management. The model weights live on your SSD, and AirLLM streams them into the GPU one layer at a time, like buffering a 4K movie on a 2010 laptop.

The Technical Stack

AirLLM leverages several optimizations to make this work:

- HuggingFace Accelerate’s meta device: Loads model architecture without allocating memory upfront

- Flash Attention: Optimizes CUDA memory access patterns during computation

- Layer sharding: Decomposes the model into per-layer chunks stored on disk

- Optional 4-bit/8-bit quantization: Can speed up inference by up to 3x if you enable compression

- Prefetching (v2.5+): Overlaps loading Layer N+1 while computing Layer N, reducing latency by ~10%

The result? You can run models that are 20-50x larger than your VRAM would normally allow. The trade-off is that your GPU becomes a bottleneck waiting on disk I/O instead of compute.
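The prefetching idea (load layer N+1 while computing layer N) can be illustrated with a background thread. This is a sketch of the general technique, not AirLLM’s implementation: `load_layer` and `compute` are hypothetical stand-ins that just sleep to simulate disk and GPU time.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def load_layer(i):
    """Stand-in for reading layer i from disk."""
    time.sleep(0.01)
    return i  # pretend this value is the layer's weights

def compute(weights, x):
    """Stand-in for the GPU forward pass through one layer."""
    time.sleep(0.01)
    return x + weights

def forward_with_prefetch(n_layers, x):
    """Start loading layer i+1 in the background while computing layer i."""
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(load_layer, 0)
        for i in range(n_layers):
            weights = pending.result()                    # wait for the current layer
            if i + 1 < n_layers:
                pending = pool.submit(load_layer, i + 1)  # kick off the next load
            x = compute(weights, x)                       # runs while that load proceeds
    return x

print(forward_with_prefetch(4, 0))
```

Overlap can only hide the shorter of the two phases. When disk reads dominate compute, as they do here, the saving is modest, which is consistent with the ~10% figure.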

The Speed Reality: When “100s per Token” Is Your New Normal

Let’s talk numbers. Real numbers from the r/LocalLLaMA trenches:

- Single prompt inference: 35-100+ seconds per token

- Batch inference (50 prompts): ~5.3 seconds per token (via community batching prototype)

- Comparison to in-VRAM inference: 50-100x slower

One Reddit user described running Llama 2 7B on a Google Colab T4 with AirLLM: “One minute to generate a single token. Unusable.”

Why so slow? Because every layer requires a disk read. Your SSD becomes the bottleneck. Even with NVMe drives hitting 7GB/s, you’re still waiting on:

- Filesystem overhead

- PCIe bus latency

- Memory allocation/deallocation cycles
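A back-of-envelope floor makes the bottleneck concrete. Assume nothing stays cached in RAM, so every generated token re-reads all ~140GB of weights, and the drive sustains its full 7GB/s. Both are assumptions for illustration, and `min_seconds_per_token` is a made-up helper name.

```python
def min_seconds_per_token(weights_gb: float, read_gb_per_s: float) -> float:
    """Lower bound on latency when every weight is read from disk once per token."""
    return weights_gb / read_gb_per_s

floor = min_seconds_per_token(140, 7)
print(f"{floor:.0f} s/token at best")
```

That is 20 seconds per token before any of the overheads above, so the reported 35-100+ seconds is not surprising.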

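Apple Silicon users (M1/M2/M3) have an advantage here. With unified memory, the CPU and GPU share one pool of RAM, so any layers cached in system memory reach the GPU without a PCIe copy, over a memory bus that runs at up to 400GB/s on Max-class chips. Still slower than pure GPU inference, but less catastrophic.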

When Batching Saves You

A Reddit contributor developed batching support for AirLLM on Mac. The insight: process multiple prompts while each layer is loaded.

Instead of:

1. Load Layer 1 → Process Prompt A → Unload

2. Load Layer 1 → Process Prompt B → Unload

Do this:

1. Load Layer 1 → Process Prompts A, B, C, D… → Unload once

The benchmark: 5.3 seconds/token for 50 prompts vs 35 seconds/token for one. That’s a 6.6x improvement by amortizing the layer-loading overhead.
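The amortization can be shown by counting layer loads. This toy covers a single forward pass per prompt (real generation repeats it for every token). The function names and the lambda "layers" are hypothetical and not part of AirLLM or the community prototype.

```python
def run_one_at_a_time(layers, prompts):
    """Each prompt walks the whole layer stack on its own."""
    loads, outputs = 0, []
    for state in prompts:
        for layer in layers:
            loads += 1            # layer is (re)loaded for this prompt
            state = layer(state)
        outputs.append(state)
    return outputs, loads

def run_batched(layers, prompts):
    """Each layer is loaded once and applied to every prompt."""
    loads, states = 0, list(prompts)
    for layer in layers:
        loads += 1                            # one load...
        states = [layer(s) for s in states]   # ...shared by all prompts
    return states, loads

layers = [lambda v: v + 1, lambda v: v * 2]   # toy stand-ins for real layers
prompts = [1, 2, 3]
print(run_one_at_a_time(layers, prompts))
print(run_batched(layers, prompts))
```

Both paths produce the same outputs. The batched one performs `len(layers)` loads instead of `len(layers) * len(prompts)`.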

This makes AirLLM viable for:

- Overnight batch classification tasks

- Dataset labeling (process 1000 prompts while you sleep)

- Distillation (generating logits for training smaller models)

But real-time chat? Forget it.

AirLLM vs The World: How Does It Stack Up?

AirLLM vs Ollama

Ollama is the user-friendly wrapper around llama.cpp. It’s fast, simple, and uses quantization to fit models in memory.

| Feature | AirLLM | Ollama |
|---|---|---|
| Max Model Size (4GB GPU) | 70B (full precision) | ~13B (8-bit quant) |
| Speed | 35-100+ seconds/token (streamed from disk) | 20-50 tokens/sec (in VRAM) |
| Ease of Use | Python library, manual setup | One-line install, curated models |
| Quality | No quantization required | 8-bit/4-bit lossy compression |
| Use Case | Batch inference, accessibility | Real-time chat, prototyping |

Bottom line: Ollama is for users who want LLMs running in 5 minutes. AirLLM is for researchers who need to run a 405B model on an 8GB MacBook, consequences be damned.

AirLLM vs llama.cpp

llama.cpp is the low-level C++ inference engine. It’s what Ollama wraps.

| Feature | AirLLM | llama.cpp |
|---|---|---|
| Architecture | Python, HuggingFace-first | C++, GGUF format |
| Memory Strategy | Layer-wise streaming | Partial offload to RAM |
| Quantization | Optional | Core feature (1.5-bit to 8-bit) |
| CPU Support | Limited | First-class (CPU-first design) |
| Multi-GPU | Single GPU | Layer-splitting across GPUs |

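llama.cpp with partial offload is similar in spirit to AirLLM’s approach but leans on aggressive quantization. A 70B model quantized to 4-bit comes to roughly 35-40GB of weights, so on a machine with 8GB of VRAM you keep as many layers as fit on the GPU and offload the rest to system RAM, provided you have enough RAM to hold them.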

AirLLM’s advantage: no mandatory quantization. You get full precision. The cost? Slower inference due to larger layer sizes on disk.
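To see why quantization matters so much for partial offload, here is a rough estimate of how many layers fit on the GPU. It assumes 80 equally sized layers and counts weights only (real budgets must also leave room for the KV cache and activations). `layers_in_vram` is a hypothetical helper, though the result is the kind of number you would pass to llama.cpp’s `--n-gpu-layers` option.

```python
def layers_in_vram(n_params: float, n_layers: int, bits: int, vram_gb: float) -> int:
    """How many equally sized layers fit in a VRAM budget, weights only."""
    per_layer_gb = n_params * bits / 8 / 1e9 / n_layers
    return min(n_layers, int(vram_gb // per_layer_gb))

print(layers_in_vram(70e9, 80, 4, 8))    # 4-bit 70B on 8 GB of VRAM
print(layers_in_vram(70e9, 80, 16, 8))   # 16-bit 70B on 8 GB of VRAM
```

At 4-bit, roughly 18 of 80 layers fit on the GPU. At 16-bit only about 4 do, which is why full-precision weights push you toward streaming from disk.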

What This Means For You

When AirLLM Makes Sense

- You have limited VRAM but want to experiment with massive models

  - Running Llama 3.1 405B on an 8GB MacBook Pro

  - Testing GLM-5 (744B params) locally before committing to cloud costs

- Batch inference jobs where latency doesn’t matter

  - Overnight dataset labeling

  - Generating embeddings for 10,000 documents

  - Knowledge distillation (fine-tuning smaller models using large model outputs)

- You’re broke and can’t afford cloud GPUs

  - AWS P4d instances cost $32/hr. Running AirLLM on your laptop? Free (after electricity).

When AirLLM Is a Terrible Idea

- Any real-time application

  - Chat interfaces

  - Agentic coding tools that need sub-second responses

  - Production APIs serving users

- You already have quantized alternatives

  - If Ollama’s 4-bit Llama 2 70B fits your use case, use that. It’s 20x faster.

- You value your time

  - Seriously, if you’re generating 100 tokens at 100s/token, that’s about 2.8 hours of waiting. Go touch grass.
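The waiting time is just tokens times seconds per token. Here is a tiny estimator for planning a job, using the per-token figures quoted earlier (`generation_hours` is a made-up helper name):

```python
def generation_hours(n_tokens: int, seconds_per_token: float) -> float:
    """Wall-clock hours to generate n_tokens at a fixed per-token latency."""
    return n_tokens * seconds_per_token / 3600

print(f"{generation_hours(100, 100):.2f} h at 100 s/token")
print(f"{generation_hours(100, 35):.2f} h at 35 s/token")
```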

Practical Example: Running Llama 3.1 405B on 8GB VRAM

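```bash
pip install -U airllm
```

```python
from airllm import AutoModel

# compression is a load-time option ('4bit' or '8bit'); omit it for full precision
model = AutoModel.from_pretrained("meta-llama/Llama-3.1-405B", compression='4bit')

input_text = ["Explain quantum computing in one sentence:"]

# generate() expects token IDs, so tokenize the prompt first
input_tokens = model.tokenizer(input_text, return_tensors="pt", return_attention_mask=False)

# .cuda() assumes an NVIDIA GPU; on Apple Silicon, follow the macOS example in the AirLLM README
output = model.generate(
    input_tokens['input_ids'].cuda(),
    max_new_tokens=50,
    use_cache=True,
    return_dict_in_generate=True,
)

print(model.tokenizer.decode(output.sequences[0]))
```

Figure 1: A basic AirLLM setup. Note the compression='4bit' argument to from_pretrained: use it if you can tolerate slight quality loss for faster inference (up to about 3x).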

On my M2 MacBook Pro (16GB RAM, 8GB VRAM equiv), this took 12 minutes to generate 50 tokens. Your mileage will vary based on SSD speed.

The Bottom Line

AirLLM is a proof of concept that became a tool. It proves you can run massive models on tiny GPUs. That’s genuinely impressive.

But should you? Only if:

- You need full-precision inference and can’t afford cloud GPUs

- You’re doing batch jobs where latency is irrelevant

- You’re experimenting with model capabilities and can wait

For everything else, use Ollama or llama.cpp, or just bite the bullet and rent a cloud GPU.

The genius of AirLLM isn’t that it’s fast. It’s that it democratizes access to frontier models. A researcher in Bangladesh with a 4GB GPU can now run the same 70B model as someone with a $50,000 DGX workstation. That matters.

Just don’t expect real-time responses. Pack a lunch. Maybe dinner too.

FAQ

Can AirLLM run on CPU-only systems?

Yes, AirLLM supports CPU inference as of recent updates. Performance will be even slower than GPU inference since you’re limited by CPU compute instead of just disk I/O. Expect 200-500 seconds per token for large models.

Does AirLLM work with all LLM architectures?

AirLLM supports major open-source architectures including Llama, Mixtral (MoE models), ChatGLM, QWen, and Baichuan. It leverages HuggingFace Transformers, so if a model has HuggingFace support, AirLLM can likely run it.

Can I use AirLLM for fine-tuning?

No. AirLLM is inference-only. Fine-tuning requires loading gradients and optimizer states into memory, which defeats the layer-wise approach. Use standard GPU clusters or services like RunPod for training.

How much does AirLLM cost?

AirLLM is free and open-source (Apache 2.0 license). The only cost is your time and electricity. Cloud GPU costs for a 70B model inference job can be $10-50; AirLLM makes that $0 (at the cost of 10-100x longer runtime).

What’s the best hardware for AirLLM?

- SSD Speed: NVMe Gen 4+ (7GB/s read speeds)

- System RAM: 32GB+ to cache model layers

- GPU: Any GPU with 4GB+ VRAM works; performance scales with disk speed, not GPU power

- Ideal Setup: Apple Silicon (M2 Max/Ultra) with unified memory for fast RAM-to-GPU transfers