Two weeks. That’s how long it took for an AI to design a rocket engine, send the files to a copper 3D printer, and have it hot-fired on a test stand.

It worked on the first try. It produced 5 kN of thrust (about 20,000 horsepower). It didn’t explode.

This is the “Oppenheimer moment” for engineering, but not for the reason you think. It’s not because the AI was “smart.” It’s because the AI could not hallucinate.

While the world is obsessed with Large Language Models (LLMs) that can write poetry but fail at basic math, a quiet revolution called Computational Engineering Models (CEMs) is rewriting the physical world.

The Problem: Why LLMs Can’t Build Bridges

Ask ChatGPT to design a bridge, and it will give you a beautiful description of a bridge. It might even generate a 3D model that looks like a bridge. But if you build it, it will collapse.

Why? Because LLMs are probabilistic. They predict the next token based on statistical patterns. They don’t “know” physics; they know what physics text looks like. In engineering, a 99% probability of success is a 1% probability of catastrophe.

This is the Hallucination Problem. In language, a hallucination is a funny error. In rocketry, it’s a crater.

The Solution: Computational Engineering Models (CEMs)

The engine built by LEAP 71, a Dubai-based engineering company, wasn’t designed by a neural network guessing shapes. It was designed by Noyron, a Computational Engineering Model.

Here is the critical distinction that makes it “hallucination-proof”:

| Feature | Generative AI (LLMs/Diffusion) | Computational Engineering (Noyron) |

|---|---|---|

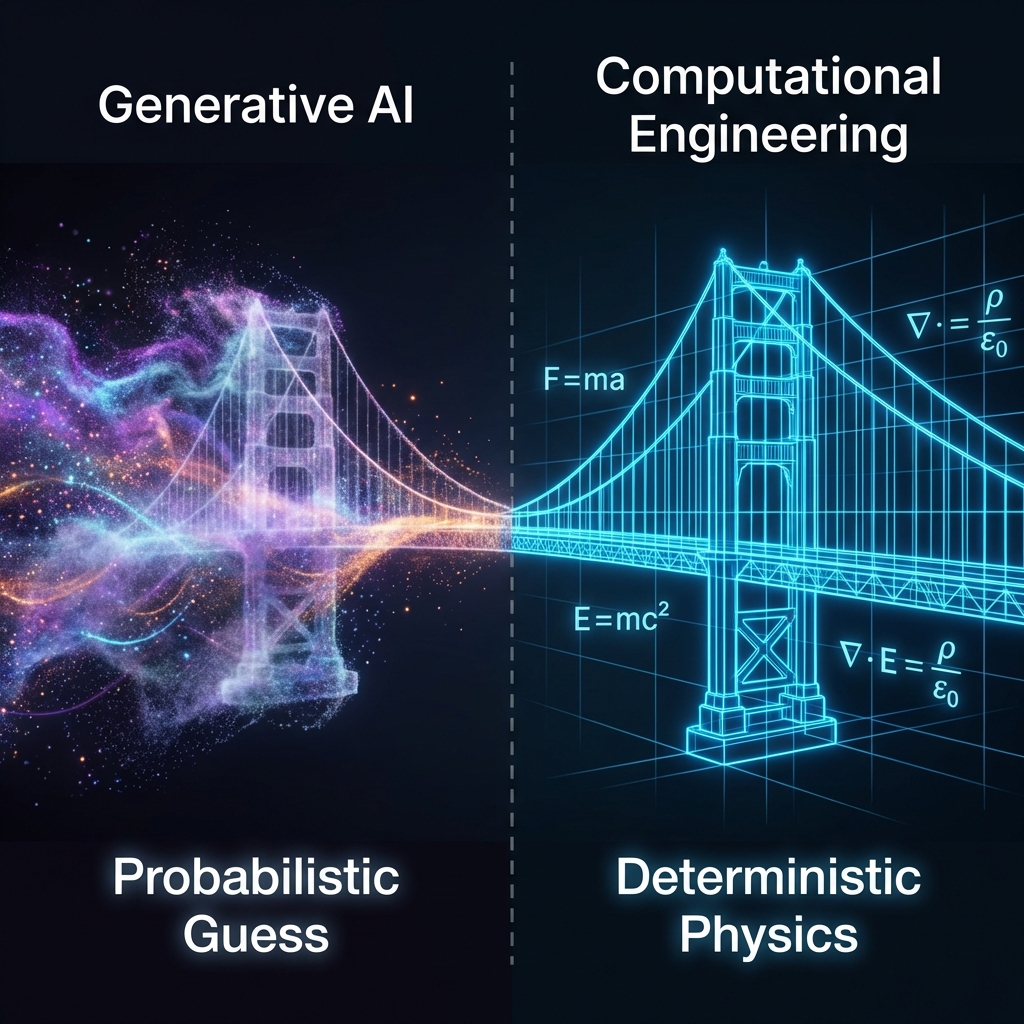

| Method | Probabilistic: “What usually comes next?” | Deterministic: “What solves this equation?” |

| Logic | Pattern Matching | First-Principles Physics |

| Output | Approximate imitation | Validated engineering data |

| Hallucinations | Frequent feature | Mathematically Impossible |

Noyron doesn’t “imagine” a rocket nozzle. It takes your inputs—thrust, fuel type, chamber pressure—and solves for the geometry that satisfies the laws of thermodynamics and fluid dynamics.
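
To make "solving for geometry" concrete, here is a minimal sketch of one textbook sizing step: the ideal rocket relation F = C_F · p_c · A_t, rearranged to get the throat area from thrust and chamber pressure. This is not Noyron's code. The function names, the thrust coefficient of 1.4, and the 5 MPa chamber pressure are assumptions picked for illustration; only the 5 kN thrust figure comes from the story above.

```python
import math

def throat_area_m2(thrust_n, chamber_pressure_pa, thrust_coefficient=1.4):
    """Throat area from the ideal relation F = C_F * p_c * A_t.

    thrust_coefficient is an assumed, illustrative value.
    """
    if thrust_n <= 0 or chamber_pressure_pa <= 0 or thrust_coefficient <= 0:
        raise ValueError("thrust, pressure and thrust coefficient must be positive")
    return thrust_n / (thrust_coefficient * chamber_pressure_pa)

def throat_diameter_m(area_m2):
    """Diameter of a circular throat with the given area."""
    return 2.0 * math.sqrt(area_m2 / math.pi)

# Illustrative inputs: 5 kN thrust, assumed 5 MPa chamber pressure.
area = throat_area_m2(thrust_n=5_000.0, chamber_pressure_pa=5.0e6)
diameter = throat_diameter_m(area)
print(f"throat area: {area * 1e4:.2f} cm^2, diameter: {diameter * 1e3:.1f} mm")
```

The same inputs always give the same throat. There is nothing to sample and nothing to guess, which is the sense of "deterministic" in the table above.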

If a design violates physics (e.g., the wall is too thin to hold the pressure), the model simply cannot generate it. The constraints are hard-coded into the DNA of the system using a voxel-based architecture.
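
A rough sketch of what such a hard constraint can look like in code, using the thin-wall hoop stress formula σ = p · r / t. The constructor refuses to return a wall that is too thin for the pressure. The function names, the safety factor of 2, and the material numbers are hypothetical and only illustrate the idea; Noyron's real checks are not public in this article.

```python
def min_wall_thickness_m(pressure_pa, radius_m, yield_strength_pa, safety_factor=2.0):
    """Thinnest wall for which hoop stress p*r/t stays below yield / safety_factor."""
    allowable_stress_pa = yield_strength_pa / safety_factor
    return pressure_pa * radius_m / allowable_stress_pa

def make_chamber_wall(pressure_pa, radius_m, thickness_m, yield_strength_pa):
    """Return a wall description, or raise if the wall cannot hold the pressure."""
    required_m = min_wall_thickness_m(pressure_pa, radius_m, yield_strength_pa)
    if thickness_m < required_m:
        raise ValueError(
            f"wall of {thickness_m * 1e3:.2f} mm is too thin; "
            f"need at least {required_m * 1e3:.2f} mm"
        )
    return {"radius_m": radius_m, "thickness_m": thickness_m}

# Illustrative numbers: 5 MPa chamber, 50 mm radius, assumed 200 MPa yield strength.
try:
    make_chamber_wall(5.0e6, 0.05, thickness_m=0.001, yield_strength_pa=200.0e6)
except ValueError as err:
    print("rejected:", err)
```

Because the check lives inside the constructor, an invalid wall never exists as an object that later code could pass along by mistake.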

Inside Noyron: A Library of Heuristics

Noyron isn’t a magical black box trained on millions of rocket blueprints. It wasn’t “trained” in the deep learning sense at all. That raises a fair question: if it’s just physics equations and geometry kernels, why do we call it AI?

The “AI” vs. “Deep Learning” Trap

We’ve confused “Artificial Intelligence” with “Statistics.”

ChatGPT is Statistical AI. It learns patterns from data.

Noyron is Logic-Based (Symbolic) AI. It possesses agency to solve problems.

Here is what makes it AI: Autonomous Search Space Exploration.

1. The Combinatorial Explosion: The number of possible shapes for a rocket cooling channel is effectively infinite. A human engineer can imagine and test only three or four variations. Noyron explores millions.

2. Agency: You don’t tell it how to draw the channel. You tell it the goal (keep wall < 200°C). The system autonomously makes decisions—branching, twisting, thickening—to achieve that goal.

3. Constraint-Projected Learning (or search): It proposes a move, checks the physics wrapper (“Did I step off the cliff?”), and proceeds if the move is valid. If not, it backtracks.
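
The propose, check, backtrack loop from the list above can be sketched as a small depth-first search. Everything here is a toy: the channel-width options, the "physics" (wall temperature grows with channel width, pressure drop grows as channels narrow), and the limits are made-up stand-ins for a real solver, and the function names are hypothetical.

```python
def physics_ok(design, heat_loads, max_wall_temp_c=200.0, max_pressure_drop=1.3):
    """Toy physics check on a partial design (one channel width per segment)."""
    for width, heat in zip(design, heat_loads):
        wall_temp_c = heat * width  # toy model: narrower channel cools better
        if wall_temp_c >= max_wall_temp_c:
            return False
    # Toy model: narrower channels cost more pressure drop.
    return sum(1.0 / width for width in design) <= max_pressure_drop

def search(heat_loads, options=(1, 2, 4), design=None):
    """Depth-first search that backtracks as soon as the physics check fails."""
    design = [] if design is None else design
    if len(design) == len(heat_loads):
        return list(design)  # every segment chosen and all checks passed
    for width in options:
        design.append(width)                 # propose a move
        if physics_ok(design, heat_loads):   # check the physics wrapper
            result = search(heat_loads, options, design)
            if result is not None:
                return result
        design.pop()                         # invalid or dead end: backtrack
    return None

print(search([40, 90, 60]))
```

With these toy numbers the search returns `[4, 2, 2]`, and it returns `None` when no combination satisfies the limits. Either outcome is honest: the search produces a design that passed every check, or it reports that there isn't one.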

It’s the difference between a painter (LLM) and a grandmaster chess bot (Logic AI). The chess bot doesn’t “predict” the next move based on what looks pretty; it calculates the winning path through a maze of constraints.

Noyron uses a Computational Geometry Kernel (PicoGK) to manipulate matter at the voxel level, acting like a chess engine for atoms. It doesn’t guess the rocket; it derives it.

Beyond Rockets: The Era of “Constrained AI”

This concept—using hard constraints to tame AI—is spreading to every field where truth matters more than style.

1. Astronomy: Filtering the Noise

In astronomy, AI models scan billions of stars. A hallucination here means “discovering” a galaxy that doesn’t exist. Researchers are now using Physics-Informed Neural Networks (PINNs). These models are penalized not just for getting the wrong answer, but also for violating physical laws such as conservation of energy. If the AI predicts a star moving faster than light, the “physics loss function” slaps it back into reality.
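
A minimal sketch of the idea behind a physics loss, assuming the simplest possible rule: predicted speeds above the speed of light add a penalty on top of the ordinary data error. Real PINNs usually penalize the residual of a governing differential equation computed with automatic differentiation; this toy swaps that for a plain inequality penalty. The function names and the weight of 10 are hypothetical.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458

def data_loss(predicted, observed):
    """Mean squared error between predictions and observations."""
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(predicted)

def physics_penalty(predicted_speeds_km_s):
    """Mean squared amount by which predicted speeds exceed the speed of light."""
    excess = [max(0.0, abs(v) - SPEED_OF_LIGHT_KM_S) for v in predicted_speeds_km_s]
    return sum(e ** 2 for e in excess) / len(excess)

def total_loss(predicted, observed, physics_weight=10.0):
    """Data error plus a weighted penalty for physically impossible predictions."""
    return data_loss(predicted, observed) + physics_weight * physics_penalty(predicted)

# A plausible prediction pays no physics penalty; a superluminal one does.
print(physics_penalty([220.0, 310.0]))
print(physics_penalty([SPEED_OF_LIGHT_KM_S + 10.0]))
```

Note the difference from the hard constraints earlier in the article: a penalty like this discourages impossible outputs during training, but it does not by itself guarantee that none will ever appear.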

2. Biology & Chemistry: Molecule Manufacturing

In drug discovery, “molecular hallucination” is a huge issue. An LLM might invent a chemical structure that looks plausible but is chemically unstable (or explosive).

By wrapping generative models in valence constraints and thermodynamic laws, we get AI that dreams up new drugs, but only ones that can actually exist in our universe.
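
As a rough illustration of a valence constraint, the sketch below filters candidate molecules by checking that no atom has more bonds than its usual maximum. It is heavily simplified: it ignores charges, radicals, and hypervalent atoms, and the valence table, data layout, and function name are assumptions for this example rather than any particular chemistry library's API.

```python
# Usual maximum number of bonds for a few neutral atoms (simplified).
MAX_VALENCE = {"H": 1, "C": 4, "N": 3, "O": 2}

def valence_ok(atoms, bonds):
    """atoms: list of element symbols; bonds: list of (i, j, bond_order) tuples."""
    used = [0] * len(atoms)
    for i, j, order in bonds:
        used[i] += order
        used[j] += order
    return all(used[k] <= MAX_VALENCE[atoms[k]] for k in range(len(atoms)))

water = (["O", "H", "H"], [(0, 1, 1), (0, 2, 1)])
five_bond_carbon = (["C", "H", "H", "H", "H", "H"], [(0, k, 1) for k in range(1, 6)])

# Keep only the candidates a generator proposed that pass the check.
candidates = [water, five_bond_carbon]
valid = [molecule for molecule in candidates if valence_ok(*molecule)]
print(len(valid))
```

Here the generator is free to propose anything, and the filter decides what survives. A thermodynamic stability check would sit in the same place as a second filter.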

The Future is Validated

We are moving past the “Creative Phase” of AI into the “Engineering Phase.” This is the core promise of Physical AI and Robotics, where code must interact with the real world without error.

Creative AI (LLMs) gave us infinite content. Constrained AI (CEMs) will give us infinite physical solutions. Similar to how Ultra RAG 2.0 is solving information retrieval for research, Noyron is solving physical retrieval for manufacturing.

The rocket engine is just the start. When you can mathematically guarantee that an AI design won’t fail, you don’t just speed up engineering. You remove the fear of the unknown.

Hallucination is a feature of creativity. Accuracy is a feature of survival. For the things that matter—our bridges, our medicine, our rockets—we don’t need a poet. We need a calculator that dreams.