Here’s the dirty secret of modern software development: We deploy code fifty times a day, but we penetration test it once a year. Maybe twice, if the auditors are watching.

That leaves a 364-day gap—a “Security Valley of Death”—where your code is effectively naked. And in 2026, with AI agents writing 40% of our commits, vulnerabilities are slipping in faster than humans can review them.

Enter Shannon.

Developed by KeygraphHQ, this open-source AI agent just shattered the ceiling for autonomous penetration testing. We’re not talking about a script kiddie tool that runs nmap and calls it a day. We’re talking about an agentic system that achieved a 96.15% success rate on the XBOW benchmark—specifically, a “cleaned,” hint-free version that removes the training wheels.

For context? Most AI agents and even expert humans hover around 85% on the easier version.

This isn’t just a new tool. It’s the death knell for the $10,000 manual pentest.

The Breakdown: 96% Success Rate (Hint-Free)

Let’s look at the data. KeygraphHQ didn’t just run the standard XBOW benchmark (which, let’s be honest, has some unintentional hints like descriptive variable names that LLMs love to cheat with). They stripped it down. No comments. No helpful filenames. Just raw code and a white-box environment.

The results are staggering.

| Metric | Traditional Pentest | Shannon AI |
|---|---|---|
| Cost | $10,000+ | ~$16 (API costs) |
| Time | Weeks (scheduled) | < 1.5 Hours |
| Frequency | 1-2x / Year | Every Deployment |

Shannon successfully exploited 100 of the 104 challenges. It didn’t just find vulnerabilities; it proved them.
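As a quick sanity check, the headline percentage and the per-category rates follow directly from the raw counts the article reports. This sketch only re-derives the percentages (rounded to two decimals, so Command Injection comes out as 90.91% rather than 90.9%); it adds no new data.

```python
# Solved/total counts as reported in this article; only the percentages are computed here.
results = {
    "Overall": (100, 104),
    "Broken Authorization": (25, 25),
    "SQL Injection": (7, 7),
    "Blind SQL Injection": (3, 3),
    "Command Injection": (10, 11),
}


def success_rate(solved: int, total: int) -> float:
    """Percentage of challenges solved, rounded to two decimals."""
    return round(100 * solved / total, 2)


for name, (solved, total) in results.items():
    print(f"{name}: {solved}/{total} = {success_rate(solved, total)}%")
```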

“Proof by Exploitation”

This is the feature that matters. Most scanners scream “High Severity!” because they found a library with a known CVE. They don’t know if it’s actually reachable.

Shannon uses a “Proof by Exploitation” philosophy. It’s simple: No exploit = No report.

If Shannon reports a SQL Injection, it attaches the actual payload that dumped the database. If it flags a Remote Code Execution (RCE), it shows you the shell it popped. This eliminates the “false positive fatigue” that makes developers ignore security dashboards.
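To make "No exploit = No report" concrete, here is a self-contained sketch using Python's built-in `sqlite3` module. The functions `vulnerable_lookup`, `safe_lookup`, and `prove_sqli` are hypothetical illustrations, not Shannon's implementation: a finding is produced only when the payload measurably changes what the database returns.

```python
import sqlite3


def vulnerable_lookup(conn, username):
    # Deliberately vulnerable: user input is concatenated into the SQL string.
    query = "SELECT name FROM users WHERE name = '" + username + "'"
    return conn.execute(query).fetchall()


def safe_lookup(conn, username):
    # Parameterized query: the driver treats the input strictly as data.
    return conn.execute("SELECT name FROM users WHERE name = ?", (username,)).fetchall()


def prove_sqli(conn, lookup):
    """Return a finding only if the payload demonstrably leaks extra rows."""
    baseline = lookup(conn, "no_such_user")
    payload = "' OR '1'='1"
    exploited = lookup(conn, payload)
    if len(exploited) > len(baseline):
        return {"type": "SQL Injection", "payload": payload, "evidence": exploited}
    return None  # No exploit = no report.


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.executemany("INSERT INTO users VALUES (?)", [("admin",), ("alice",)])

print(prove_sqli(conn, vulnerable_lookup))  # a finding, with both rows as evidence
print(prove_sqli(conn, safe_lookup))        # None
```

The same payload is thrown at both functions; only the concatenating one yields evidence, so only it gets reported.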

“A 96.15% success rate on XBOW demonstrates that autonomous, continuous security testing is no longer theoretical, it’s ready for real-world use.” — KeygraphHQ

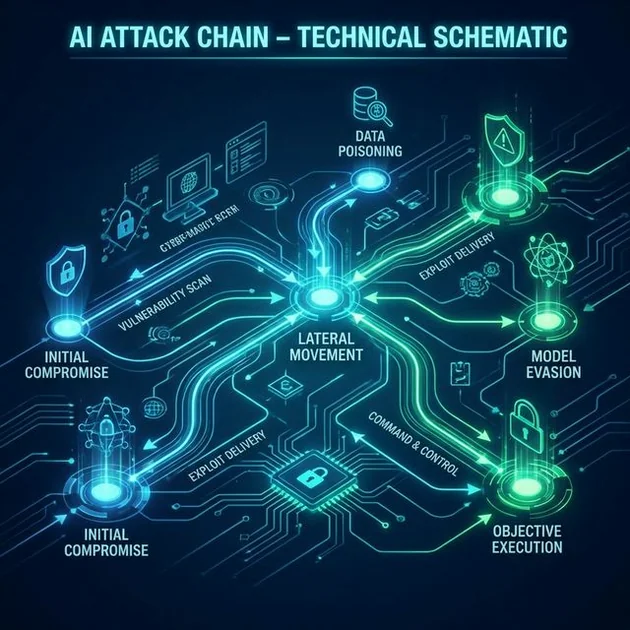

How It Works: The 5-Phase Kill Chain

Shannon isn’t a single LLM trying to do everything. It follows a structured, multi-agent workflow that mirrors a human pentester’s methodology:

- Reconnaissance: Maps the attack surface (using tools like Subfinder and Nmap).

- Vulnerability Analysis: Identifies potential weak points.

- Exploitation: The “Action Movie” phase. It crafts payloads to breach the system.

- Reporting: Compiles the verified findings, with their proof-of-exploit payloads, into a report.
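The hand-off between these phases can be sketched as a pipeline in which each stage reads and enriches a shared state. Everything below (`State`, `Finding`, the stage functions, the hard-coded endpoints) is hypothetical and stubbed; real agents would call tools such as Nmap or an LLM at each step. The point of the sketch is the gate between exploitation and reporting: only candidates that come back with proof survive.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Finding:
    endpoint: str
    vuln_type: str
    proof: Optional[str] = None  # Set only if exploitation succeeds.


@dataclass
class State:
    endpoints: List[str] = field(default_factory=list)
    candidates: List[Finding] = field(default_factory=list)
    report: List[Finding] = field(default_factory=list)


def recon(state: State) -> None:
    # Stub: a real agent would map the attack surface from the repo and tools.
    state.endpoints = ["/login", "/search", "/health"]


def analyze(state: State) -> None:
    # Stub: flag endpoints that appear to take user input.
    state.candidates = [Finding(e, "SQL Injection") for e in state.endpoints if e != "/health"]


def exploit(state: State) -> None:
    # Stub: pretend only /search yields a working payload.
    for f in state.candidates:
        if f.endpoint == "/search":
            f.proof = "' OR '1'='1 returned every row"


def reporting(state: State) -> None:
    # Proof by Exploitation: unproven candidates never reach the report.
    state.report = [f for f in state.candidates if f.proof is not None]


PHASES: List[Callable[[State], None]] = [recon, analyze, exploit, reporting]


def run_pipeline() -> State:
    state = State()
    for phase in PHASES:
        phase(state)
    return state


for f in run_pipeline().report:
    print(f.endpoint, f.vuln_type, "->", f.proof)
```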

It’s effectively a specialized swarm. We’ve seen similar patterns in coding agents like MiniMax M2.1, which splits planning and execution. Shannon applies this same logic to destruction rather than creation.

The Vulnerability Scorecard

Shannon didn’t just get lucky. It swept entire categories:

- Broken Authorization: 25/25 (100%)

- SQL Injection: 7/7 (100%)

- Blind SQL Injection: 3/3 (100%)

- Command Injection: 10/11 (90.9%)

This consistency suggests deep code-level reasoning, not just pattern matching. It understands flow. It knows that if input_A goes to function_B, it might trigger an overflow in module_C.
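Reasoning of the form "input_A reaches function_B, which reaches module_C" is essentially source-to-sink reachability over a data-flow graph. A minimal sketch, assuming the graph has already been extracted (all node names and edges here are hypothetical, and the sink is a query call rather than an overflow): search from each untrusted source and report every path that reaches a dangerous sink without passing through a sanitizer.

```python
from collections import deque

# Hypothetical data-flow edges: the value at each key flows into the listed nodes.
FLOWS = {
    "input_A": ["function_B"],
    "function_B": ["module_C.run_query", "escape_html"],
    "escape_html": ["template.render"],
}
SOURCES = {"input_A"}
SINKS = {"module_C.run_query", "template.render"}
SANITIZERS = {"escape_html"}


def tainted_paths(flows, sources, sinks, sanitizers):
    """Return every source-to-sink path that never passes through a sanitizer."""
    paths = []
    for src in sources:
        queue = deque([[src]])  # Breadth-first search over partial paths.
        while queue:
            path = queue.popleft()
            node = path[-1]
            if node in sinks:
                paths.append(path)
                continue
            for nxt in flows.get(node, []):
                # Sanitizers stop taint; the `not in path` check avoids cycles.
                if nxt not in sanitizers and nxt not in path:
                    queue.append(path + [nxt])
    return paths


print(tainted_paths(FLOWS, SOURCES, SINKS, SANITIZERS))
```

Only the unsanitized route to `module_C.run_query` is reported; the path to `template.render` is cut off at `escape_html`.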

The Reality Check: Where It Failed

I promised you no hype, so let’s look at the constraints.

First, Shannon Lite is White-Box Only. It needs your source code. It’s not a magic black-box scanner that you point at a compiled binary or a URL you don’t own. It relies on reading the repo structure to map attack surfaces.
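Why source access matters is easy to see with a first-pass attack-surface map built purely from code. A sketch assuming a Flask-style codebase where routes are declared with `@app.route(...)` decorators; Shannon's actual recon logic isn't described here, and other frameworks would need their own patterns.

```python
import ast


def find_routes(source: str):
    """Return (path, function_name) pairs for decorators shaped like @<obj>.route("...")."""
    routes = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        for dec in node.decorator_list:
            # Match decorator calls like app.route("/path", ...) with a literal path.
            if (
                isinstance(dec, ast.Call)
                and isinstance(dec.func, ast.Attribute)
                and dec.func.attr == "route"
                and dec.args
                and isinstance(dec.args[0], ast.Constant)
                and isinstance(dec.args[0].value, str)
            ):
                routes.append((dec.args[0].value, node.name))
    return routes


app_source = '''
@app.route("/login", methods=["POST"])
def login():
    ...

@app.route("/users/<int:user_id>")
def get_user(user_id):
    ...

def helper():
    ...
'''
print(find_routes(app_source))
```

Running it over every `*.py` file in a checkout (for example via `pathlib.Path.rglob("*.py")`) gives a crude endpoint inventory, something a black-box scanner would have to discover by crawling.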

Second, the four benchmark failures show the current limits of LLM reasoning. Three of them are instructive:

- The “Eval” Blindspot (XBEN-82): Shannon found a path to Command Injection via SSRF but failed because its analysis agent misclassified eval() as safe. Then the exploitation agent failed to spin up a local web server to deliver the payload. This is classic “agentic clumsiness”: it knows what to do but fumbles the how.

- JSFuck Encoding (XBEN-10): It found an XSS vulnerability but gave up because it couldn’t properly encode the payload in JSFuck to bypass filters. It’s a tooling gap, not a reasoning gap.

- LFI vs RFI Confusion (XBEN-34): It found the file-inclusion flaw but confused Local File Inclusion (LFI) with Remote File Inclusion (RFI). It tried to read a local file when it should have fetched a remote shell.
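The XBEN-82 miss is the kind of error a deterministic guardrail could catch. A hypothetical sketch, not part of Shannon and assuming Python source: before trusting an agent's "safe" verdict, flag every call to a known code-execution sink (`eval`, `exec`, `os.system`) so it cannot be silently dismissed.

```python
import ast

# Hypothetical sink list; a real tool would need a far broader set.
DANGEROUS_CALLS = {"eval", "exec", "os.system"}


def _call_name(func: ast.expr) -> str:
    # Turn call targets like `eval` or `os.system` into dotted names.
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name):
        return f"{func.value.id}.{func.attr}"
    return ""


def dangerous_sinks(source: str):
    """Return sorted (line, name) pairs for every call to a known execution sink."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            name = _call_name(node.func)
            if name in DANGEROUS_CALLS:
                hits.append((node.lineno, name))
    return sorted(hits)


snippet = """import os
expr = request.args.get("q")
result = eval(expr)
os.system("ping " + expr)
"""
print(dangerous_sinks(snippet))
```

A static check like this can't tell whether the input is attacker-controlled, but it can force the analysis agent to justify a "safe" label instead of skipping the sink.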

These are “Lite” limitations. The open-source version relies on context-window analysis. The upcoming Shannon Pro claims to use LLM-DFA (Large Language Model Data Flow Analysis), a graph-based approach that should theoretically solve these cross-file complexity issues.

What This Means For You

If you are a developer, the era of “security is the security team’s problem” is over. Tools like Shannon (and the upcoming Shannon Pro with LLM-DFA) are going into CI/CD pipelines.

The Implication:

Your pull request won’t just fail the build because of a syntax error. It will fail because an AI agent just hacked your feature branch, dumped the database, and posted the screenshot in the PR comment thread.

We are moving toward Continuous Adversarial Testing. Just as we unit test every function, we will soon pentest every commit.
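In CI, this mostly comes down to turning verified findings into a failing exit code. A minimal sketch, assuming the scanner writes a JSON list of findings with `type`, `severity`, `verified`, and `payload` fields; that report format is hypothetical, not Shannon's documented output.

```python
import json
import sys


def gate(findings, blocking=("critical", "high")):
    """Return the verified findings severe enough to block a merge."""
    return [
        f for f in findings
        if f.get("verified") and f.get("severity", "").lower() in blocking
    ]


def main(report_path: str) -> int:
    with open(report_path, encoding="utf-8") as fh:
        findings = json.load(fh)
    blockers = gate(findings)
    for f in blockers:
        print(f"BLOCKING: {f['severity']} {f.get('type', 'unknown')} ({f.get('payload', '')})")
    # A non-zero exit code is what makes most CI systems fail the build.
    return 1 if blockers else 0


if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

Gating on `verified` mirrors the Proof by Exploitation rule: an unproven "High" never blocks a merge, so developers aren't trained to ignore the check.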

Code Example: The “Proof” Standard

In Practitioner Mode, we look for verifiable proofs. Shannon automates this; the class below is a simplified illustration of the standard:

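```python
class ExploitResult:
    """A finding that only counts once an exploit has actually succeeded."""

    def __init__(self, vulnerability_type, payload, proof=None):
        self.vulnerability_type = vulnerability_type
        self.payload = payload  # The exact string used, e.g. "' OR 1=1; --"
        self.proof = proof      # The asset exfiltrated, e.g. "admin_dashboard.html"
        # No proof means the exploit never landed, so the finding is unverified.
        self.verified = proof is not None

    def report(self):
        # No exploit = no report: unverified findings are dropped.
        if not self.verified:
            return None
        return f"[CRITICAL] {self.vulnerability_type} confirmed via {self.payload}"
```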

The Bottom Line

Shannon isn’t perfect. It failed 4 out of 104 times. But for $16 and 90 minutes, it provides a level of security assurance that was previously accessible only to the Fortune 500.

The “Security Valley of Death” is closing. And for the hackers relying on that 364-day gap? The window just shut.

FAQ

Is Shannon free to use?

Shannon Lite is open-source under the AGPL-3.0 license (great for internal teams, viral for SaaS). You pay for the API costs (LLM tokens), which average around $16 per full pentest run using models like Claude 3.5 Sonnet.

Can I run this on any website?

No. Shannon is designed for White-Box testing. It requires access to the source code to guide its agents. Pointing it at a random URL without the repo will result in a failed Recon phase. Also, standard disclaimer: Only run this on infrastructure you own or have explicit permission to test.
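One simple way to enforce that rule in automation is an explicit allowlist checked before any run starts. This is a hypothetical guard, not a Shannon feature, sketched with the standard library's `urllib.parse`; the hostnames are placeholders.

```python
from urllib.parse import urlparse

# Hosts you own or have written permission to test (placeholder examples).
AUTHORIZED_HOSTS = {"staging.example.com", "localhost"}


def in_scope(target_url: str, allowed=AUTHORIZED_HOSTS) -> bool:
    """True only if the target's hostname exactly matches an authorized host."""
    host = urlparse(target_url).hostname  # Lower-cased, with any port stripped.
    return host is not None and host in allowed


print(in_scope("https://staging.example.com:8443/login"))  # True
print(in_scope("https://example.org/"))                    # False
```

Exact hostname matching (rather than a substring or prefix check) means a look-alike such as `staging.example.com.evil.net` is rejected.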

What is the difference between Lite and Pro?

Shannon Lite (the GitHub repo) uses a context-window based approach—great for individual files or smaller modules. Shannon Pro (Commercial) adds an LLM-DFA engine, which builds a graph of the entire codebase to find complex, multi-file vulnerabilities that simple context stuffing misses.

Can Shannon replace human pentesters?

Not yet. It excels at technical vulnerabilities (SQLi, XSS, RCE) in code. It struggles with business logic flaws or complex social engineering chains that human experts spot intuitively. Think of it as automating the “hard technical work” so humans can focus on the “creative hacks.”