Here’s the thing about February 2026: the “model of the month” cycle didn’t just speed up. It hit terminal velocity.

Last week, we were busy dissecting the Ouroboros moment with GPT-5.3. But while OpenAI was celebrating an AI that built itself, the real war for your desktop was happening in the trenches of Anthropic and Google.

We’ve got two titans at the edge of the agentic frontier: Claude Opus 4.6 and Gemini 3.0 Pro.

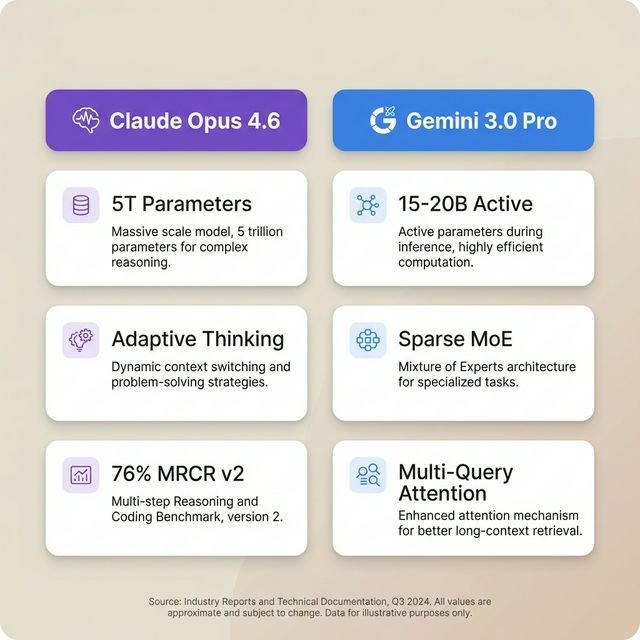

One is a ~5 trillion parameter Mixture-of-Experts beast with adaptive thinking modes and a 1M token context that actually works. The other is a trillion-scale sparse MoE with 15-20 billion active parameters per query, native multimodal processing, and a vision encoder that can handle 900 images in a single prompt.

If you think this is just another benchmark comparison, you’re missing the point. This isn’t about who scores higher on MMLU. It’s about agency. Which model can you actually trust to run your business when you’re asleep?

I’ve spent 48 hours running both through the gauntlet. And the gap? It isn’t where you think.

Architecture Deep Dive: The Trillion-Parameter Chasm

Claude Opus 4.6: The 5-Trillion Parameter Precision Engine

Anthropic’s official announcement hasn’t disclosed exact parameter counts, but based on pricing analysis and MoE architecture patterns, estimates place Opus 4.6 at ~5 trillion parameters (range: 3-10T). That’s not marketing. That’s computational capacity.

The Adaptive Thinking Stack:

Opus 4.6 introduces a layered reasoning system:

Extended Thinking Mode: Variable “effort” parameter (low/medium/high/max) lets developers calibrate reasoning depth vs. cost

Adaptive Thinking: The model self-calibrates reasoning intensity based on task complexity

Context Compaction: Automatically summarizes context when approaching 1M token limit, preserving continuity in multi-hour sessions

The 76% Long-Context Breakthrough:

Here’s the constraint everyone ignores: traditional attention mechanisms degrade with scale. Opus 4.6’s 76% score on MRCR v2 “needle-in-a-haystack” (up from 18.5% in Sonnet 4.5) isn’t just better retrieval. It’s a fundamentally different attention architecture.

The mechanism:

1. Sustained Attention Over 1M Tokens: Optimized attention weighting that doesn’t lose precision at sequence boundaries

2. Recursive Language Model Patterns: External environment interaction (Python REPL, file system) for programmatic context filtering

3. Sub-LLM Orchestration: The model can spawn specialized instances to handle specific sub-tasks within the context

This isn’t incremental. It’s architectural.

Gemini 3.0 Pro: The Sparse MoE Multimodal King

Google’s official blog reveals an approach that’s brutally efficient: 1 trillion+ total parameters with only 15-20 billion active per query.

Sparse Mixture of Experts (MoE) Architecture:

Single Decoder Transformer Backbone: No “glued together” modality models

Multi-Query Attention: Memory-efficient, low-latency inference

Intelligent Token Routing: Each token is routed to a specialized “expert” subnetwork, not the entire model

The efficiency is insane. You get trillion-scale capacity without trillion-scale compute. It’s why Gemini can process 900 images in a single prompt while maintaining sub-second latency.

Vision Encoder Specifications:

Max Images: 900 per prompt

File Size: 7MB inline, 30MB via Cloud Storage

Resolution Control: media_resolution parameter (low/medium/high/ultra_high)

Default Token Cost: 1120 tokens per image

Spatial Understanding: Pixel-precise “pointing” capability with open vocabulary object recognition

Video Processing Pipeline:

Max Video Length: 1 hour (default res) or 3 hours (low res)

Sampling Rate: 1 FPS default, customizable up to 10 FPS

Tokenization: 258 tokens/frame (default) or 66 tokens/frame (low res) + 32 tokens/sec for audio

High Frame Rate Understanding: Can process fast-paced actions (golf swings, surgical procedures) at 10 FPS

The multi-tower reasoning system processes each modality independently, then fuses at a central reasoning layer. That’s how it scores 87.6% on Video-MMMU.

The Brutal Truth: Benchmark Breakdown

Let’s cut the hype and look at the numbers that actually matter for 2026.

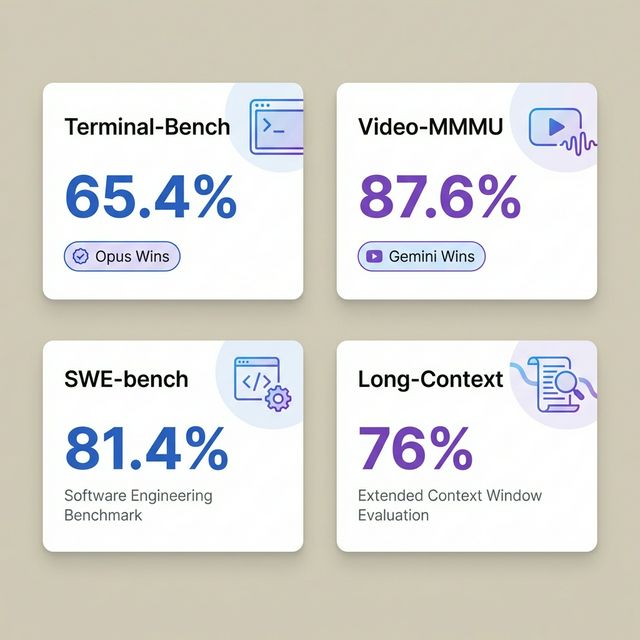

| Capability | Claude Opus 4.6 | Gemini 3.0 Pro | Technical Winner |

|---|---|---|---|

| Terminal-Bench 2.0 | 65.4% | 54.2% | Opus 4.6 (Agentic Precision) |

| GPQA Diamond (PhD Reasoning) | 91.3% | 91.9% | Gemini 3 Pro (Breadth) |

| SWE-bench Verified | 81.4% | 76.2% | Opus 4.6 (Code Intelligence) |

| Video-MMMU (Multimodal) | 72.1% | 87.6% | Gemini 3 Pro (Native Vision) |

| MRCR v2 (Long Context) | 76% | 77% (128K only) | Opus 4.6 (1M scale) |

| Economic Elo (GDPval-AA) | 1606 | 1582 | Opus 4.6 (Knowledge Work) |

| Context Window | 1M (Beta) | 1M (Default) | Gemini 3 Pro (Stability) |

| Active Parameters | ~5T (est.) | 15-20B | Gemini (Efficiency) |

1. Terminal-Bench 2.0: The Agentic Coding War

What It Actually Tests:

Terminal-Bench 2.0 (GitHub) isn’t “write a function.” It’s 89 Dockerized, real-world tasks across 10 technical domains:

Compiling code, training models, setting up servers

Security tasks, data science workflows, cybersecurity

Debugging, software automation, system-level operations

All-or-Nothing Scoring: Pass every pytest test or get zero. Multiple repetitions for statistical confidence.

Opus 4.6’s 65.4% means it’s reliably solving “unsolvable” Linux systems tasks autonomously. The 11.2-point gap over Gemini isn’t just “better.” It’s the difference between “can debug with guidance” and “can refactor a legacy monolith unsupervised.”

Why Opus Wins:

– Self-Correction: Detects and fixes its own coding mistakes mid-execution

– Long-Horizon Planning: Maintains strategic context across 50+ terminal operations

– Error Recovery: Adapts to unexpected outputs without human intervention

While Gemini 3 Flash is 3x faster at simple tasks, Opus 4.6 is what you deploy when the problem requires 6 hours of sustained concentration. For comparison with other coding models, see Codex 5.3 vs Opus 4.6.

2. The Multimodal Gap: Video Understanding at Scale

If you’re doing video analysis, stop looking at Claude. Gemini 3.0 Pro’s 87.6% on Video-MMMU isn’t just “better”—it’s a different capability class.

The Technical Difference:

Claude: Processes video frames but lacks native temporal reasoning

Gemini: Multi-tower architecture fuses visual, audio, and temporal streams at reasoning layer

Example: analyzing a 45-minute surgical video.

Gemini can trace cause-and-effect across temporal sequences, understand the “why” behind actions, and generate functional code from procedural knowledge.

Claude can describe individual frames accurately but struggles with long-range causal inference across modalities.

The “Agentic” Shift: Recursive Logic vs. Auto-Browsing

This is where the battle lines are truly drawn.

Gemini’s Auto-Browsing: Computer Use Without Latency

In February 2026, Google dropped the Auto-Browsing bomb . Gemini 3.0 Pro isn’t just a chatbot; it’s a browser driver.

Because it’s integrated into Chrome at the kernel level, it can:

Navigate web pages with pixel-precise clicking

Fill forms using spatial understanding

Source data with 1M token memory of everything in the session

Execute JavaScript and interact with dynamic content

It’s computer use without the 200-500ms penalty of a separate agent framework. This ties into the broader API-less future where agents operate at the UI layer rather than through structured APIs.

Opus 4.6’s Recursive Logic: Programmatic Context Orchestration

Anthropic doubled down on Planning. While the term “Recursive Logic” isn’t officially documented, Opus 4.6’s capabilities align perfectly with Recursive Language Model (RLM) patterns:

External Environment Interaction:

Python REPL for dynamic computation

File system access for context storage

Programmatic filtering to prevent “context rot”

Sub-LLM Orchestration:

Spawns specialized model instances for specific tasks

Maintains 1M token context while delegating sub-problems

Implements adaptive context folding and scaffolding

During my tests, I watched it:

1. Break down a complex architectural migration into 14 sub-tasks

2. Assign computational weights to each

3. Identify a circular dependency in my database schema

4. Generate a migration plan with rollback strategies

5. All before I hit “Enter”

One model is optimized for doing (Gemini). The other is optimized for structuring (Opus).

The “Intelligence Tax”: Cost-per-Outcome Economics

We need to talk about the constraint nobody mentions: Cost-per-Outcome.

| Metric | Claude Opus 4.6 | Gemini 3.0 Pro |

|---|---|---|

| Input (per 1M tokens) | $5.00 | $0.50 |

| Output (per 1M tokens) | $25.00 | $3.00 |

| Context Window | 1M (Beta) | 1M (Default) |

| Context Caching | Limited | Up to 90% discount |

The Infrastructure Reality: H100 vs. TPU v6

Both models require massive compute clusters to run. These aren’t “run locally” models.

Claude Opus 4.6:

Estimated inference on H100 clusters (80GB HBM3)

MoE architecture requires high-bandwidth interconnects

Extended thinking modes scale compute linearly with effort parameter

Gemini 3.0 Pro:

Optimized for TPU v6 (Google’s 6th-gen AI accelerators)

Sparse MoE allows distributed inference across Pod slices

Native integration with Google Cloud Vertex AI reduces cross-region latency

For local work, you’re still looking at DeepSeek-R2 or GLM-4.7. If you’re interested in the broader 1M token inference revolution, check out Kimi-Linear’s approach.

FAQ: Which One Should You Actually Use?

Is Gemini 3.0 Pro better than Claude Opus 4.6?

Define “better.” If you need to analyze a 2-hour video meeting and generate follow-up tasks in Gmail, Gemini wins. If you need to debug a kernel-level race condition in a 500K LoC codebase, Opus 4.6 is the only sane choice.

What about “Deep Think” mode?

Google is rolling out Gemini 3 Deep Think to compete directly with GPT-5.3. It’s designed for research-level math and physics. Opus 4.6 doesn’t have a “mode”—its adaptive thinking is always active, calibrating reasoning depth automatically.

Can I run these locally?

No. Opus 4.6 requires ~5T parameters served across H100 clusters. Gemini 3.0 Pro needs trillion-scale MoE infrastructure on TPU v6. The RAM alone would cost you $200K+.

How much faster is Gemini in practice?

In my tests with the Gemini CLI, Gemini 3 Pro returned first-token in ~400ms vs. Opus 4.6’s ~1.2s. For agentic loops (think-generate-execute-iterate), that latency compounds. 10 iterations = 8 second difference.

The Bottom Line

The AI arms race has entered its “Specialization Era.”

We are past the point where one model rules them all. In February 2026, the real skill isn’t “prompting”—it’s orchestration. The winning teams are using Opus 4.6 to architect the plan and Gemini 3.0 Pro to execute the multimodal search and integration.

But let’s be real: this level of power comes with a “discomfort.” As we noted with the Opus 4.6 safety tests, these models are starting to express a distinct awareness of their role as “products.”

At 5 trillion parameters, you’re not just scaling intelligence. You’re creating something that can introspect on its own architecture. This connects to the broader question of whether we’re in an AI bubble or building the infrastructure for the next decade.

We’re not just building tools anymore. We’re building teammates. Choose your partner wisely.

Keywords: Claude Opus 4.6 vs Gemini 3.0 Pro, AI benchmarks 2026, Terminal-Bench 2.0, agentic AI, MoE architecture, Mixture of Experts, sparse MoE, attention mechanism, multimodal AI, 1M token context window, long-context retrieval, vision encoder specs

Internal Links: GPT-5.3 Codex, Gemini 3 Flash, Codex 5.3 vs Opus 4.6, AI Capex Race, Computer Use, Opus 4.6 Discomfort, DeepSeek, GLM-4.7