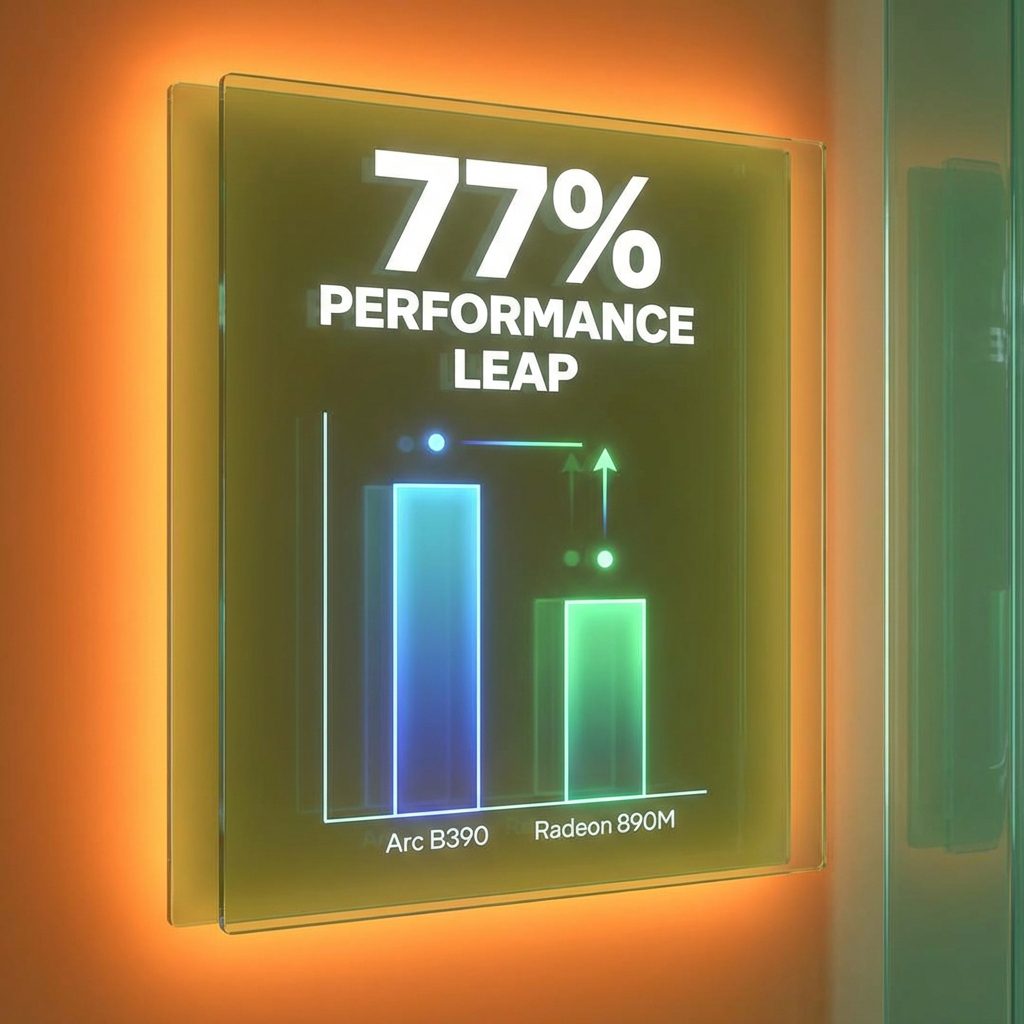

Intel just did something nobody expected. Not another incremental bump. Not a modest 15-20% improvement. The Arc B390 integrated GPU in Panther Lake Core Ultra Series 3 processors is delivering performance gains that make AMD’s Radeon 890M look like it’s standing still.

We’re talking 77% faster than Intel’s own Lunar Lake Arc 140V from just months ago. Over 80% faster than AMD’s flagship Radeon 890M in some games. Performance that rivals – and sometimes beats – NVIDIA’s RTX 4050 laptop GPU. In an integrated graphics chip.

This isn’t just wild. It’s a fundamental shift in what “integrated graphics” means. And if you’ve been following the AI chip war between AMD and NVIDIA, you know Intel’s been the underdog for years. Not anymore.

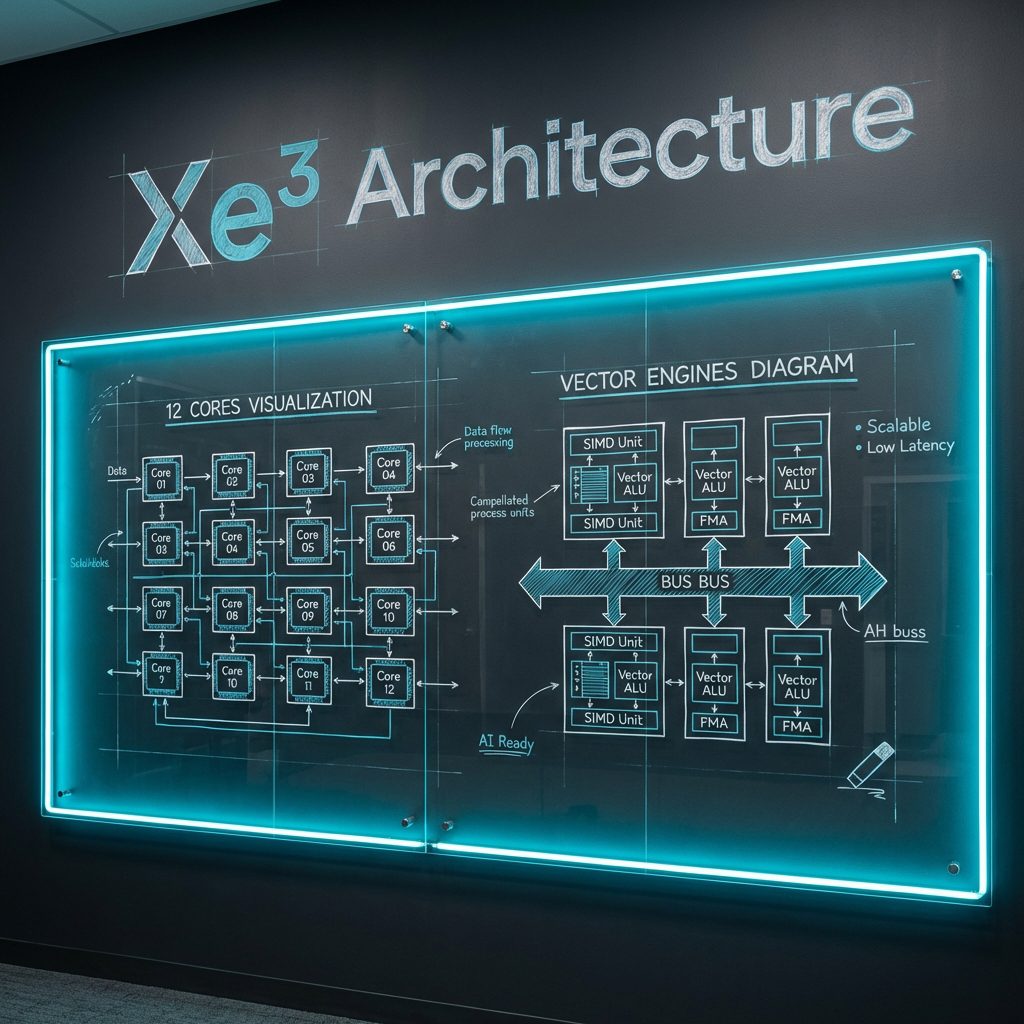

The Xe3 Architecture: Intel’s Third-Generation Gamble

The Arc B390 is built on Intel’s Xe3-LPG (Low Power Graphics) architecture, and the specs tell a story of aggressive scaling. 12 Xe3 cores. 96 Vector Engines. 96 XMX AI engines. 12 ray tracing units. 16 MB of dedicated L2 cache. A boost clock of 2.5 GHz.

Compare that to the Lunar Lake Arc 140V: 8 Xe2 cores, 8 MB L2 cache, 2.05 GHz max clock. The B390 isn’t just a refresh – it’s a 50% increase in core count, double the cache, and a 22% clock speed bump.

But here’s what makes Xe3 different from previous Intel iGPU attempts: the architecture is fundamentally rethought for efficiency. Each Xe3 core contains 8x 512-bit Vector Engines and 8x 2048-bit XMX Engines, with 192 KB of shared L1 cache/SLM (Shared Local Memory). Intel claims up to 70% better performance per core compared to Xe1 (Alchemist), and 50% better power efficiency.
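The spec comparison above can be checked with a little arithmetic. The sketch below recomputes the generational ratios and derives a rough theoretical FP32 peak for each chip. It assumes each 512-bit Vector Engine holds 16 FP32 lanes (512 / 32) and that every lane retires one fused multiply-add, counted as 2 FLOPs, per clock. It also assumes the Arc 140V uses the same layout of 8 × 512-bit Vector Engines per Xe2 core, which the text above does not state. `GpuSpec` and `peak_fp32_tflops` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GpuSpec:
    name: str
    cores: int                # Xe cores
    l2_mb: int                # L2 cache in MB
    boost_ghz: float          # max graphics clock in GHz
    ves_per_core: int = 8     # Vector Engines per Xe core (assumed for both chips)
    ve_width_bits: int = 512  # width of each Vector Engine

def peak_fp32_tflops(gpu: GpuSpec) -> float:
    """Rough theoretical FP32 peak: lanes x 2 FLOPs (one FMA) x clock."""
    lanes = gpu.cores * gpu.ves_per_core * (gpu.ve_width_bits // 32)
    return lanes * 2 * gpu.boost_ghz / 1000  # GFLOPS -> TFLOPS

b390 = GpuSpec("Arc B390 (Xe3)", cores=12, l2_mb=16, boost_ghz=2.5)
a140v = GpuSpec("Arc 140V (Xe2)", cores=8, l2_mb=8, boost_ghz=2.05)

print(f"Core count: +{b390.cores / a140v.cores - 1:.0%}")
print(f"L2 cache:   x{b390.l2_mb / a140v.l2_mb:.1f}")
print(f"Clock:      +{b390.boost_ghz / a140v.boost_ghz - 1:.0%}")
for gpu in (b390, a140v):
    print(f"{gpu.name}: ~{peak_fp32_tflops(gpu):.2f} TFLOPS FP32 (theoretical)")
```

Under these assumptions the B390 lands around 7.7 TFLOPS against roughly 4.2 for the 140V. Theoretical peaks ignore memory bandwidth, power limits and driver efficiency, so treat them as a ceiling rather than a prediction of game performance.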

The real breakthrough? Intel moved from SIMD8 to native SIMD16 engines in Xe2, then optimized work distribution in Xe3. Less software overhead. Better GPU utilization. Faster ray tracing with 3 traversal pipelines per RT unit (up from 1 in Xe1).
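Why native SIMD16 cuts overhead can be shown with a deliberately simplified issue-count model. This is a toy, not Intel's scheduler. It assumes a group of work items is padded up to a whole multiple of the SIMD width and that each pass over the lanes costs one instruction issue. `issue_slots` and `lane_utilization` are hypothetical helpers.

```python
import math

def issue_slots(work_items: int, simd_width: int) -> int:
    """Instruction issues needed to cover `work_items` lanes at a given SIMD width."""
    return math.ceil(work_items / simd_width)

def lane_utilization(work_items: int, simd_width: int) -> float:
    """Fraction of issued lanes doing useful work (the remainder is padding)."""
    return work_items / (issue_slots(work_items, simd_width) * simd_width)

for n in (1024, 20):
    for width in (8, 16):
        print(f"{n:5d} items @ SIMD{width:<2d}: "
              f"{issue_slots(n, width):4d} issues, "
              f"{lane_utilization(n, width):.0%} lanes busy")
```

In this model, wider engines halve the instruction count for large, uniform workloads but leave more lanes idle on small or divergent ones. That is one reason work distribution matters as much as raw SIMD width.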

This is Intel learning from its discrete GPU mistakes and applying those lessons to integrated graphics. The Battlemage discrete GPUs (Xe2-HPG) taught Intel how to build efficient ray tracing hardware. Xe3-LPG is that knowledge, shrunk down and power-optimized for laptops.

The Benchmarks: Where Intel Crushes AMD (And Where It Doesn’t)

Arc B390 delivers 77% more performance than its predecessor and crushes AMD’s Radeon 890M

Let’s talk numbers. Real numbers, from actual testing at CES 2026 and early review units. Not marketing slides.

Cyberpunk 2077 (1080p, Medium, No Upscaling):

– Arc B390: 64 FPS average

– AMD Radeon 890M: ~42 FPS

– Arc 140V (Lunar Lake): ~38 FPS

That’s a 52% lead over AMD’s best. With XeSS Balanced upscaling (no frame gen), the B390 hits 99 FPS. Turn on XeSS 3 Multi-Frame Generation, and you’re looking at 200+ FPS. More on that later.

3DMark Time Spy (Synthetic):

– Arc B390: 7,192 points

– AMD Radeon 890M: ~3,800 points

– Arc 140V: ~3,900 points

Nearly double the performance of both competitors. This isn’t a margin of error. This is a different performance class entirely.
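Every percentage lead in this section comes from one ratio. This minimal sketch uses the numbers reported above and treats the approximate "~" figures as exact. It shows where "52%" and "nearly double" come from. `uplift` is a hypothetical helper name.

```python
def uplift(ours: float, theirs: float) -> float:
    """Relative lead of `ours` over `theirs` (0.52 means 52% faster)."""
    return ours / theirs - 1

# Figures as reported above; "~" values are approximate and treated as exact here.
results = {
    "Cyberpunk 2077 (FPS)": {"Arc B390": 64, "Radeon 890M": 42, "Arc 140V": 38},
    "3DMark Time Spy (pts)": {"Arc B390": 7192, "Radeon 890M": 3800, "Arc 140V": 3900},
}

for test, scores in results.items():
    b390 = scores["Arc B390"]
    for rival in ("Radeon 890M", "Arc 140V"):
        print(f"{test}: B390 vs {rival}: +{uplift(b390, scores[rival]):.0%}")
```

By the same arithmetic, the Cyberpunk lead over the Arc 140V works out to about 68%, and the Time Spy leads to roughly 84-89%. The size of the gap depends heavily on the workload.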

Baldur’s Gate 3 (1200p, High Preset, XeSS Quality):

– Arc B390: 68 FPS

– NVIDIA RTX 4050 Laptop (60W): ~68 FPS

Yes, you read that right. An integrated GPU matching a discrete laptop GPU in a demanding RPG.

But here’s where it gets interesting: power consumption. The Core Ultra X9 388H package that houses the Arc B390 has a rated maximum TDP of 80W, but in sustained gaming the iGPU itself draws around 38-40W. The AMD Radeon 890M? A 15W TDP, with typical gaming loads at 10-20W.

Intel’s trading power for performance. The B390 consumes 2-3x more power than AMD’s solution. In a laptop, that means shorter battery life and more heat. But it also means you can actually play AAA games at 1080p without a discrete GPU. That’s the trade-off.
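A rough frames-per-watt estimate puts the trade-off into a single number. This sketch pairs the Cyberpunk 2077 averages above with the power figures quoted in this section. That pairing is an assumption, because no measured 890M power draw is given for that specific run. The 890M is therefore shown at both ends of its 10-20W range, and the B390 at the midpoint of its 38-40W draw.

```python
def fps_per_watt(fps: float, watts: float) -> float:
    """Frames per second delivered per watt of GPU power."""
    return fps / watts

B390_FPS, B390_WATTS = 64, 39   # Cyberpunk average; midpoint of the 38-40W iGPU draw
R890M_FPS = 42                  # Cyberpunk average for the Radeon 890M
R890M_WATT_RANGE = (10, 20)     # typical gaming load quoted above (assumed for this run)

print(f"Arc B390:    {fps_per_watt(B390_FPS, B390_WATTS):.2f} FPS/W")
for watts in R890M_WATT_RANGE:
    print(f"Radeon 890M: {fps_per_watt(R890M_FPS, watts):.2f} FPS/W at {watts} W")
```

Under these assumptions, the 890M delivers more frames per watt even at the top of its range, while the B390 delivers more frames in absolute terms. That is the trade-off described above.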

XeSS 3 Multi-Frame Generation: The AI Multiplier

XeSS 3 generates up to 3 AI-interpolated frames between real rendered frames

This is where Intel’s iGPU strategy gets genuinely clever. XeSS 3 with Multi-Frame Generation (MFG) isn’t just upscaling – it’s AI-powered frame interpolation that inserts up to three generated frames between each pair of real rendered frames. Think of it like NVIDIA’s DLSS 3, but with up to a 4x multiplier instead of 2x.

Here’s how it works:

1. The GPU renders two primary frames

2. An optical flow network reads motion vectors and depth buffers

3. XeSS generates up to 3 interpolated frames using a single-pass optical flow calculation

4. Frames are upscaled from lower resolution to target resolution

5. Frame pacing synchronizes everything to the display
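The core idea behind steps 2, 3 and 5 fits in a toy model. This is a minimal sketch, not XeSS. It tracks a single object whose screen position is known in two real frames, treats the difference as that object's motion vector, and places it at evenly spaced fractions of that vector for each generated frame. Real frame generation estimates motion per pixel and has to handle occlusion, which this ignores. `interpolate_positions` and `display_times_ms` are hypothetical names.

```python
def interpolate_positions(x0: float, x1: float, generated: int) -> list[float]:
    """Positions for `generated` in-between frames, assuming linear motion from x0 to x1."""
    motion = x1 - x0        # motion vector between the two real frames
    steps = generated + 1   # intervals between consecutive displayed frames
    return [x0 + motion * k / steps for k in range(1, steps)]

def display_times_ms(base_fps: float, generated: int) -> list[float]:
    """Evenly paced timestamps for one real frame plus its generated frames."""
    interval = 1000 / base_fps
    steps = generated + 1
    return [interval * k / steps for k in range(steps)]

# Object moves from x=100 to x=180 between two real frames rendered at 50 FPS.
print(interpolate_positions(100, 180, generated=3))  # → [120.0, 140.0, 160.0]
print(display_times_ms(50, generated=3))             # → [0.0, 5.0, 10.0, 15.0]
```

Even pacing matters as much as the interpolated content. Four frames bunched at the start of a 20 ms window would look no smoother than one.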

The result? Up to a 4x frame rate multiplier. If the B390 renders 50 FPS natively, XeSS 3 can deliver up to 200 FPS to your display.

But – and this is critical – frame generation only works well when your base frame rate is already high enough. If you’re rendering at 30 FPS, generating 3 extra frames won’t save you. The input latency and potential artifacts make it feel worse, not better.

XeSS 3 is designed for scenarios where the B390 is already hitting 50-60 FPS natively. In those cases, MFG can push you to 120-240 FPS for buttery-smooth gameplay on high-refresh displays.
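The multiplier math, and the reason a low base frame rate hurts, can be sketched in a few lines. The model assumes interpolation must hold back each real frame until the next one is rendered, which adds at least one base frame interval of delay. Real pipelines add more (generation time, display queueing) and may hide some of it with latency-reduction features, so treat these figures as lower bounds. `mfg_summary` is a hypothetical helper.

```python
def mfg_summary(base_fps: float, multiplier: int = 4) -> dict[str, float]:
    """Displayed frame rate and a lower bound on the extra latency from interpolation."""
    base_frame_ms = 1000 / base_fps
    return {
        "base_fps": base_fps,
        "displayed_fps": base_fps * multiplier,
        "base_frame_ms": base_frame_ms,          # input is still sampled at this interval
        "min_added_latency_ms": base_frame_ms,   # must wait for the next real frame
    }

for fps in (30, 50, 60):
    s = mfg_summary(fps)
    print(f"{fps} FPS base -> {s['displayed_fps']:.0f} FPS shown, "
          f"input sampled every {s['base_frame_ms']:.1f} ms, "
          f"at least {s['min_added_latency_ms']:.1f} ms added delay")
```

The displayed rate quadruples at every base rate. The game still responds at the base rate, though, and the minimum added delay falls from about 33 ms at 30 FPS to about 17 ms at 60 FPS. That is why a 50-60 FPS starting point matters.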

And here’s the kicker: XeSS 3 works on older Intel GPUs too. Meteor Lake, Lunar Lake, Arrow Lake, even Alchemist discrete GPUs. Intel backported it via driver updates. Games that support XeSS 2 frame generation don’t need updates – you can enable XeSS 3 via an override in Intel Graphics Software.

AMD’s RDNA 3.5 Problem: Stuck Until 2029

AMD’s Radeon 890M is based on RDNA 3.5 (also called RDNA 3+), launched in July 2024. It’s a solid iGPU. 16 Compute Units, 1024 shading units, 2900 MHz boost clock, 15W TDP. It can handle 1080p AAA gaming at medium/low settings, and it’s competitive with an NVIDIA GTX 1650 or GTX 1070 in synthetic benchmarks.

But here’s AMD’s strategic problem: they’re planning to use RDNA 3.5 for mainstream and low-end APUs until at least 2029. RDNA 5 is reserved for premium “Halo-tier” SoCs like the rumored Zen 6-based Medusa Halo APU, which won’t arrive until 2027 at the earliest.

That means for the next 3+ years, AMD’s mainstream laptop iGPUs will be based on an architecture from 2024. Intel, meanwhile, is already on Xe3 in early 2026, with Xe4 (Nova Lake) and Xe3P architectures planned for late 2026 and beyond.

AMD’s betting that RDNA 3.5 is “good enough” for most users. And they might be right – the 890M is power-efficient, thermally manageable, and capable for its class. But Intel’s betting that users want more performance, even if it costs more power.

The market will decide who’s right. But right now, Intel has the performance crown for integrated graphics, and AMD doesn’t have an answer until 2027 at the earliest. This connects directly to the broader chip war we’ve been tracking – performance leadership matters.

The Driver Problem: Still Intel’s Achilles Heel

Let’s be honest: Intel’s graphics drivers have been a mess for years. The Arc discrete GPU launch was plagued by day-one crashes, game compatibility issues, and performance inconsistencies. I’ve tested enough Intel GPUs to know this isn’t hyperbole.

Intel claims they’ve “re-architected their entire software stack” for Arc and Core Ultra iGPUs. They engaged with 300 developers on pre-release titles. They supported 50 day-zero driver releases in the past year. OpenGL support, historically Intel’s weakest area, has significantly improved.

But the latest drivers for Core Ultra Series 3 and Arc GPUs still list known issues:

Color corruption in Ghost of Tsushima

Application crashes in The Finals, No Man’s Sky, Star Citizen, and Mount & Blade II: Bannerlord

Intermittent graphical corruption in Call of Duty: Black Ops 6

Benchmark instability in PugetBench for DaVinci Resolve Studio

These aren’t obscure indie games. These are popular, high-profile titles. And Intel’s drivers still can’t handle them reliably. That’s a problem.

Compare that to AMD’s RDNA 3 and RDNA 3.5 iGPU drivers, which have been stable and mature since the RDNA 3-based Radeon 780M launched in 2023. AMD has years of experience with integrated graphics. Intel is still learning.

If you’re buying a Panther Lake laptop for gaming, you’re betting that Intel will fix these driver issues over the next 6-12 months. That’s a gamble. A reasonable one, given Intel’s recent progress, but a gamble nonetheless. As with early AI coding tools, early adoption has trade-offs.

The Historical Context: From Intel HD Graphics to Arc B390

To understand how wild the Arc B390 is, you need to know where Intel came from.

In 2010, Intel launched Intel HD Graphics with the Westmere processors. This was the first time Intel put the GPU in the same package as the CPU, though still on a separate die; Sandy Bridge moved it onto the CPU die itself in 2011. It was slow. Really slow. But it was power-efficient and “good enough” for office work and video playback.

For the next decade, Intel’s integrated graphics were a punchline. Intel HD 4000 (2012), Intel Iris Pro 5200 (2013), Intel UHD Graphics (2017) – each generation brought modest improvements, but they were never competitive for gaming. Anyone who tried gaming on Intel HD Graphics knows the pain.

Then in 2020, Intel launched Xe (Gen12) with Tiger Lake. This was the first real attempt at competitive integrated graphics. Intel Iris Xe could handle light gaming – indie titles, older AAA games at low settings, emulators.

Xe2 (Battlemage/Lunar Lake, 2024) was a bigger leap. The Arc 140V could play modern games at 1080p low/medium settings. It was competitive with AMD’s Radeon 780M and even traded blows with the 890M.

And now Xe3 (Panther Lake, 2026) is delivering performance that rivals discrete GPUs from 2-3 years ago.

This is a 16-year journey from “barely functional” to “actually competitive.” Intel’s integrated graphics evolution is a case study in persistent iteration and learning from failure. It’s the same pattern we’ve seen in AI model development – incremental progress, then sudden breakthroughs.

The Bottom Line: Intel Just Changed the Game

The Arc B390 is the first integrated GPU that makes me question whether most laptop buyers need a discrete GPU at all. Seriously.

If you’re playing competitive games (League, Valorant, CS2), the B390 with XeSS 3 can deliver 120+ FPS at 1080p. If you’re playing AAA single-player games, you can hit 60 FPS at 1080p medium settings without upscaling, or close to 100 FPS with XeSS Balanced.

Yes, it consumes more power than AMD’s solution. Yes, Intel’s drivers are still a work in progress. Yes, you’ll get better battery life with a Radeon 890M.

But if your priority is gaming performance in a thin-and-light laptop, the Arc B390 is the best integrated GPU ever made. By a significant margin.

AMD’s been coasting on RDNA 3.5 for too long. Intel just forced them to respond. And that’s good for everyone.

The real question is: what happens when AMD finally launches RDNA 5 in 2027? Will Intel have Xe4 or Xe5 ready to counter? Or will AMD leapfrog them again?

For now, Intel owns the integrated graphics performance crown. Enjoy it while it lasts.

FAQ

Can the Intel Arc B390 replace a discrete GPU for gaming?

For 1080p gaming at medium/high settings, yes. The B390 delivers performance comparable to an NVIDIA RTX 4050 laptop GPU in many titles. But it consumes 38-40W under load, so battery life will suffer. If you need 1440p or 4K gaming, you still need a discrete GPU.

How does XeSS 3 Multi-Frame Generation compare to NVIDIA DLSS 3?

Both use AI to generate interpolated frames between real rendered frames. NVIDIA’s DLSS 3 can generate 1 frame per real frame (2x multiplier). Intel’s XeSS 3 can generate up to 3 frames per real frame (4x multiplier). However, DLSS 3 is more mature and has better image quality in most games. XeSS 3 is newer and still has occasional artifacts.

Is the Arc B390 better than AMD’s Radeon 890M?

For raw gaming performance, yes – the B390 is 50-80% faster depending on the game. But the Radeon 890M consumes significantly less power (15W vs 40W), which means better battery life and less heat. If you prioritize battery life, go AMD. If you prioritize performance, go Intel.

Will Intel’s driver issues get fixed?

Intel has made significant progress on driver stability over the past year, but known issues still exist in popular games like Ghost of Tsushima and Call of Duty: Black Ops 6. Based on Intel’s track record, these will likely be fixed within 6-12 months. But if you need rock-solid stability today, AMD’s drivers are more mature.

What’s the power consumption difference between Arc B390 and Radeon 890M?

The Arc B390 draws 38-40W under sustained gaming loads, within a maximum package TDP of 80W. The Radeon 890M has a 15W TDP, with typical gaming loads at 10-20W. Intel’s solution consumes 2-3x more power, which impacts battery life and thermals in laptops.