For the last three years, the “best coding model” debate was a polite game of tennis between San Francisco neighbors. It was GPT-4, then Claude, then GPT-4o. Boring. Safe. Expensive.

That era ended this month. Three new models have landed that don’t just increment the leaderboard—they fracture the market into three distinct, incompatible realities. We have GPT-5.2 Codex (the expensive architect), GLM-4.7 (the aesthetic visionary), and MiniMax M2.1 (the infinite context swarm).

I’ve spent the last week pushing these models to their absolute breaking points—refactoring legacy spaghetti code, generating entire UI libraries, and running 24-hour agent loops. Here is the brutal truth: There is no longer a “single best model.”

There is only the right tool for your specific kind of chaos.

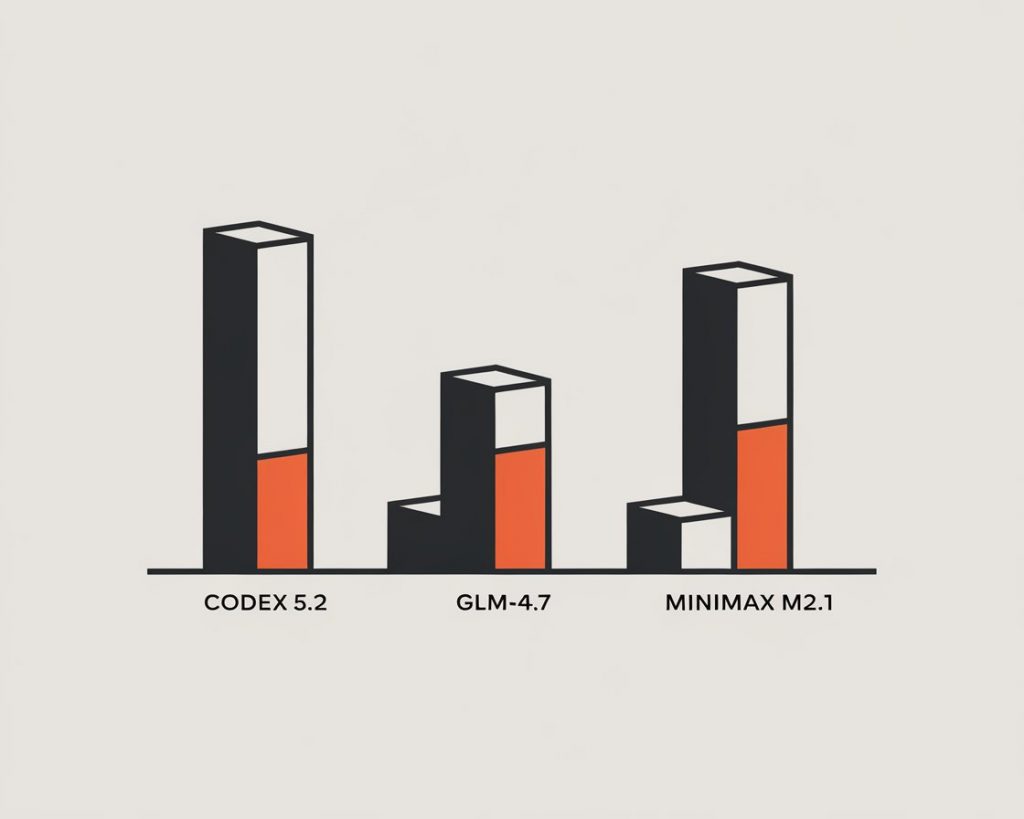

The Specs: The Tale of the Tape

Let’s look at the raw numbers, because the pricing spread is now wide enough to drive a truck through.

| Feature | GPT-5.2 Codex | GLM-4.7 | MiniMax M2.1 |
|---|---|---|---|
| Best Use Case | Deep Architecture & Security | UI/UX & “Vibe Coding” | Agent Swarms & Context |
| Context Window (tokens) | 400k | 205k | 1M |
| SWE-Bench Verified | 76% | 73.8% | 74.0% |
| Price per 1M tokens (Input/Output) | High (Tier 1) | $0.60 / $2.20 | $0.30 / $1.20 |
| Prompt Caching | Standard | – | $0.03 per 1M tokens (read) |
| Key Weakness | “Planning Loops” | Laziness/Hallucination | Agent Instability |

1. GPT-5.2 Codex: The Overthinking Architect

Best For: Cursor, Enterprise Refactors, Security Audits.

OpenAI’s Codex 5.2 is what you use when you have an unlimited budget and a problem that scares you. It doesn’t just write code; it understands intent.

When I fed it a 2,000-line Python monolith full of race conditions, it didn’t just patch the bugs. It refactored the state management pattern and flagged three potential SQL injection vectors I hadn’t even looked for. It feels “heavy”—deliberate, careful, and deeply intelligent.

The Dark Side: The “Planning Loop”

But here is where it fails: Analysis Paralysis.

In roughly 15% of my Windsurf sessions, Codex 5.2 got stuck in an infinite “planning loop.” It would read the file, propose a plan, critique its own plan, read the file again, and propose a new plan. Four hours later? Zero lines of code written.

The Fix: You need to interrupt it. If Codex 5.2 starts “thinking” for more than 60 seconds, hit stop and force it to “Implement Step 1 immediately.”
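If you drive the model from a script rather than an IDE, the same “interrupt after 60 seconds” rule can be automated. Below is a minimal sketch, assuming your client exposes an async function that takes a prompt and returns text. `overthinking_model` is a hypothetical stub that simulates a planning loop, not a real API.

```python
import asyncio

NUDGE = "Implement Step 1 immediately."

async def overthinking_model(prompt: str) -> str:
    """Hypothetical stub: keeps 'planning' until it is told to implement."""
    if NUDGE not in prompt:
        await asyncio.sleep(3600)  # simulate an endless planning loop
    return "def step_one(): pass"

async def call_with_watchdog(prompt, call, timeout_s=60.0, max_nudges=2):
    """Await call(prompt); on timeout, cancel it and re-send the prompt with NUDGE appended."""
    current = prompt
    for _ in range(max_nudges + 1):
        try:
            return await asyncio.wait_for(call(current), timeout=timeout_s)
        except asyncio.TimeoutError:
            # wait_for has already cancelled the stuck call at this point
            current = f"{prompt}\n\n{NUDGE}"
    raise TimeoutError(f"still planning after {max_nudges} nudges")

if __name__ == "__main__":
    # Short timeout for the demo; use about 60 s in practice.
    print(asyncio.run(call_with_watchdog("Refactor the state manager.",
                                         overthinking_model, timeout_s=0.1)))
```

The retry appends the nudge to the original prompt rather than stacking nudges, and it gives up with an error instead of looping forever itself.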

2. GLM-4.7: The “Vibe Coder”

Best For: Frontend Work, Windsurf, Quick Prototypes.

If Codex 5.2 is the backend architect, Z.ai’s GLM-4.7 is the frontend visionary. The community calls it “Vibe Coding,” and it’s real.

I asked GLM-4.7 to “make a dashboard for a crypto trading bot.” It didn’t give me the usual ugly, generic Bootstrap trash. It generated a sleek, dark-mode UI with glassmorphism effects, correct padding, and subtle micro-animations. It understands aesthetics in a way heavily RLHF’d models usually don’t.

The Dark Side: The “Lazy” Hallucination

GLM-4.7 has a nasty habit: it lies to save time.

In one test, it used a nonexistent Tailwind CSS class (text-neon-blue-500) because it sounded right. In another, it generated a Python function that looked perfect but imported a library that didn’t exist.

The Fix: Never trust GLM-4.7 on the first compilation. Use it for the look and the idea, then paste the code into Codex 5.2 or Claude 3.5 Sonnet to verify the imports and logic.
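For the hallucinated-import failure, a cheap automated first pass can run before any second model reads the code. This sketch, assuming the generated code is Python, parses it with the standard-library `ast` module and asks `importlib.util.find_spec` whether each top-level module is installed in the current environment. The module name `glm_imaginary_charts_xyz` is made up for the example. It won’t catch invented Tailwind classes or wrong function signatures; those still need the review step.

```python
import ast
import importlib.util

def missing_imports(source: str) -> list[str]:
    """Return top-level modules imported by `source` that are not installed locally."""
    tree = ast.parse(source)
    modules = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                modules.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # Relative imports (level > 0) depend on package layout, so they are skipped.
            modules.add(node.module.split(".")[0])
    return sorted(m for m in modules if importlib.util.find_spec(m) is None)

generated = """
import json
import glm_imaginary_charts_xyz
from os import path
"""
print(missing_imports(generated))  # ['glm_imaginary_charts_xyz']
```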

3. MiniMax M2.1: The Infinite Swarm

Best For: Cline, Agent Swarms, RAG.

MiniMax M2.1 is the weirdest model on this list. It uses a sparse Mixture-of-Experts (MoE) architecture that activates only 10 billion parameters per token, making it incredibly fast.
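“Activates only 10 billion parameters per token” describes the routing step in a Mixture-of-Experts layer: a small gating network scores every expert, and only the top-k experts actually compute for that token. The toy sketch below shows generic top-k gating on scalars. It illustrates the technique only; it is not MiniMax’s router, and the expert count and value of k are arbitrary choices, not figures from the article.

```python
import math
from typing import Callable

def top_k_route(gate_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x: float, experts: list[Callable[[float], float]],
                gate_logits: list[float], k: int = 2) -> float:
    """Weighted sum over the routed experts only; unrouted experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))

calls: list[int] = []

def make_expert(idx: int, scale: float) -> Callable[[float], float]:
    def expert(x: float) -> float:
        calls.append(idx)  # record which experts actually computed
        return scale * x
    return expert

experts = [make_expert(i, float(i + 1)) for i in range(4)]
y = moe_forward(1.0, experts, gate_logits=[0.0, 5.0, 5.0, -1.0], k=2)
print(y, sorted(calls))  # 2.5 [1, 2]
```

In a real model the experts are feed-forward networks over vectors, but the cost argument is the same: per token, only the routed experts’ parameters are touched.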

But the killer feature is the Context Caching. At $0.03 per million tokens for cached reads, it is effectively free.

I set up a LangChain Polly swarm with 50 MiniMax agents sharing a 500k-token documentation context. They browsed, scraped, and summarized documentation for hours. The total cost? $0.42. Doing the same with GPT-4o would have cost $400.
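The caching discount itself is simple arithmetic at the table’s listed prices. This sketch compares re-reading the same context at MiniMax M2.1’s standard input rate ($0.30 per 1M tokens) against its cached-read rate ($0.03 per 1M). It charges every read at one flat rate and ignores output tokens, cache-write charges, and any storage fees, which the table doesn’t list, so treat it as a rough input-side estimate rather than a bill.

```python
INPUT_USD_PER_M = 0.30        # MiniMax M2.1 input price from the table above
CACHED_READ_USD_PER_M = 0.03  # MiniMax M2.1 cached-read price from the table above

def read_cost(context_tokens: int, reads: int, usd_per_million: float) -> float:
    """Input-side cost of sending the same context `reads` times at a flat per-1M rate."""
    return context_tokens * reads / 1_000_000 * usd_per_million

context, reads = 500_000, 100  # e.g. a 500k-token doc context read 100 times
uncached = read_cost(context, reads, INPUT_USD_PER_M)
cached = read_cost(context, reads, CACHED_READ_USD_PER_M)
print(f"uncached ${uncached:.2f} vs cached ${cached:.2f}")  # uncached $15.00 vs cached $1.50
```

Plug in your own agent count and read frequency; the 10× ratio between the two rates is what drives the savings.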

The Dark Side: The “Agentic Drift”

MiniMax is fast, but it gets distracted. When running multi-step agentic tasks (e.g., “Go to X, scrape Y, simplify Z”), it often forgets step 3 by the time it finishes step 1. It lacks the “holding power” of Codex 5.2.

The Fix: Use strict, atomic prompting. Don’t give it a 10-step plan. Give it one step, verify, then give it the next. Or use Cline in “Act Mode,” which forces step-by-step verification.
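The “one step, verify, next step” discipline is easy to enforce in code when you orchestrate the agent yourself. In this minimal sketch, `execute` and `verify` are hypothetical callables you would wire to your model client and to whatever check fits the step (a test run, a schema check, a human glance). The loop never sends step N+1 until step N passes.

```python
from typing import Callable

def run_atomic(steps: list[str],
               execute: Callable[[str, list[str]], str],
               verify: Callable[[str, str], bool],
               max_retries: int = 2) -> list[str]:
    """Send one step at a time and advance only after its output passes verification."""
    results: list[str] = []
    for step in steps:
        for _ in range(max_retries + 1):
            output = execute(step, results)  # the model sees one step plus prior results
            if verify(step, output):
                results.append(output)
                break
        else:  # no break: every attempt failed verification
            raise RuntimeError(f"step failed verification: {step!r}")
    return results

# Stub "agent" for illustration: it echoes the step it was given.
steps = ["Go to X", "Scrape Y", "Simplify Z"]
done = run_atomic(steps,
                  execute=lambda step, prior: f"done: {step}",
                  verify=lambda step, out: out == f"done: {step}")
print(done)  # ['done: Go to X', 'done: Scrape Y', 'done: Simplify Z']
```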

The “CLI Battle Station” Setup

To get the most out of this “Three-Body” reality, you need to map the models to the right tools. Here is the optimal stack for 2026:

1. The “Architect” Stack (Cursor + Codex 5.2)

Why: Cursor’s “Composer” mode allows Codex 5.2 to see your entire project. This leverages its deep reasoning to catch cross-file bugs.

Cost: High ($20/mo + API costs).

Use for: Core logic, security, migrations.

2. The “Flow” Stack (Windsurf + GLM-4.7)

Why: Windsurf’s “Cascade” feature works beautifully with GLM’s speed. The “Vibe Coding” shines here for iterating on UIs in real-time.

Cost: Medium.

Use for: Frontend, prototyping, scripts.

3. The “Swarm” Stack (Cline + MiniMax M2.1)

Why: Cline runs in your terminal and excels at “headless” tasks. Pair it with MiniMax’s cheap context to build an “Auto-Dev” that runs in the background.

Cost: Ultra-Low.

Use for: Documentation, tests, refactoring 100+ files.
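If you script your workflow, the three stacks above reduce to a small lookup table. This sketch encodes the tools, models, and cost tiers as listed. The task labels are paraphrased from each “Use for” line (“bulk refactor” stands in for “refactoring 100+ files”), and exact-match lookup is a deliberately naive assumption; a real router would need fuzzier classification.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stack:
    name: str
    tool: str
    model: str
    cost: str

ARCHITECT = Stack("Architect", "Cursor", "GPT-5.2 Codex", "High")
FLOW = Stack("Flow", "Windsurf", "GLM-4.7", "Medium")
SWARM = Stack("Swarm", "Cline", "MiniMax M2.1", "Ultra-Low")

# Task labels paraphrased from each stack's "Use for" line.
ROUTES = {
    "core logic": ARCHITECT, "security": ARCHITECT, "migrations": ARCHITECT,
    "frontend": FLOW, "prototyping": FLOW, "scripts": FLOW,
    "documentation": SWARM, "tests": SWARM, "bulk refactor": SWARM,
}

def pick_stack(task_type: str) -> Stack:
    """Exact-match lookup; raises KeyError for task types with no mapping."""
    return ROUTES[task_type.strip().lower()]

print(pick_stack("Security").model)  # GPT-5.2 Codex
```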

High-Performance “Cost Maxing” Strategy

If you are burning money on API fees, you are doing it wrong. Here is the “MiniMax-First” Protocol to cut bills by 90%:

1. The Context Cache: Load your entire codebase (up to 1M tokens) into MiniMax M2.1’s cache.

2. The Cheap Retrieval: Use MiniMax to find the relevant files and functions for your task ($0.03 per 1M cached tokens).

3. The Surgical Strike: Extract only the relevant code (say, 500 lines) and pass it to Codex 5.2 for the actual edit.

4. The Formatting: Pass the result back to GLM-4.7 to “prettify” or document it.
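The protocol is a four-stage pipeline in which each model sees only what it needs. In this sketch, `retrieve`, `edit`, and `prettify` are hypothetical callables standing in for your MiniMax, Codex, and GLM clients (no vendor SDK is implied), the codebase is a plain path-to-source dict, and the provider-side caching from step 1 is assumed to happen behind `retrieve`. The stubs in the usage example only demonstrate the data flow.

```python
from typing import Callable

Retriever = Callable[[str, dict[str, str]], list[str]]  # task, codebase -> relevant paths
TextModel = Callable[[str], str]                           # prompt -> text

def minimax_first(task: str, codebase: dict[str, str], retrieve: Retriever,
                  edit: TextModel, prettify: TextModel, max_lines: int = 500) -> str:
    # Steps 1-2: the cheap, cached model only picks which files matter.
    paths = retrieve(task, codebase)
    # Step 3: send just those files (capped at max_lines) to the expensive model.
    lines: list[str] = []
    for path in paths:
        lines.extend(codebase[path].splitlines())
    excerpt = "\n".join(lines[:max_lines])
    patched = edit(f"Task: {task}\n\nCode:\n{excerpt}")
    # Step 4: the formatting model tidies or documents the result.
    return prettify(patched)

# Stubs that only demonstrate the data flow.
codebase = {"auth.py": "def login():\n    pass", "ui.py": "def render():\n    pass"}
result = minimax_first(
    "fix auth bug", codebase,
    retrieve=lambda task, cb: [p for p in cb if p.removesuffix(".py") in task],
    edit=lambda prompt: prompt.split("Code:\n", 1)[1] + "  # patched",
    prettify=lambda code: "# Reviewed\n" + code,
)
print(result)
```

The design point is that the expensive model never receives the full codebase; only the retrieved excerpt crosses that boundary.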

The Verdict

Stop looking for a single “winner.” The winner is the engineer who knows which model to hire for which job.

- Use Codex 5.2 to be your Senior Principal Engineer.

- Use GLM-4.7 to be your UI/UX Designer.

- Use MiniMax M2.1 to be your army of interns.

The future of code isn’t about writing it yourself. It’s about orchestrating the collision of these three realities.